🚀 Deformable DETR model trained on LVIS

A Deformable DETR (Detection Transformer) model trained on the LVIS dataset with 1203 classes, offering high-precision object detection.

🚀 Quick Start

Deformable DETR is a powerful object-detection model trained on LVIS. It was introduced in the paper Detecting Twenty-thousand Classes using Image-level Supervision by Zhou et al. and first released in this repository. This model corresponds to the "Box-Supervised_DeformDETR_R50_4x" checkpoint from the original repository.

Disclaimer: The team releasing Detic did not write a model card for this model, so this model card is written by the Hugging Face team.

✨ Features

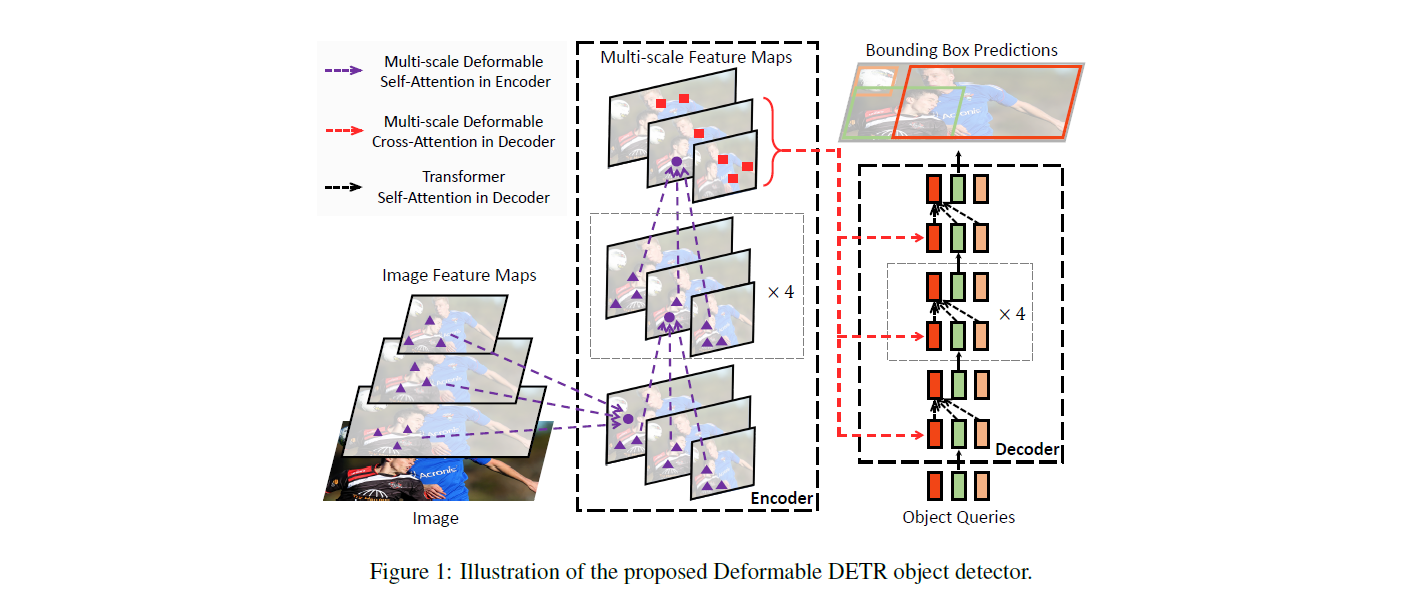

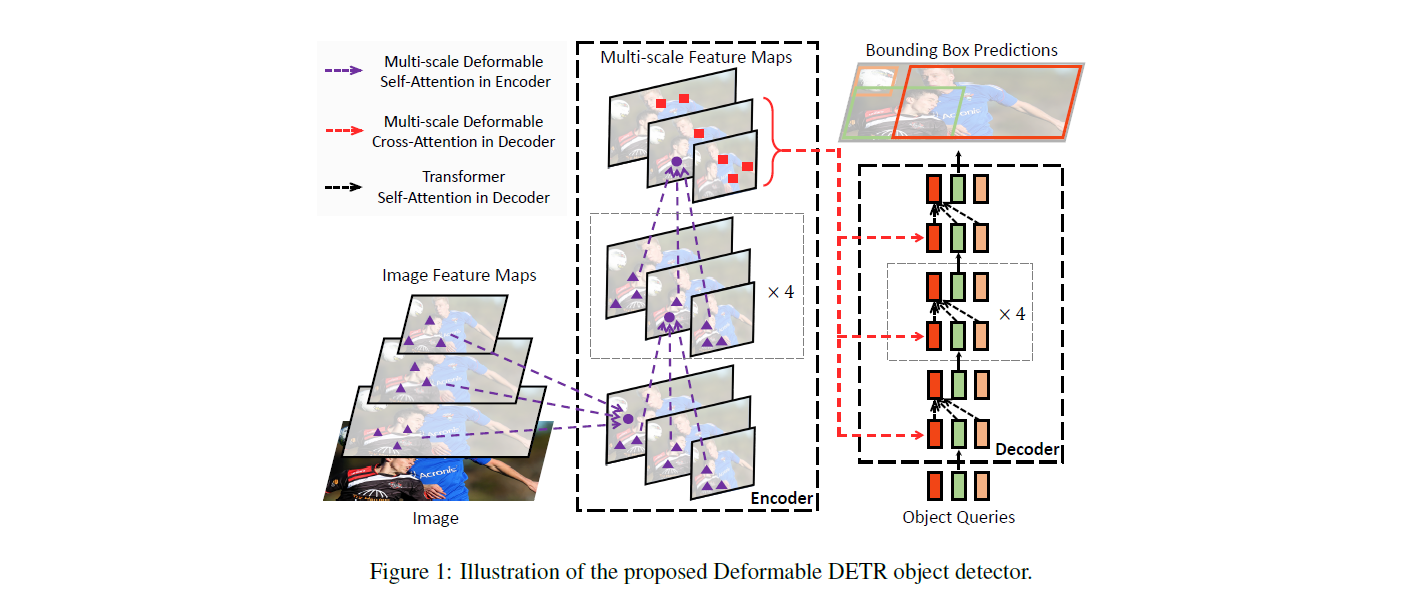

- Encoder-Decoder Architecture: The DETR model is an encoder-decoder transformer with a convolutional backbone.

- Object Queries: It uses object queries to detect objects in an image. For COCO, the number of object queries is set to 100.

- Bipartite Matching Loss: Trained using a "bipartite matching loss" with the Hungarian matching algorithm for optimal one-to-one mapping.
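The one-to-one matching named in the last bullet can be illustrated with a small sketch. It searches every permutation by brute force, which is only practical for a handful of queries; the Hungarian algorithm finds the same optimum in polynomial time (for example via `scipy.optimize.linear_sum_assignment`). The function name and the toy cost matrix are hypothetical and not taken from the original repository.

```python
from itertools import permutations

def match_queries_to_targets(cost):
    """Return the cheapest one-to-one assignment for a square cost matrix.

    cost[q][t] is the cost of assigning query q to target t.
    Brute force over all permutations: an illustrative stand-in for the
    Hungarian algorithm, which yields the same optimum far more efficiently.
    """
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[q][perm[q]] for q in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total

# Toy example (made-up costs): 3 queries, 2 real targets plus 1 padded
# "no object" slot in the last column.
cost = [
    [0.9, 0.1, 0.5],
    [0.2, 0.8, 0.5],
    [0.7, 0.6, 0.5],
]
assignment, total = match_queries_to_targets(cost)
# assignment[q] is the index of the target matched to query q
print(assignment, total)
```

In this toy matrix, queries 0 and 1 each take a real target and query 2 is left with the padded slot, mirroring how unmatched queries are trained to predict "no object".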

📚 Documentation

Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs for object detection: a linear layer for class labels and an MLP (multi-layer perceptron) for bounding boxes. The model uses object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.
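A minimal PyTorch sketch of the two prediction heads described above. The class name `DetectionHeads`, the hidden size of 256, and the three-layer MLP depth are assumptions made for illustration; the actual checkpoint's layer sizes may differ, and the sketch leaves out how a "no object" prediction is represented.

```python
import torch
from torch import nn

class DetectionHeads(nn.Module):
    """Hypothetical stand-in for the heads applied to each decoder output."""

    def __init__(self, hidden_dim=256, num_classes=1203):
        super().__init__()
        # Linear layer producing one logit per class
        self.class_head = nn.Linear(hidden_dim, num_classes)
        # Small MLP producing 4 box coordinates (depth is an assumption)
        self.box_head = nn.Sequential(
            nn.Linear(hidden_dim, hidden_dim), nn.ReLU(),
            nn.Linear(hidden_dim, hidden_dim), nn.ReLU(),
            nn.Linear(hidden_dim, 4),
        )

    def forward(self, decoder_states):
        logits = self.class_head(decoder_states)
        # Sigmoid keeps box coordinates in [0, 1], i.e. relative to image size
        boxes = self.box_head(decoder_states).sigmoid()
        return logits, boxes

heads = DetectionHeads()
# Batch of 1 image, 100 object queries (the count quoted in the text)
decoder_states = torch.randn(1, 100, 256)
logits, boxes = heads(decoder_states)
print(logits.shape, boxes.shape)
```

Each of the 100 queries yields one class prediction and one box, so the heads run independently per query.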

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N. The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
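The bounding-box term can be sketched in plain Python as follows. Boxes are assumed to be in `(x_min, y_min, x_max, y_max)` corner format with positive area, and the weights `l1_weight=5.0` and `giou_weight=2.0` are illustrative assumptions rather than values confirmed for this checkpoint.

```python
def box_area(box):
    return (box[2] - box[0]) * (box[3] - box[1])

def l1_distance(pred, target):
    # Sum of absolute coordinate differences
    return sum(abs(p - t) for p, t in zip(pred, target))

def generalized_iou(a, b):
    # Intersection rectangle (zero area if the boxes do not overlap)
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    iou = inter / union
    # Smallest axis-aligned box enclosing both a and b
    hull = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    # GIoU penalizes the empty space inside the enclosing box
    return iou - (hull - union) / hull

def box_loss(pred, target, l1_weight=5.0, giou_weight=2.0):
    # Linear combination of the L1 term and the generalized IoU term
    return (l1_weight * l1_distance(pred, target)
            + giou_weight * (1.0 - generalized_iou(pred, target)))

same = box_loss((0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 1.0, 1.0))
apart = generalized_iou((0.0, 0.0, 1.0, 1.0), (2.0, 0.0, 3.0, 1.0))
print(same, apart)
```

Unlike plain IoU, the generalized IoU stays informative for non-overlapping boxes: it becomes more negative as the boxes move apart, which still gives the optimizer a useful signal.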

Intended uses & limitations

You can use the raw model for object detection. See the model hub to look for all available Deformable DETR models.

💻 Usage Examples

Basic Usage

    from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
    import torch
    from PIL import Image
    import requests

    # Download a sample image from the COCO validation set
    url = "http://images.cocodataset.org/val2017/000000039769.jpg"
    image = Image.open(requests.get(url, stream=True).raw)

    # Load the image processor and the pretrained model
    processor = AutoImageProcessor.from_pretrained("facebook/deformable-detr-box-supervised")
    model = DeformableDetrForObjectDetection.from_pretrained("facebook/deformable-detr-box-supervised")

    inputs = processor(images=image, return_tensors="pt")
    outputs = model(**inputs)

    # Convert outputs (bounding boxes and class logits) to absolute pixel boxes.
    # PIL reports (width, height); target_sizes expects (height, width).
    target_sizes = torch.tensor([image.size[::-1]])
    results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

    # Print every detection that passed the 0.7 confidence threshold
    for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
        box = [round(i, 2) for i in box.tolist()]
        print(
            f"Detected {model.config.id2label[label.item()]} with confidence "
            f"{round(score.item(), 3)} at location {box}"
        )
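As a rough illustration of what the post-processing step does with `target_sizes`: the model's raw `pred_boxes` are, as far as the Transformers documentation describes, normalized `(center_x, center_y, width, height)` values, which are converted to absolute `(x_min, y_min, x_max, y_max)` pixel coordinates. The helper name `to_absolute_corners` and the image dimensions below are hypothetical; this is a sketch of the idea, not the library's implementation.

```python
def to_absolute_corners(box_cxcywh, image_height, image_width):
    """Convert a normalized (cx, cy, w, h) box to pixel (x0, y0, x1, y1)."""
    cx, cy, w, h = box_cxcywh
    x_min = (cx - w / 2) * image_width
    y_min = (cy - h / 2) * image_height
    x_max = (cx + w / 2) * image_width
    y_max = (cy + h / 2) * image_height
    return [x_min, y_min, x_max, y_max]

# A box centered in a hypothetical 640x480 image,
# covering 20% of the width and 40% of the height
corners = to_absolute_corners([0.5, 0.5, 0.2, 0.4], image_height=480, image_width=640)
print([round(c, 2) for c in corners])
```

This is why the usage example passes the image size as (height, width): the x coordinates are scaled by the width and the y coordinates by the height.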

🔧 Technical Details

Evaluation results

This model achieves 31.7 box mAP and 21.4 mAP (rare classes) on LVIS.

BibTeX entry and citation info

    @misc{https://doi.org/10.48550/arxiv.2010.04159,
      doi = {10.48550/ARXIV.2010.04159},
      url = {https://arxiv.org/abs/2010.04159},
      author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},
      keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
      title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},
      publisher = {arXiv},
      year = {2020},
      copyright = {arXiv.org perpetual, non-exclusive license}
    }

📄 License

This model is licensed under the Apache 2.0 license.

| Property | Details |
| --- | --- |
| Model Type | Deformable DETR model trained on LVIS |
| Training Data | LVIS dataset with 1203 classes |
| Widget Examples | Savanna, Football Match, Airport (with corresponding image URLs) |