MetaCLIP B32 FullCC2.5B

MetaCLIP is a vision-language model trained on 2.5 billion image-text pairs from CommonCrawl (CC) to learn a shared image-text embedding space.

Downloads 413

Release Time: 10/7/2023

Model Overview

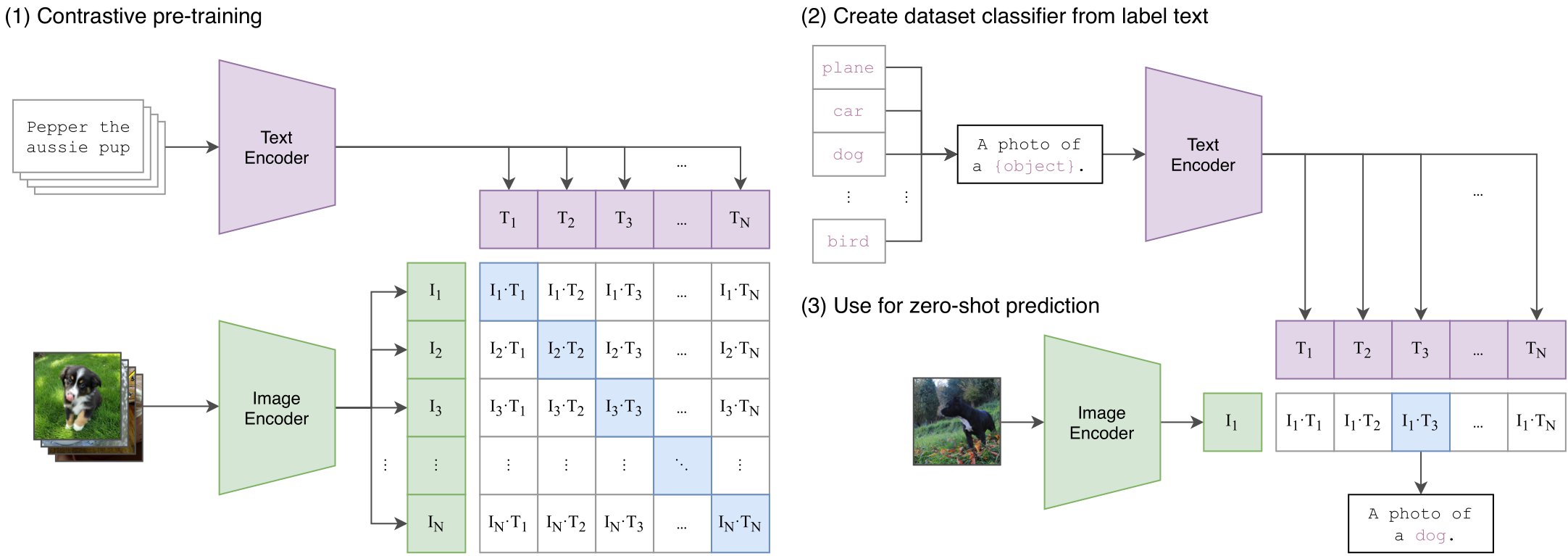

Developed at Meta, this model comes from an effort to reveal how CLIP's training data was curated and filtered. It supports tasks such as zero-shot image classification and text-based image retrieval.

Model Features

Large-scale training data

Trained on 2.5 billion image-text pairs from CommonCrawl, covering a wide range of visual concepts

Open data process

First public disclosure of data filtering methods for CLIP-like models, improving transparency

Multimodal embedding space

Constructs a unified image-text embedding space supporting cross-modal retrieval

Model Capabilities

Zero-shot image classification

Text-based image retrieval

Image-based text retrieval

Cross-modal feature extraction

Use Cases

Content retrieval

Image search engine

Retrieve relevant images using natural language descriptions
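
The ranking step behind such a search engine can be sketched as follows. This is a minimal illustration, not the model's actual API: it assumes the text query and each image have already been encoded into the shared embedding space, and the names `rank_images`, `toy_index`, and `toy_query`, along with the 3-dimensional vectors, are hypothetical stand-ins for real encoder outputs.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def rank_images(text_embedding, image_embeddings, top_k=3):
    """Return (image_id, cosine_similarity) pairs, best match first."""
    query = l2_normalize(text_embedding)
    scored = []
    for image_id, embedding in image_embeddings.items():
        unit = l2_normalize(embedding)
        score = sum(q * e for q, e in zip(query, unit))
        scored.append((image_id, score))
    # Higher cosine similarity means the image is closer to the query text.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy vectors; a real system would store image-encoder outputs.
toy_index = {
    "dog.jpg": [0.9, 0.1, 0.0],
    "cat.jpg": [0.1, 0.9, 0.0],
    "car.jpg": [0.0, 0.1, 0.9],
}
toy_query = [0.8, 0.2, 0.1]  # stand-in for the embedding of "a photo of a dog"

results = rank_images(toy_query, toy_index, top_k=2)
for image_id, score in results:
    print(f"{image_id}: {score:.3f}")
```

Because the image embeddings do not depend on the query, they can be computed once and stored, so only the text query needs encoding at search time.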

Intelligent classification

Zero-shot image classification

Classify images of new categories without specific training
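
One common way to do this with CLIP-style models is to embed a text prompt per candidate label, compare each with the image embedding, and apply a softmax over the scaled similarities. The sketch below assumes the embeddings are already available. The function `zero_shot_classify`, the toy vectors, and the `logit_scale` value of 100.0 are illustrative assumptions rather than values taken from this model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, label_embeddings, logit_scale=100.0):
    """Map each label prompt to a probability via softmax over scaled similarities."""
    logits = {
        label: logit_scale * cosine(image_embedding, embedding)
        for label, embedding in label_embeddings.items()
    }
    # Subtract the maximum logit before exponentiating for numerical stability.
    max_logit = max(logits.values())
    exps = {label: math.exp(value - max_logit) for label, value in logits.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


# Hypothetical toy vectors standing in for text-encoder outputs of each prompt.
toy_labels = {
    "a photo of a zebra": [0.7, 0.7, 0.1],
    "a photo of a horse": [0.9, 0.2, 0.1],
    "a photo of a bicycle": [0.0, 0.1, 1.0],
}
toy_image = [0.6, 0.8, 0.0]  # stand-in for the image-encoder output

probs = zero_shot_classify(toy_image, toy_labels)
best_label = max(probs, key=probs.get)
print(best_label)
```

New categories are added by writing new prompts, so no retraining is involved. The scale factor only sharpens the distribution and does not change which label ranks first.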
