MetaCLIP B16 400M

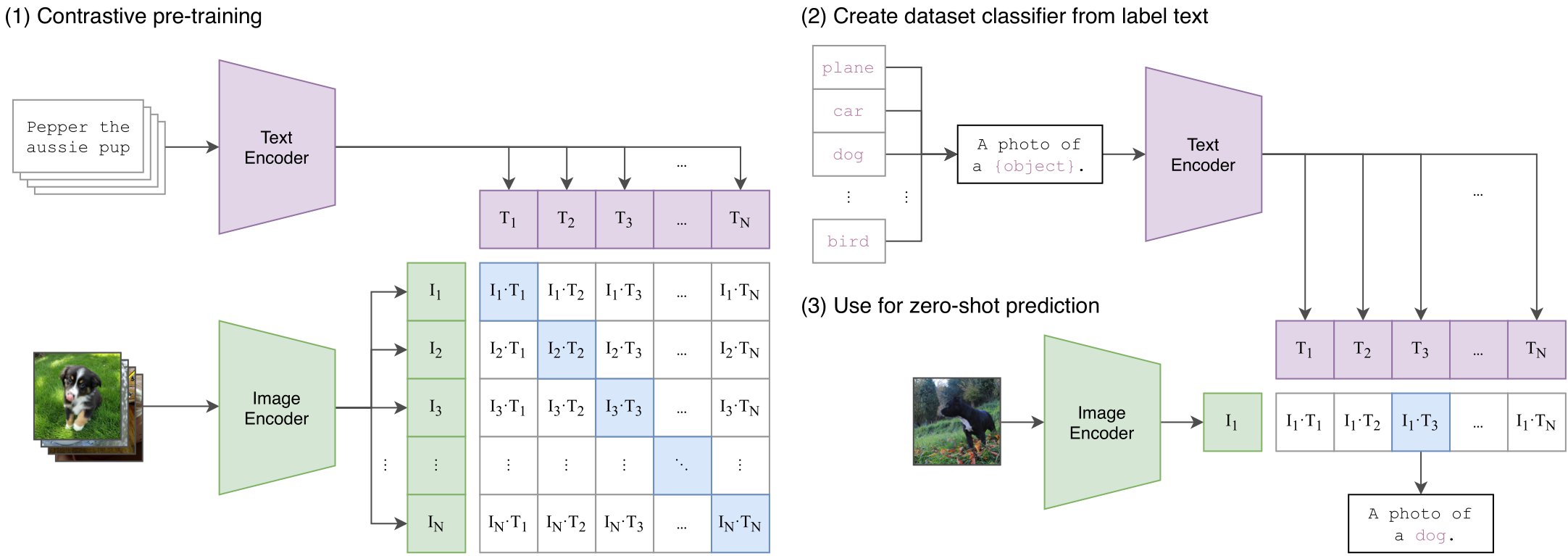

MetaCLIP is a vision-language model trained on CommonCrawl data to build a shared image-text embedding space.

Downloads 51

Release Time: 10/9/2023

Model Overview

This model applies the MetaCLIP framework to 400 million data points from CommonCrawl. MetaCLIP was developed to reveal how CLIP's training data was selected, and the resulting model supports cross-modal understanding between images and text.

Model Features

Public data training

Trained on the open CommonCrawl dataset with high data transparency

Cross-modal understanding

Can process both visual and textual information to establish shared embedding spaces

Zero-shot learning

Capable of performing new tasks without task-specific training
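
As a rough illustration of how zero-shot classification can work in a shared embedding space, the sketch below compares one image embedding against the embeddings of candidate label prompts and converts the similarities to probabilities. The 3-dimensional vectors, the prompt wording, the `zero_shot_classify` helper, and the `scale` value are all assumptions made for this example; in practice the embeddings would come from the model's image and text encoders.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_emb, label_embs, scale=100.0):
    """Return {label: probability} via a softmax over scaled cosine similarities.

    `scale` is an assumed temperature, not a value taken from the model.
    """
    logits = {label: scale * cosine(image_emb, emb) for label, emb in label_embs.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(v - top) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Toy vectors standing in for real text-encoder outputs (assumption).
label_embs = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
# Toy vector standing in for a real image-encoder output (assumption).
image_emb = [0.8, 0.2, 0.1]

probs = zero_shot_classify(image_emb, label_embs)
best_label = max(probs, key=probs.get)
print(best_label)
```

No label-specific training is involved: adding a new class only requires embedding one more prompt.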

Model Capabilities

Zero-shot image classification

Text-based image retrieval

Image-based text retrieval

Cross-modal feature extraction

Use Cases

Content retrieval

Image search engine

Retrieve relevant images using natural language descriptions
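
A minimal sketch of the ranking step behind such a search engine, assuming image embeddings have already been computed and stored. The file names, the toy vectors, and the `search` helper are hypothetical; a real system would embed the query text with the model's text encoder and would likely use an approximate nearest-neighbor index instead of a linear scan.

```python
import math

def normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def search(query_emb, index, top_k=2):
    """Return the top_k (image_id, score) pairs, best match first."""
    q = normalize(query_emb)
    scored = [
        (image_id, sum(a * b for a, b in zip(q, normalize(emb))))
        for image_id, emb in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical index: image file name -> precomputed image embedding (toy values).
index = {
    "beach.jpg": [0.9, 0.1, 0.2],
    "forest.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.2, 0.2, 0.9],
}
# Toy embedding standing in for an encoded natural language query (assumption).
query_emb = [0.8, 0.2, 0.3]

results = search(query_emb, index)
for image_id, score in results:
    print(f"{image_id}: {score:.3f}")
```

Because the image embeddings do not depend on the query, they can be computed once and reused for every search.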

Intelligent labeling

Automatic image tagging

Assign descriptive labels to unlabeled images by scoring each image against a set of candidate labels
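
One way tagging could be sketched is as multi-label selection: keep every candidate tag whose embedding is similar enough to the image embedding. The tag vocabulary, the toy vectors, the `tag_image` helper, and the 0.5 threshold are assumptions for illustration; similarity scores from a real model fall in a different range, so the threshold would need tuning on real data.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def tag_image(image_emb, tag_embs, threshold=0.5):
    """Return every tag whose similarity meets the threshold, best match first."""
    scores = {tag: cosine(image_emb, emb) for tag, emb in tag_embs.items()}
    kept = [tag for tag, score in scores.items() if score >= threshold]
    return sorted(kept, key=lambda tag: scores[tag], reverse=True)

# Hypothetical tag vocabulary with toy text embeddings (assumption).
tag_embs = {
    "outdoor": [0.7, 0.7, 0.0],
    "animal": [0.9, 0.0, 0.1],
    "vehicle": [0.0, 0.1, 0.9],
}
# Toy vector standing in for a real image embedding (assumption).
image_emb = [0.8, 0.5, 0.1]

tags = tag_image(image_emb, tag_embs)
print(tags)
```

Unlike the single-label case, an image here can receive several tags or none, depending on the threshold.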
