Metaclip L14 Fullcc2.5b

MetaCLIP is a large-scale vision-language model trained on 2.5 billion image-text pairs from CommonCrawl (CC), released to document the data curation methodology behind CLIP

Downloads 172

Release Time: 10/9/2023

Model Overview

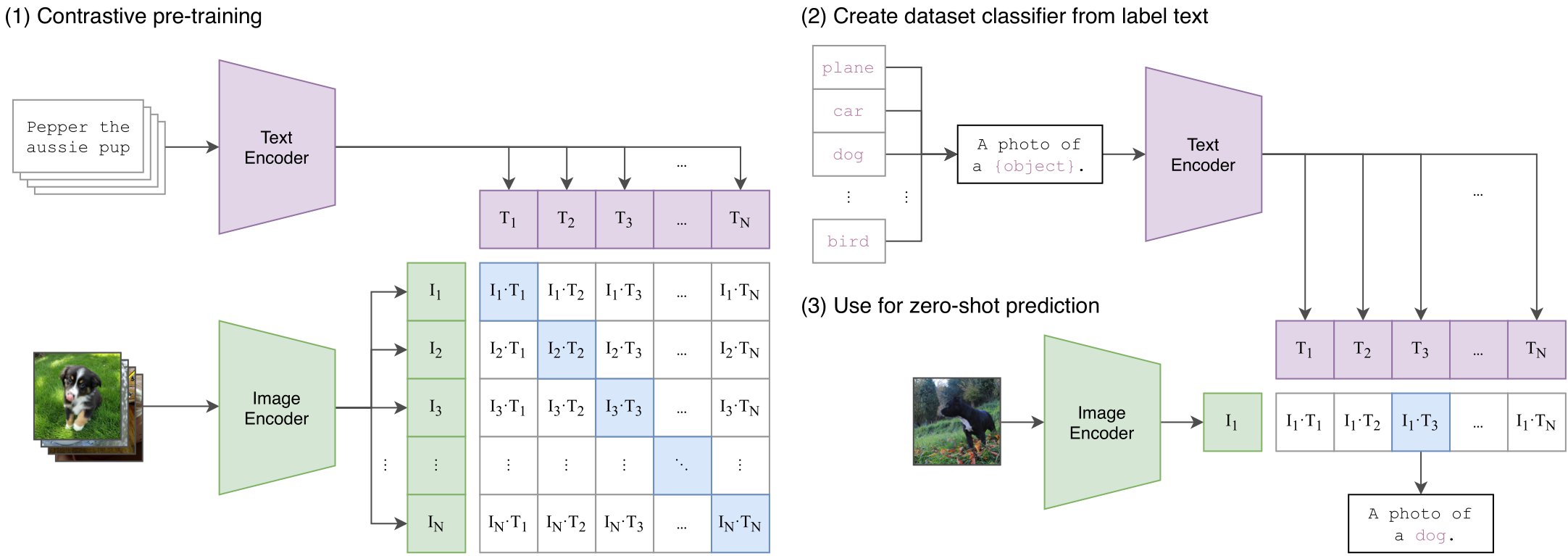

This model constructs a shared image-text embedding space, supporting tasks like zero-shot image classification and cross-modal retrieval

Model Features

Transparent Data Curation

Documents a curation procedure for CLIP-style training data, covering filtering details that OpenAI did not disclose

Large-scale Training

Trained on 2.5 billion image-text pairs from CommonCrawl, covering a broad range of visual concepts

Fine-grained Patch Processing

Uses a ViT-L/14 image encoder that splits each image into 14×14-pixel patches, preserving more visual detail than larger-patch variants
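
As a rough illustration of what a 14×14 patch size implies, the sketch below counts how many patches a square input is split into. The 224×224 input resolution and the helper name `patch_grid` are assumptions made for illustration; neither is stated on this page.

```python
from typing import Tuple


def patch_grid(image_size: int, patch_size: int = 14) -> Tuple[int, int]:
    """Return (patches_per_side, total_patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


# Compare patch sizes at an assumed 224x224 input resolution.
for size in (14, 16, 32):
    per_side, total = patch_grid(224, size)
    print(f"patch {size}x{size}: {per_side}x{per_side} grid, {total} patches")
```

Under that assumption, a smaller patch size yields more patches per image, which is the sense in which a 14-pixel patch keeps finer detail than a 16- or 32-pixel one.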

Model Capabilities

Zero-shot image classification

Text-based image retrieval

Image-based text retrieval

Cross-modal feature extraction

Use Cases

Content Retrieval

Multimodal Search Engine

Retrieves relevant image content through natural language queries
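
A minimal sketch of how such a search could rank images once embeddings exist: score every image embedding against the query embedding by cosine similarity and keep the best matches. The 3-dimensional vectors, the file names, and the helper `rank_images` are hypothetical stand-ins; in practice the vectors would come from the model's text and image encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_images(query_embedding, image_embeddings, top_k=3):
    """Return the top_k (name, score) pairs, best match first."""
    scored = [
        (name, cosine(query_embedding, emb))
        for name, emb in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy embeddings (assumption): real ones are produced by the encoders.
image_index = {
    "dog.jpg": [0.9, 0.1, 0.0],
    "cat.jpg": [0.1, 0.9, 0.0],
    "car.jpg": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.1]  # stands in for the embedded text query

results = rank_images(query, image_index, top_k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

Because text and images share one embedding space, the same ranking function works in the other direction, with an image embedding as the query and caption embeddings as the index.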

Intelligent Classification

Zero-shot Image Classification

Recognizes new categories without requiring specific training data
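
Zero-shot classification in CLIP-style models is usually framed as comparing one image embedding against the embeddings of text prompts, one per candidate label, then applying a softmax. The sketch below shows that step only. The toy vectors, the prompt wording, the helper `zero_shot_probs`, and the `logit_scale` value of 100 are assumptions for illustration, not values taken from this model.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_embedding, label_embeddings, logit_scale=100.0):
    """Softmax over scaled cosine similarities between image and prompts."""
    img = normalize(image_embedding)
    logits = {}
    for label, emb in label_embeddings.items():
        txt = normalize(emb)
        logits[label] = logit_scale * sum(a * b for a, b in zip(img, txt))
    # Subtract the max logit for numerical stability before exponentiating.
    peak = max(logits.values())
    exps = {label: math.exp(v - peak) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Toy prompt embeddings (assumption): real ones come from the text encoder.
prompts = {
    "a photo of a dog": [1.0, 0.0, 0.0],
    "a photo of a cat": [0.0, 1.0, 0.0],
    "a photo of a car": [0.0, 0.0, 1.0],
}
image = [0.7, 0.2, 0.1]  # stands in for the embedded input image

probs = zero_shot_probs(image, prompts)
best = max(probs, key=probs.get)
print(best)
```

Adding a new category only requires writing another prompt and embedding it; no classifier weights are retrained.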
