🚀 CogVideoX-Fun

CogVideoX-Fun is a modified pipeline based on the CogVideoX architecture. It offers more flexible generation conditions and can be used to generate AI images and videos, as well as to train baseline and LoRA models for the Diffusion Transformer.

🚀 Quick Start

1. Cloud Usage: Aliyun DSW/Docker

a. Via Alibaba Cloud DSW

Alibaba Cloud PAI-DSW offers free GPU time through its Freetier program. You can apply once, and the quota is valid for 3 months after approval. Once you have it, you can start CogVideoX-Fun on PAI-DSW within 5 minutes.

b. Via ComfyUI

CogVideoX-Fun can also be used through ComfyUI. For details, please refer to the ComfyUI README.

c. Via Docker

If you use Docker, make sure that the GPU driver and CUDA environment are correctly installed on your machine. Then, execute the following commands in sequence:

```shell
# pull image
docker pull mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:cogvideox_fun

# start a container from the image
docker run -it -p 7860:7860 --network host --gpus all --security-opt seccomp:unconfined --shm-size 200g mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:cogvideox_fun

# clone code
git clone https://github.com/aigc-apps/CogVideoX-Fun.git

# enter the CogVideoX-Fun directory
cd CogVideoX-Fun

# download weights
mkdir -p models/Diffusion_Transformer
mkdir -p models/Personalized_Model

wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/cogvideox_fun/Diffusion_Transformer/CogVideoX-Fun-2b-InP.tar.gz -O models/Diffusion_Transformer/CogVideoX-Fun-2b-InP.tar.gz
cd models/Diffusion_Transformer/
tar -xvf CogVideoX-Fun-2b-InP.tar.gz
cd ../../
```
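If `wget` or `tar` is unavailable (for example on Windows outside Docker), the download-and-extract steps above can be reproduced with the Python standard library. This is a minimal sketch, not part of the CogVideoX-Fun repository: the helper names `download`, `extract_archive`, and `fetch_default_weights` are hypothetical, and it assumes you run it from the `CogVideoX-Fun` directory.

```python
import tarfile
import urllib.request
from pathlib import Path

# URL copied from the wget command above.
WEIGHTS_URL = (
    "https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/cogvideox_fun/"
    "Diffusion_Transformer/CogVideoX-Fun-2b-InP.tar.gz"
)


def download(url: str, dest: Path) -> Path:
    """Save `url` to `dest`, creating parent folders (like `wget URL -O dest`)."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(url, str(dest))
    return dest


def extract_archive(archive: Path, target_dir: Path) -> None:
    """Unpack a (possibly compressed) tar archive into `target_dir` (like `tar -xvf`)."""
    target_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:*") as tar:  # "r:*" auto-detects gzip
        if hasattr(tarfile, "data_filter"):
            # Recent Python releases can reject unsafe member paths during extraction.
            tar.extractall(target_dir, filter="data")
        else:
            tar.extractall(target_dir)


def fetch_default_weights(models_dir: Path = Path("models")) -> None:
    """Hypothetical convenience wrapper mirroring the shell steps above."""
    (models_dir / "Personalized_Model").mkdir(parents=True, exist_ok=True)
    dit_dir = models_dir / "Diffusion_Transformer"
    archive = download(WEIGHTS_URL, dit_dir / "CogVideoX-Fun-2b-InP.tar.gz")
    extract_archive(archive, dit_dir)
```

Calling `fetch_default_weights()` once performs the whole sequence; the 2B archive alone is several gigabytes, so expect the download to take a while.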

2. Local Installation: Environment Check/Download/Installation

a. Environment Check

We have verified that CogVideoX-Fun runs in the following environments:

Details for Windows:

- Operating System: Windows 10
- Python: 3.10 and 3.11
- PyTorch: 2.2.0
- CUDA: 11.8 and 12.1
- cuDNN: 8+
- GPU: NVIDIA RTX 3060 12GB and NVIDIA RTX 3090 24GB

Details for Linux:

- Operating System: Ubuntu 20.04, CentOS
- Python: 3.10 and 3.11
- PyTorch: 2.2.0
- CUDA: 11.8 and 12.1
- cuDNN: 8+
- GPU: NVIDIA V100 16GB, NVIDIA A10 24GB, NVIDIA A100 40GB, and NVIDIA A100 80GB

You need approximately 60 GB of free disk space, so please check before installing.
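The checks above (Python version, PyTorch/CUDA availability, and free disk space) can be scripted before you install anything. This is a minimal sketch using the standard library plus an optional `import torch`; the helper names are hypothetical, and the thresholds are simply copied from the lists above.

```python
import platform
import shutil
import sys

# Values copied from the environment lists and disk-space note above.
VERIFIED_PYTHON = {(3, 10), (3, 11)}
MIN_FREE_GB = 60


def python_supported(version=sys.version_info) -> bool:
    """True if the (major, minor) Python version is one of the verified ones."""
    return (version[0], version[1]) in VERIFIED_PYTHON


def free_disk_gb(path: str = ".") -> float:
    """Free space at `path` in GiB (1024**3 bytes), slightly stricter than decimal GB."""
    return shutil.disk_usage(path).free / 1024**3


def report(path: str = ".") -> dict:
    """Collect the environment facts that the verified-environment lists refer to."""
    info = {
        "os": platform.system(),
        "python": platform.python_version(),
        "python_verified": python_supported(),
        "free_gb": round(free_disk_gb(path), 1),
    }
    info["enough_disk"] = info["free_gb"] >= MIN_FREE_GB
    try:
        import torch  # optional: only reported when PyTorch is installed
    except ImportError:
        info["torch"] = None
    else:
        info["torch"] = torch.__version__
        info["cuda_available"] = torch.cuda.is_available()
        info["cuda_version"] = torch.version.cuda  # None for CPU-only builds
    return info


if __name__ == "__main__":
    for key, value in report().items():
        print(f"{key}: {value}")
```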

b. Weight Placement

We recommend placing the weights in the following paths:

```
📦 models/
├── 📂 Diffusion_Transformer/
│   ├── 📂 CogVideoX-Fun-2b-InP/
│   └── 📂 CogVideoX-Fun-5b-InP/
└── 📂 Personalized_Model/
    └── your trained transformer model / your trained LoRA model (for UI load)
```
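A quick way to confirm that the weights landed where the UI and scripts expect them is to test for the folders in the tree above. This is a hypothetical helper, not part of the repository; you only need the model(s) you actually downloaded, so a `False` for the other one is expected.

```python
from pathlib import Path
from typing import Dict

# Folder names taken from the recommended layout above.
EXPECTED_MODELS = ("CogVideoX-Fun-2b-InP", "CogVideoX-Fun-5b-InP")


def check_layout(root: str = "models") -> Dict[str, bool]:
    """Map each recommended folder to whether it exists under `root`."""
    base = Path(root)
    status = {
        name: (base / "Diffusion_Transformer" / name).is_dir()
        for name in EXPECTED_MODELS
    }
    status["Personalized_Model"] = (base / "Personalized_Model").is_dir()
    return status


if __name__ == "__main__":
    for folder, present in check_layout().items():
        print(f"{'ok     ' if present else 'missing'}  {folder}")
```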

✨ Features

CogVideoX-Fun is a pipeline modified from the CogVideoX architecture. As a more flexible variant of CogVideoX, it can be used to generate AI images and videos and to train baseline and LoRA models for the Diffusion Transformer. The pre-trained CogVideoX-Fun models can directly generate videos at different resolutions, about 6 seconds long at 8 fps (1 to 49 frames). Users can also train their own baseline and LoRA models for specific style transformations.
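The "about 6 seconds" figure follows directly from the frame count and frame rate: 49 frames played at 8 fps last 49 / 8 = 6.125 s. A tiny sketch of that arithmetic (the function name is hypothetical):

```python
MAX_FRAMES = 49  # upper bound stated above (1 to 49 frames)
FPS = 8          # frame rate stated above


def clip_seconds(num_frames: int, fps: float = FPS) -> float:
    """Playback length of a clip with `num_frames` frames at `fps` frames per second."""
    if not 1 <= num_frames <= MAX_FRAMES:
        raise ValueError(f"expected 1..{MAX_FRAMES} frames, got {num_frames}")
    return num_frames / fps


print(clip_seconds(49))  # 6.125
```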

New Features:

- Code released! Supports Windows and Linux. The 2B and 5B models support video generation at any resolution from 256x256x49 to 1024x1024x49. [2024.09.18]

Function Overview:

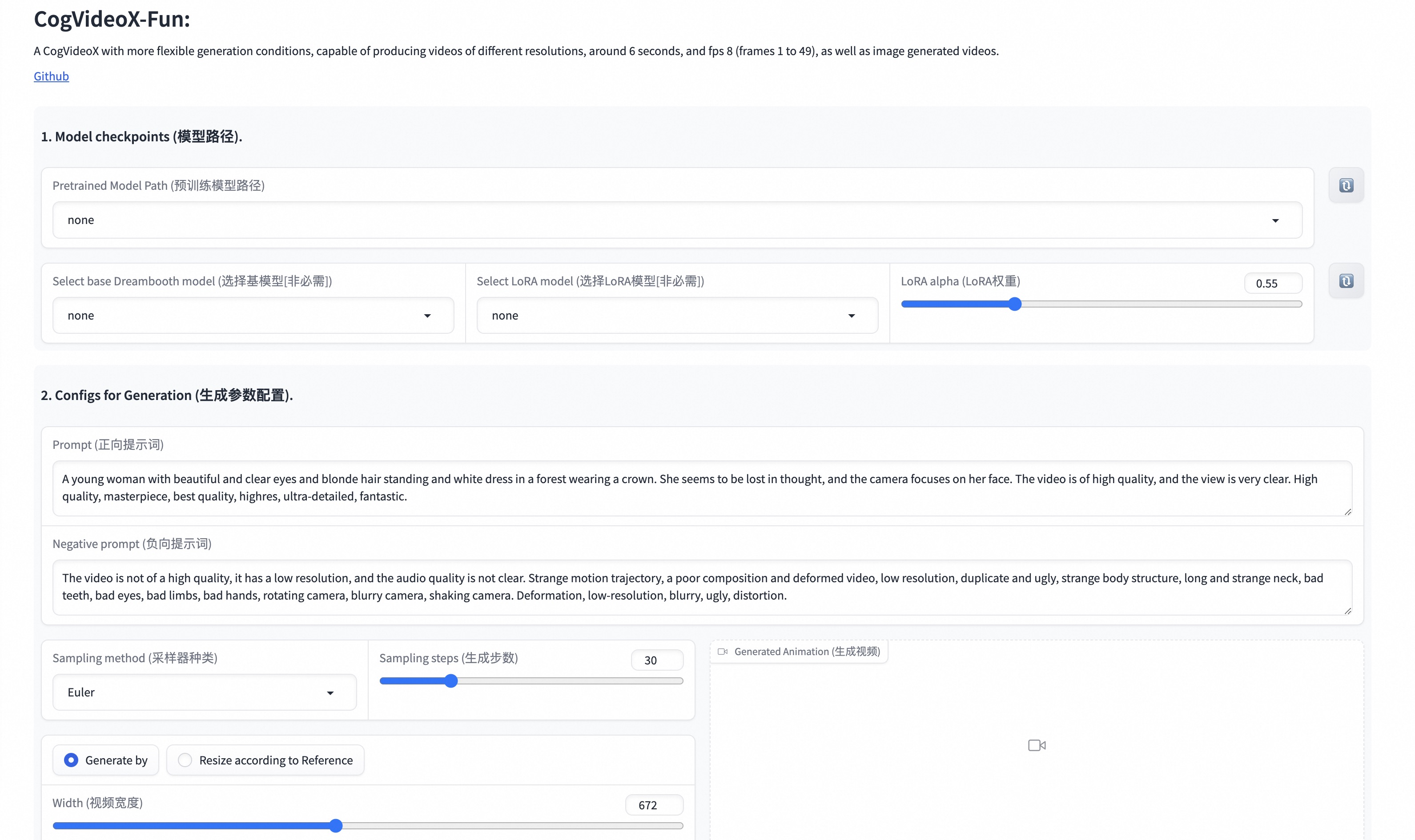

CogVideoX-Fun also provides a web UI, launched with `app.py` (see the usage steps below).

💻 Usage Examples

1. Generation

a. Video Generation

i. Run Python File

- Step 1: Download the corresponding weights and place them in the `models` folder.
- Step 2: Modify `prompt`, `neg_prompt`, `guidance_scale`, and `seed` in the `predict_t2v.py` file.
- Step 3: Run the `predict_t2v.py` file and wait for the generation result. The result will be saved in the `samples/cogvideox-fun-videos-t2v` folder.
- Step 4: To combine other self-trained backbones and LoRA weights, modify the corresponding settings in `predict_t2v.py`, such as `lora_path`, as needed.
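Steps 3 and 4 can be wrapped in a small script that runs the prediction and then picks up the newest result. This is a sketch under assumptions: it assumes `predict_t2v.py` is invoked from the directory that contains it and that results are written as `.mp4` files to `samples/cogvideox-fun-videos-t2v`; `run_prediction` and `latest_video` are hypothetical helper names.

```python
import subprocess
import sys
from pathlib import Path
from typing import Optional

OUTPUT_DIR = "samples/cogvideox-fun-videos-t2v"  # output folder named in Step 3


def run_prediction(script: str = "predict_t2v.py") -> None:
    """Run the prediction script with the current interpreter; raise if it fails."""
    subprocess.run([sys.executable, script], check=True)


def latest_video(folder: str = OUTPUT_DIR) -> Optional[Path]:
    """Return the most recently modified .mp4 in `folder`, or None if there is none."""
    base = Path(folder)
    if not base.is_dir():
        return None
    videos = [p for p in base.glob("*.mp4") if p.is_file()]
    return max(videos, key=lambda p: p.stat().st_mtime, default=None)
```

After editing the generation settings, call `run_prediction()` and then `latest_video()` to locate the file that was just written.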

ii. Through UI Interface

- Step 1: Download the corresponding weights and place them in the `models` folder.
- Step 2: Run the `app.py` file and open the Gradio page.
- Step 3: Select the generation model on the page, fill in `prompt`, `neg_prompt`, `guidance_scale`, `seed`, etc., click "Generate", and wait for the result. The result will be saved in the `sample` folder.
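When `app.py` is started from a script or inside Docker, it can help to wait until the Gradio page actually responds before opening a browser. A minimal standard-library sketch; it assumes the UI listens on `http://127.0.0.1:7860` (Gradio's default port, and the port exposed by the Docker command above), and `wait_for_ui` is a hypothetical helper.

```python
import time
import urllib.request


def wait_for_ui(url: str = "http://127.0.0.1:7860",
                timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll `url` until it answers with HTTP 200 or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused, timeouts and HTTP errors (URLError and HTTPError
            # are OSError subclasses) all mean "not ready yet".
            pass
        time.sleep(interval)
    return False
```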

iii. Through ComfyUI

For details, please refer to the ComfyUI README.

2. Model Training

A complete CogVideoX-Fun training pipeline should include data preprocessing and Video DiT training.

a. Data Preprocessing

We provide a simple demo for training a LoRA model on image data. For details, please refer to the wiki.

A complete data preprocessing pipeline for long video segmentation, cleaning, and description can be found in the README of the video caption section.

If you want to train a text-to-video generation model, you need to arrange the dataset in the following format:

```
📦 project/
├── 📂 datasets/
│   └── 📂 internal_datasets/
│       ├── 📂 train/
│       │   ├── 📄 00000001.mp4
│       │   ├── 📄 00000002.jpg
│       │   └── 📄 .....
│       └── 📄 json_of_internal_datasets.json
```
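Writing this metadata file by hand does not scale, so here is a minimal sketch that walks the `train/` folder and writes `json_of_internal_datasets.json` in the format shown below. It assumes the captions already exist in a Python dictionary keyed by relative path (for example, produced by the video caption pipeline mentioned above); the extension lists and the `build_metadata` name are assumptions, not part of CogVideoX-Fun.

```python
import json
from pathlib import Path
from typing import Dict, List

# Assumed extension lists; extend them to match your data.
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv"}
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def build_metadata(
    dataset_root: str,
    captions: Dict[str, str],
    out_name: str = "json_of_internal_datasets.json",
) -> List[dict]:
    """Write a metadata file listing every captioned file under <dataset_root>/train.

    `captions` maps a relative path such as "train/00000001.mp4" to its caption.
    Files without a caption or with an unknown extension are skipped.
    """
    root = Path(dataset_root)
    entries = []
    for path in sorted((root / "train").iterdir()):
        suffix = path.suffix.lower()
        if suffix in VIDEO_EXTS:
            kind = "video"
        elif suffix in IMAGE_EXTS:
            kind = "image"
        else:
            continue
        rel = path.relative_to(root).as_posix()  # e.g. "train/00000001.mp4"
        if rel in captions:
            entries.append({"file_path": rel, "text": captions[rel], "type": kind})
    with open(root / out_name, "w", encoding="utf-8") as f:
        json.dump(entries, f, ensure_ascii=False, indent=2)
    return entries
```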

json_of_internal_datasets.json is a standard JSON file. The file_path in the JSON can be set as a relative path, as shown below:

```json
[
  {
    "file_path": "train/00000001.mp4",
    "text": "A group of young men in suits and sunglasses are walking down a city street.",
    "type": "video"
  },
  {
    "file_path": "train/00000002.jpg",
    "text": "A group of young men in suits and sunglasses are walking down a city street.",
    "type": "image"
  },
  .....
]
```
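Before training, it is worth checking that every entry has the three keys used above and points at a file that exists. A minimal sketch; the function name and the specific checks are assumptions, not the project's own loader. Relative paths are resolved against the dataset root, in the spirit of the `DATASET_NAME` setting described in the training section.

```python
import json
from pathlib import Path
from typing import List

REQUIRED_KEYS = {"file_path", "text", "type"}
ALLOWED_TYPES = {"video", "image"}


def validate_metadata(meta_path: str, dataset_root: str = "") -> List[str]:
    """Return human-readable problems; an empty list means the file looks usable.

    Relative `file_path` values are resolved against `dataset_root`;
    absolute ones are checked as-is.
    """
    with open(meta_path, encoding="utf-8") as f:
        entries = json.load(f)
    problems = []
    for i, entry in enumerate(entries):
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            problems.append(f"entry {i}: missing keys {sorted(missing)}")
            continue
        if entry["type"] not in ALLOWED_TYPES:
            problems.append(f"entry {i}: unknown type {entry['type']!r}")
        path = Path(entry["file_path"])
        if not path.is_absolute():
            path = Path(dataset_root) / path
        if not path.is_file():
            problems.append(f"entry {i}: file not found: {path}")
        if not str(entry["text"]).strip():
            problems.append(f"entry {i}: empty caption")
    return problems
```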

You can also set the path as an absolute path:

```json
[
  {
    "file_path": "/mnt/data/videos/00000001.mp4",
    "text": "A group of young men in suits and sunglasses are walking down a city street.",
    "type": "video"
  },
  {
    "file_path": "/mnt/data/train/00000001.jpg",
    "text": "A group of young men in suits and sunglasses are walking down a city street.",
    "type": "image"
  },
  .....
]
```
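If your metadata currently uses relative paths and you want to switch to the absolute-path form (for example, because the files live outside the project), the conversion is mechanical. A hypothetical helper sketch; after converting, the training settings for absolute paths apply.

```python
import json
from pathlib import Path
from typing import List


def make_paths_absolute(meta_in: str, dataset_root: str, meta_out: str) -> List[dict]:
    """Copy a metadata file, rewriting relative `file_path` values as absolute paths."""
    root = Path(dataset_root).resolve()
    with open(meta_in, encoding="utf-8") as f:
        entries = json.load(f)
    for entry in entries:
        path = Path(entry["file_path"])
        if not path.is_absolute():  # already-absolute entries are left untouched
            entry["file_path"] = (root / path).as_posix()
    with open(meta_out, "w", encoding="utf-8") as f:
        json.dump(entries, f, ensure_ascii=False, indent=2)
    return entries
```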

b. Video DiT Training

If you used relative paths during data preprocessing, open `scripts/train.sh` and set the following:

```shell
export DATASET_NAME="datasets/internal_datasets/"
export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

...

train_data_format="normal"
```

If you used absolute paths, open `scripts/train.sh` and set the following:

```shell
export DATASET_NAME=""
export DATASET_META_NAME="/mnt/data/json_of_internal_datasets.json"
```
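Which of the two configurations applies depends only on whether the `file_path` values are relative or absolute, so the choice can be read off the metadata file itself. A hypothetical helper that returns suggested values for the two exported variables; it does not edit `scripts/train.sh`, other settings such as `train_data_format` still have to be set by hand, and it deliberately rejects files that mix both styles.

```python
import json
from pathlib import Path
from typing import Dict


def suggest_train_settings(meta_path: str, dataset_root: str = "") -> Dict[str, str]:
    """Suggest DATASET_NAME / DATASET_META_NAME values for scripts/train.sh."""
    with open(meta_path, encoding="utf-8") as f:
        entries = json.load(f)
    if not entries:
        raise ValueError("metadata file has no entries")
    absolute = {Path(e["file_path"]).is_absolute() for e in entries}
    if absolute == {True}:
        # Absolute paths: the example settings leave DATASET_NAME empty.
        return {"DATASET_NAME": "", "DATASET_META_NAME": meta_path}
    if absolute == {False}:
        if not dataset_root:
            raise ValueError("relative paths need dataset_root")
        # Assumption: relative paths are resolved against this folder, written
        # with a trailing slash as in the example settings.
        return {"DATASET_NAME": dataset_root.rstrip("/") + "/", "DATASET_META_NAME": meta_path}
    raise ValueError("mix of relative and absolute file_path values; pick one style")
```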

Finally, run `scripts/train.sh`:

```shell
sh scripts/train.sh
```

For details on the other parameter settings, please refer to Readme Train and Readme Lora.

📚 Documentation

Video Works

All the displayed results are obtained from image-to-video generation.

Example videos: CogVideoX-Fun-5B at resolutions 1024, 768, and 512; CogVideoX-Fun-2B at resolution 768.

Model Address

| Name | Storage Space | Hugging Face | ModelScope | Description |
|---|---|---|---|---|
| CogVideoX-Fun-2b-InP.tar.gz | 9.7 GB before decompression / 13.0 GB after decompression | 🤗Link | 😄Link | Official image-to-video weights. Supports video prediction at multiple resolutions (512, 768, 1024, 1280), trained with 49 frames at 8 fps. |
| CogVideoX-Fun-5b-InP.tar.gz | 16.0 GB before decompression / 20.0 GB after decompression | 🤗Link | 😄Link | Official image-to-video weights. Supports video prediction at multiple resolutions (512, 768, 1024, 1280), trained with 49 frames at 8 fps. |

Future Plans

- Support for Chinese.

References

- CogVideo: https://github.com/THUDM/CogVideo/

- EasyAnimate: https://github.com/aigc-apps/EasyAnimate

📄 License

This project is licensed under the Apache License (Version 2.0).

The CogVideoX-2B model (including its Transformer module and VAE module) is released under the Apache 2.0 License.

The CogVideoX-5B model (Transformer module) is released under the CogVideoX License.
