🚀 Model description: deberta-v3-large-zeroshot-v2.0

This project focuses on the deberta-v3-large-zeroshot-v2.0 model, which belongs to the zeroshot-v2.0 series. These models are designed for efficient zero-shot classification using the Hugging Face pipeline, capable of performing classification tasks without the need for training data and running on both GPUs and CPUs.

✨ Features

Zeroshot-v2.0 Series Models

- Universal Classification: These models can handle a universal classification task of determining whether a hypothesis is "true" or "not true" given a text (entailment vs. not_entailment). This task format is based on the Natural Language Inference task (NLI), and any classification task can be reformulated into this task by the Hugging Face pipeline.

- Commercially-Friendly Data: Some models in the zeroshot-v2.0 series are trained on fully commercially-friendly data, meeting the strict license requirements of users.
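To make the reformulation concrete, the sketch below shows how one classification input can be expanded into one premise/hypothesis pair per candidate class. It is a minimal illustration, not the pipeline's actual implementation: the helper name `build_nli_pairs` is hypothetical, and the template string is the one used in the usage example of this README.

```python
def build_nli_pairs(text, labels, hypothesis_template="This text is about {}"):
    """Turn one classification input into (premise, hypothesis) pairs, one per label.

    Hypothetical helper for illustration only; the real pipeline does this internally.
    """
    return [(text, hypothesis_template.format(label)) for label in labels]


pairs = build_nli_pairs(
    "Angela Merkel is a politician in Germany and leader of the CDU",
    ["politics", "economy"],
)

# Each pair is then judged by the model as entailment vs. not_entailment.
for premise, hypothesis in pairs:
    print(f"premise={premise!r} hypothesis={hypothesis!r}")
```

The model never sees the class names as output labels. It only judges whether each generated hypothesis follows from the text, which is why the wording of the template and of the class names can affect results.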

Training Data

- Models with "-c": Trained on two types of fully commercially-friendly data:

- Models without "-c": Trained on a broader mix of data under a wider range of licenses, including ANLI, WANLI, LingNLI, and the datasets in this list where used_in_v1.1==True.

📦 Installation

The model can be used with the Hugging Face transformers library, which you can install with pip:

    pip install transformers[sentencepiece]

💻 Usage Examples

Basic Usage

    from transformers import pipeline

    # Input text, hypothesis template, and the candidate classes to fill into the template
    text = "Angela Merkel is a politician in Germany and leader of the CDU"
    hypothesis_template = "This text is about {}"
    classes_verbalized = ["politics", "economy", "entertainment", "environment"]

    # Load the zero-shot classification pipeline with this model
    zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-large-zeroshot-v2.0")

    # Classify the text against all candidate classes
    output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)
    print(output)

multi_label=False forces the model to decide on only one class, while multi_label=True enables the model to choose multiple classes.
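The difference between the two modes can be sketched with plain arithmetic. This is a simplified illustration of how the two settings are commonly understood to score classes, not the pipeline's actual code: the logits are made-up numbers rather than real model output, and the function names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits per candidate class: (entailment, not_entailment).
# These numbers are invented for illustration.
logits = {
    "politics": (4.0, -3.0),
    "economy": (1.0, 0.5),
    "entertainment": (-2.0, 3.0),
}


def score_single_label(logits):
    """multi_label=False: softmax over the entailment logits across classes (scores sum to 1)."""
    labels = list(logits)
    probs = softmax([logits[label][0] for label in labels])
    return dict(zip(labels, probs))


def score_multi_label(logits):
    """multi_label=True: each class is scored on its own, entailment vs. not_entailment."""
    return {label: softmax([ent, not_ent])[0] for label, (ent, not_ent) in logits.items()}


single = score_single_label(logits)
multi = score_multi_label(logits)
print(single)
print(multi)
```

In the single-label case the classes compete for one probability mass, so a second plausible class is pushed down. In the multi-label case each score is independent, so several classes can score above 0.5 at once.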

📚 Documentation

Metrics

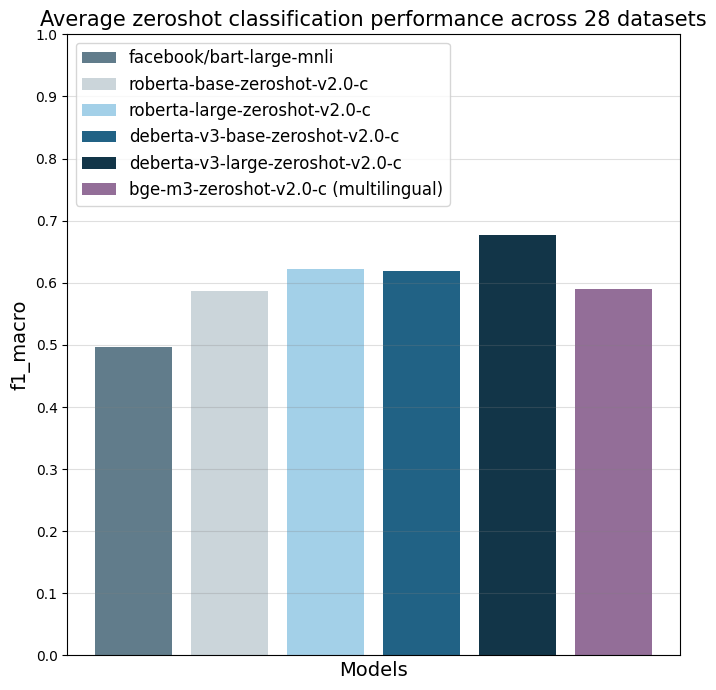

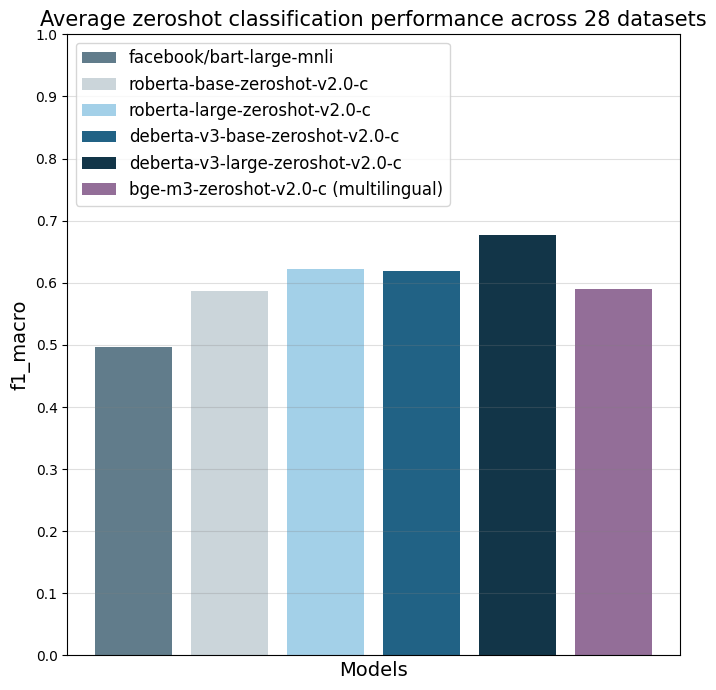

The models were evaluated on 28 different text classification tasks using the f1_macro metric. The main reference point is facebook/bart-large-mnli, which is, at the time of writing (03.04.24), the most used commercially-friendly 0-shot classifier.
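For readers unfamiliar with f1_macro: it is the unweighted mean of the per-class F1 scores, so rare classes count as much as frequent ones. The sketch below computes it in plain Python on a toy example. The function and the sample labels are illustrative assumptions, not the evaluation code behind the reported numbers.

```python
def f1_macro(y_true, y_pred):
    """Unweighted mean of per-class F1 over all classes seen in y_true or y_pred."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for cls in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never occurs
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels, invented for illustration
y_true = ["pos", "pos", "neg", "neg"]
y_pred = ["pos", "neg", "neg", "neg"]
macro = f1_macro(y_true, y_pred)
print(round(macro, 3))
```

Here the "pos" class has F1 = 2/3 and the "neg" class has F1 = 0.8, and the macro score is their simple average.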

| Property | Details |
|---|---|
| Model Type | deberta-v3-large-zeroshot-v2.0 |
| Training Data | See the "Training Data" section above |

| Dataset | facebook/bart-large-mnli | roberta-base-zeroshot-v2.0-c | roberta-large-zeroshot-v2.0-c | deberta-v3-base-zeroshot-v2.0-c | deberta-v3-base-zeroshot-v2.0 (fewshot) | deberta-v3-large-zeroshot-v2.0-c | deberta-v3-large-zeroshot-v2.0 (fewshot) | bge-m3-zeroshot-v2.0-c | bge-m3-zeroshot-v2.0 (fewshot) |
|---|---|---|---|---|---|---|---|---|---|
| all datasets mean | 0.497 | 0.587 | 0.622 | 0.619 | 0.643 (0.834) | 0.676 | 0.673 (0.846) | 0.59 | (0.803) |
| amazonpolarity (2) | 0.937 | 0.924 | 0.951 | 0.937 | 0.943 (0.961) | 0.952 | 0.956 (0.968) | 0.942 | (0.951) |
| imdb (2) | 0.892 | 0.871 | 0.904 | 0.893 | 0.899 (0.936) | 0.923 | 0.918 (0.958) | 0.873 | (0.917) |
| appreviews (2) | 0.934 | 0.913 | 0.937 | 0.938 | 0.945 (0.948) | 0.943 | 0.949 (0.962) | 0.932 | (0.954) |
| yelpreviews (2) | 0.948 | 0.953 | 0.977 | 0.979 | 0.975 (0.989) | 0.988 | 0.985 (0.994) | 0.973 | (0.978) |
| rottentomatoes (2) | 0.83 | 0.802 | 0.841 | 0.84 | 0.86 (0.902) | 0.869 | 0.868 (0.908) | 0.813 | (0.866) |
| emotiondair (6) | 0.455 | 0.482 | 0.486 | 0.459 | 0.495 (0.748) | 0.499 | 0.484 (0.688) | 0.453 | (0.697) |
| emocontext (4) | 0.497 | 0.555 | 0.63 | 0.59 | 0.592 (0.799) | 0.699 | 0.676 (0.81) | 0.61 | (0.798) |
| empathetic (32) | 0.371 | 0.374 | 0.404 | 0.378 | 0.405 (0.53) | 0.447 | 0.478 (0.555) | 0.387 | (0.455) |
| financialphrasebank (3) | 0.465 | 0.562 | 0.455 | 0.714 | 0.669 (0.906) | 0.691 | 0.582 (0.913) | 0.504 | (0.895) |
| banking77 (72) | 0.312 | 0.124 | 0.29 | 0.421 | 0.446 (0.751) | 0.513 | 0.567 (0.766) | 0.387 | (0.715) |
| massive (59) | 0.43 | 0.428 | 0.543 | 0.512 | 0.52 (0.755) | 0.526 | 0.518 (0.789) | 0.414 | (0.692) |
| wikitoxic_toxicaggreg (2) | 0.547 | 0.751 | 0.766 | 0.751 | 0.769 (0.904) | 0.741 | 0.787 (0.911) | 0.736 | (0.9) |
| wikitoxic_obscene (2) | 0.713 | 0.817 | 0.854 | 0.853 | 0.869 (0.922) | 0.883 | 0.893 (0.933) | 0.783 | (0.914) |
| wikitoxic_threat (2) | 0.295 | 0.71 | 0.817 | 0.813 | 0.87 (0.946) | 0.827 | 0.879 (0.952) | 0.68 | (0.947) |
| wikitoxic_insult (2) | 0.372 | 0.724 | 0.798 | 0.759 | 0.811 (0.912) | 0.77 | 0.779 (0.924) | 0.783 | (0.915) |
| wikitoxic_identityhate (2) | 0.473 | 0.774 | 0.798 | 0.774 | 0.765 (0.938) | 0.797 | 0.806 (0.948) | 0.761 | (0.931) |
| hateoffensive (3) | 0.161 | 0.352 | 0.29 | 0.315 | 0.371 (0.862) | 0.47 | 0.461 (0.847) | 0.291 | (0.823) |
| hatexplain (3) | 0.239 | 0.396 | 0.314 | 0.376 | 0.369 (0.765) | 0.378 | 0.389 (0.764) | 0.29 | (0.729) |
| biasframes_offensive (2) | 0.336 | 0.571 | 0.583 | 0.544 | 0.601 (0.867) | 0.644 | 0.656 (0.883) | 0.541 | (0.855) |
| biasframes_sex (2) | 0.263 | 0.617 | 0.835 | 0.741 | 0.809 (0.922) | 0.846 | 0.815 (0.946) | 0.748 | (0.905) |
| biasframes_intent (2) | 0.616 | 0.531 | 0.635 | 0.554 | 0.61 (0.881) | 0.696 | 0.687 (0.891) | 0.467 | (0.868) |
| agnews (4) | 0.703 | 0.758 | 0.745 | 0.68 | 0.742 (0.898) | 0.819 | 0.771 (0.898) | 0.687 | (0.892) |
| yahootopics (10) | 0.299 | 0.543 | 0.62 | 0.578 | 0.564 (0.722) | 0.621 | 0.613 (0.738) | 0.587 | (0.711) |
| trueteacher (2) | ... | ... | ... | ... | ... | ... | ... | ... | ... |

📄 License

This project is licensed under the MIT license.