LLaMA-1B-dj-refine-150B

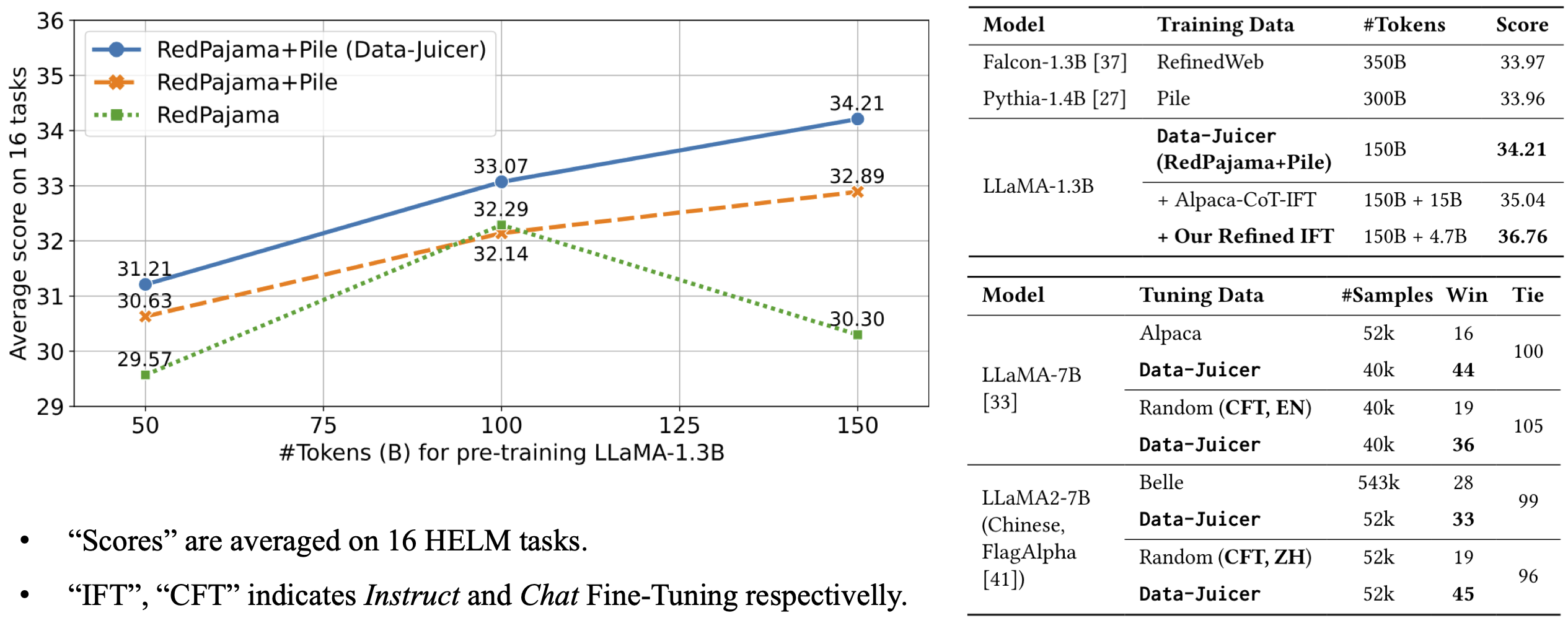

This large language model is based on the OpenLLaMA architecture and pre-trained on Data-Juicer-refined versions of the RedPajama and Pile datasets. According to its HELM results, it outperforms other models at the same 1.3B-parameter scale.


Release date: 10/30/2023

Model Overview

This is a reference large language model released by the Data-Juicer project. It uses a 1.3B-parameter LLaMA architecture, is trained on Data-Juicer-refined data, and can be applied to a range of natural language processing tasks.

Model Features

High-quality training data

Trained on RedPajama and Pile data refined with Data-Juicer, which is of higher quality than the original, unrefined datasets.

Efficient training

Achieves strong results after training on only 150 billion tokens, indicating higher training efficiency than similar models.

Superior performance

Achieves an average score of 34.21 across 16 HELM benchmarks, surpassing comparable models such as Falcon-1.3B and Pythia-1.4B.

Model Capabilities

Text generation

Language understanding

Knowledge Q&A

Text summarization
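For the text generation use above, a minimal sketch with the Hugging Face `transformers` library might look like the following. It assumes the checkpoint is published on the Hub as `datajuicer/LLaMA-1B-dj-refine-150B` (an assumed repo id; confirm it against the official Data-Juicer release) and loads as a standard LLaMA causal LM. The `use_fast=False` choice follows the OpenLLaMA project's advice to prefer the slow SentencePiece tokenizer and is also an assumption here. Because this card describes a pre-trained model rather than an instruction-tuned one, prompts are likely to work best as the opening of the text you want continued. `load_model` and `generate` are hypothetical helper names.

```python
# Minimal text-generation sketch. The repo id below is an ASSUMPTION, not confirmed by this card.
MODEL_ID = "datajuicer/LLaMA-1B-dj-refine-150B"


def load_model(model_id: str = MODEL_ID):
    """Load the tokenizer and causal-LM weights (downloaded from the Hub on first use)."""
    # Imported lazily so this module can be imported without transformers/torch installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: like OpenLLaMA, this checkpoint works best with the slow SentencePiece tokenizer.
    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    model.eval()  # inference mode (disables dropout)
    return tokenizer, model


def generate(prompt: str, tokenizer, model, max_new_tokens: int = 64) -> str:
    """Greedily continue `prompt`; the returned string includes the prompt itself."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Typical usage is `tok, lm = load_model()` followed by `generate("Clean training data matters because", tok, lm)`. Greedy decoding (`do_sample=False`) keeps output reproducible; pass sampling parameters to `model.generate` for more varied text.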

Use Cases

Research applications

Language model benchmarking

Serves as a reference model when evaluating and comparing the performance of different language models.

Performs well on HELM benchmarks.

Commercial applications

Intelligent customer service

Can be used to build English-language intelligent customer service systems.
