🚀 WizardLM-2

We introduce and open-source WizardLM-2, the next generation of state-of-the-art large language models, excelling in complex chat, multilingual, reasoning, and agent tasks.

🚀 Quick Start

This README provides an overview of WizardLM-2, including news, model details, capacities, method overview, and usage instructions. For more information, please refer to the release blog post and the upcoming paper.

✨ Features

- Next Generation Models: WizardLM-2 represents a significant advancement in large language models, with improved performance in complex chat, multilingual, reasoning, and agent tasks.

- Three Cutting-Edge Models: The new family includes WizardLM-2 8x22B, WizardLM-2 70B, and WizardLM-2 7B, each with unique capabilities and performance characteristics.

- Highly Competitive Performance: WizardLM-2 8x22B demonstrates highly competitive performance compared to leading proprietary models and outperforms existing state-of-the-art open-source models.

- Top-Tier Reasoning Capabilities: WizardLM-2 70B reaches top-tier reasoning capabilities and is the top choice among models of its size.

- Fast and Comparable Performance: WizardLM-2 7B is the fastest of the three and achieves performance comparable to leading open-source models 10x its size.

📦 Installation

No specific installation steps are provided.

💻 Usage Examples

Basic Usage

WizardLM-2 uses the Vicuna prompt format. A multi-turn conversation looks like this:

    A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful,
    detailed, and polite answers to the user's questions. USER: Hi ASSISTANT: Hello.</s>
    USER: Who are you? ASSISTANT: I am WizardLM.</s>......
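Assembling this prompt by hand is error-prone in longer conversations. The sketch below builds it from a list of earlier turns. `SYSTEM_PROMPT` and `build_prompt` are hypothetical names, not part of any official WizardLM-2 tooling, and the exact whitespace is an assumption: it follows the Vicuna v1.1 convention used by lmsys's FastChat (a space after the system prompt and after each user turn, nothing after `</s>`), whereas the template above shows a line break after `</s>`.

```python
# Hypothetical helper, not official WizardLM-2 code.
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_prompt(history, user_message, system=SYSTEM_PROMPT):
    """Assemble a Vicuna-style multi-turn prompt.

    history: list of (user, assistant) pairs from earlier, completed turns.
    user_message: the new user turn. The prompt ends with "ASSISTANT:" so the
    model continues with its reply.
    """
    # Assumed separator: a single space after the system prompt.
    prompt = system + " "
    for user, assistant in history:
        # Each completed assistant turn is closed with the </s> marker.
        prompt += f"USER: {user} ASSISTANT: {assistant}</s>"
    # Open the next assistant turn without closing it.
    prompt += f"USER: {user_message} ASSISTANT:"
    return prompt


if __name__ == "__main__":
    print(build_prompt([("Hi", "Hello.")], "Who are you?"))
```

Keeping the history as (user, assistant) pairs means each model reply can be appended once generation finishes, and the next call rebuilds the full prompt.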

Advanced Usage

For more advanced usage, please refer to the WizardLM-2 inference demo code on our GitHub.

📚 Documentation

News 🔥🔥🔥 [2024/04/15]

We introduce and open-source WizardLM-2, our next-generation state-of-the-art large language models, with improved performance on complex chat, multilingual, reasoning, and agent tasks. The new family includes three cutting-edge models: WizardLM-2 8x22B, WizardLM-2 70B, and WizardLM-2 7B.

- WizardLM-2 8x22B is our most advanced model, demonstrating highly competitive performance compared to leading proprietary models and consistently outperforming all existing state-of-the-art open-source models.

- WizardLM-2 70B reaches top-tier reasoning capabilities and is the top choice among models of its size.

- WizardLM-2 7B is the fastest of the three and achieves performance comparable to leading open-source models 10x its size.

For more details on WizardLM-2, please read our release blog post and the upcoming paper.

Model Details

Model Capacities

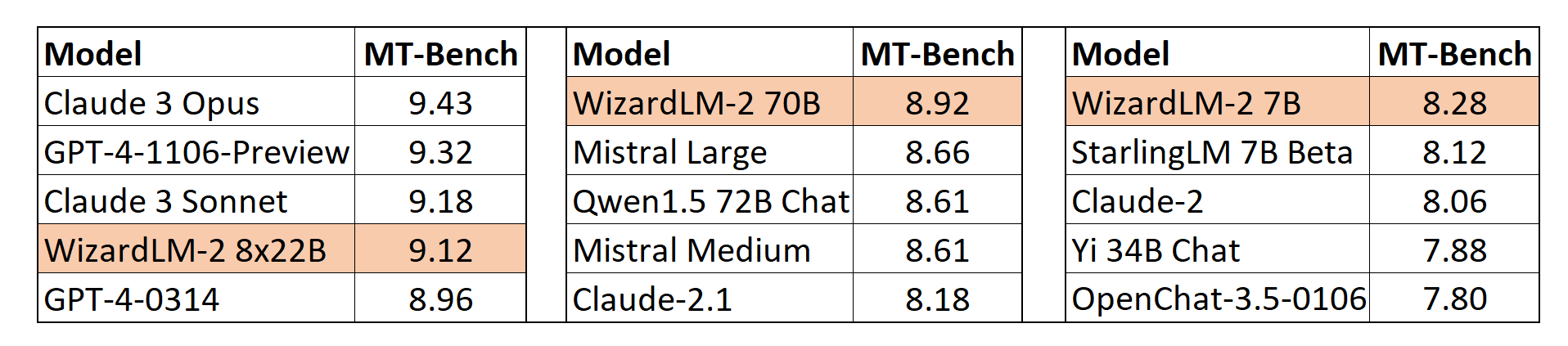

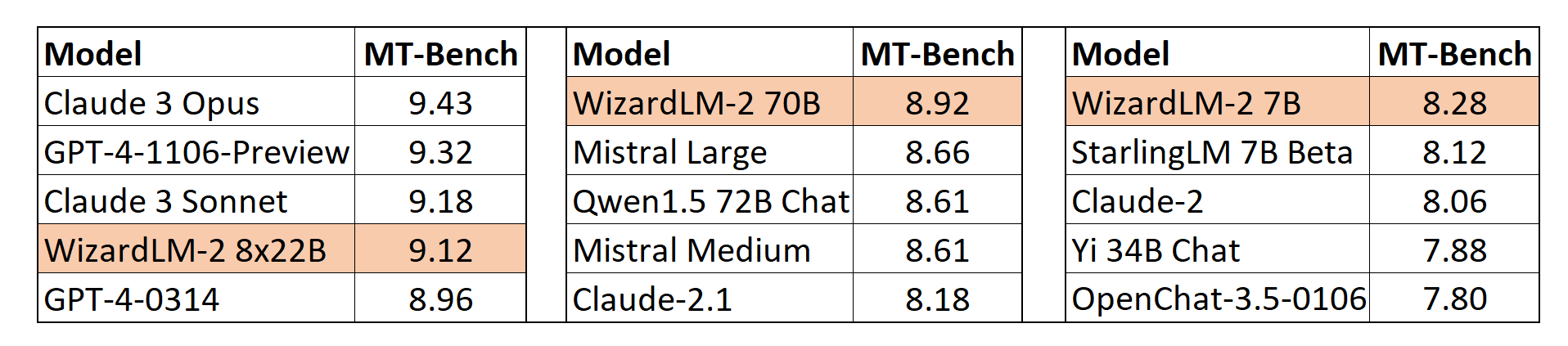

MT-Bench

We adopt the automatic, GPT-4-based MT-Bench evaluation framework proposed by LMSYS to assess model performance. WizardLM-2 8x22B demonstrates highly competitive performance compared to the most advanced proprietary models. Meanwhile, WizardLM-2 7B and WizardLM-2 70B are both top-performing models among the leading baselines at the 7B to 70B scales.
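The README does not detail the scoring pipeline. As a rough sketch of how single-answer MT-Bench grades can be aggregated, the code below assumes that the GPT-4 judge ends each verdict with a 1 to 10 rating written as `[[rating]]` (the format requested by lmsys's FastChat judge prompts, as we understand them) and that the reported score is the mean rating over all graded turns. `parse_rating` and `mt_bench_score` are hypothetical helpers, not FastChat functions.

```python
import re
from statistics import mean

# Assumption: the judge writes its verdict as e.g. "Rating: [[7]]".
RATING_PATTERN = re.compile(r"\[\[(\d+\.?\d*)\]\]")


def parse_rating(judgment):
    """Return the rating found in a judge's text, or None if it is missing."""
    match = RATING_PATTERN.search(judgment)
    return float(match.group(1)) if match else None


def mt_bench_score(judgments):
    """Average the parsed ratings, skipping judgments with no valid rating."""
    ratings = [r for r in map(parse_rating, judgments) if r is not None]
    return mean(ratings) if ratings else None


if __name__ == "__main__":
    # Toy judge outputs for illustration only; these are not real results.
    toy = ["Accurate and complete. Rating: [[8]]", "Rating: [[6.5]]", "unparseable"]
    print(mt_bench_score(toy))
```

Skipping unparseable verdicts rather than scoring them as zero keeps a formatting failure by the judge from being counted against the model.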

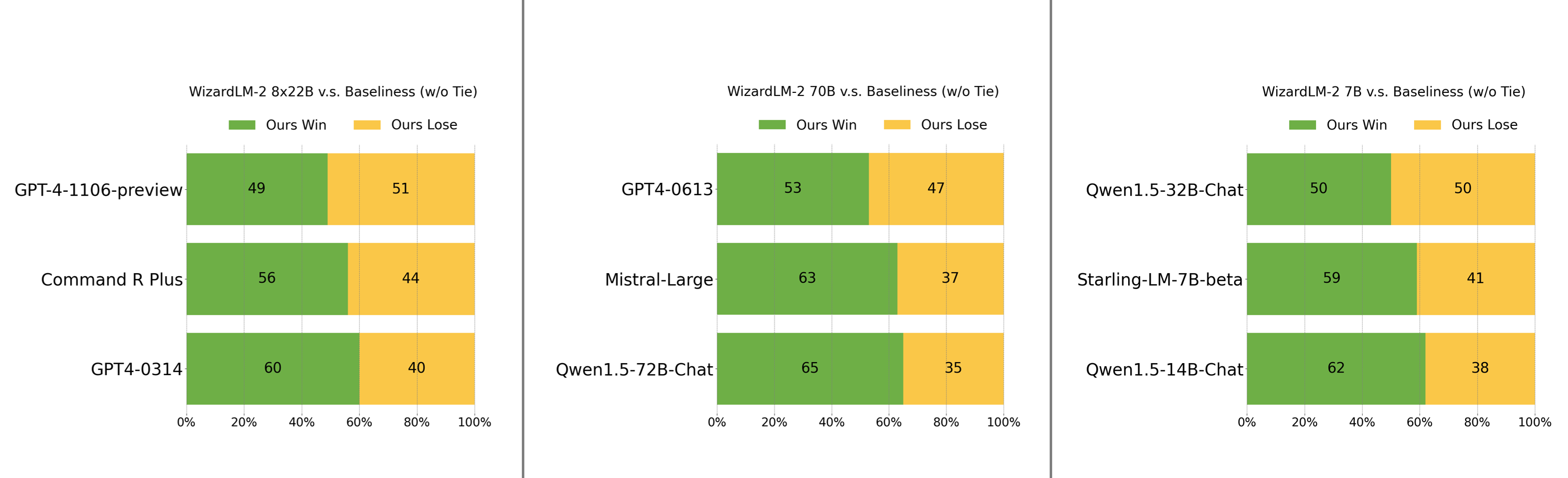

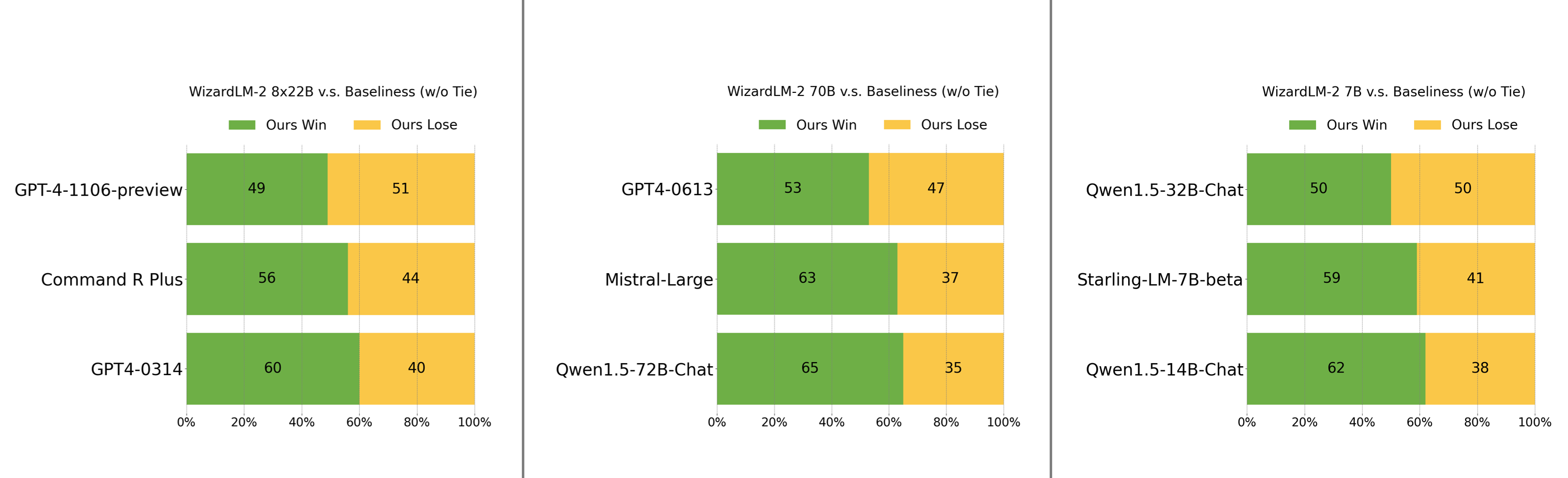

Human Preferences Evaluation

We carefully curated a complex and challenging set of real-world instructions covering the main categories of human requests, such as writing, coding, math, reasoning, agent, and multilingual tasks. We report win:loss rates, excluding ties:

- WizardLM-2 8x22B falls only slightly behind GPT-4-1106-preview and is significantly stronger than Command R Plus and GPT4-0314.

- WizardLM-2 70B is better than GPT4-0613, Mistral-Large, and Qwen1.5-72B-Chat.

- WizardLM-2 7B is comparable to Qwen1.5-32B-Chat and surpasses Qwen1.5-14B-Chat and Starling-LM-7B-beta.
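Reporting win:loss "without tie" presumably means that tied comparisons are dropped and the remaining decisive comparisons are split into win and loss shares; the README does not state the formula, so treat that reading as an assumption. A minimal sketch with a hypothetical `win_loss_excluding_ties` helper:

```python
from collections import Counter


def win_loss_excluding_ties(outcomes):
    """Return (win_rate, loss_rate) over decisive comparisons only.

    outcomes: iterable of "win", "loss", or "tie" labels, one per
    pairwise comparison. Ties are counted but excluded, so the two
    rates always sum to 1.
    """
    counts = Counter(outcomes)
    decisive = counts["win"] + counts["loss"]
    if decisive == 0:
        raise ValueError("no decisive comparisons to score")
    return counts["win"] / decisive, counts["loss"] / decisive


if __name__ == "__main__":
    # Hypothetical labels for illustration; not the actual evaluation data.
    labels = ["win"] * 3 + ["loss"] + ["tie"] * 4
    print(win_loss_excluding_ties(labels))
```

Because ties are removed from the denominator, two models with many ties can show a lopsided win:loss split even when most comparisons were draws; the tie count is worth reporting alongside.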

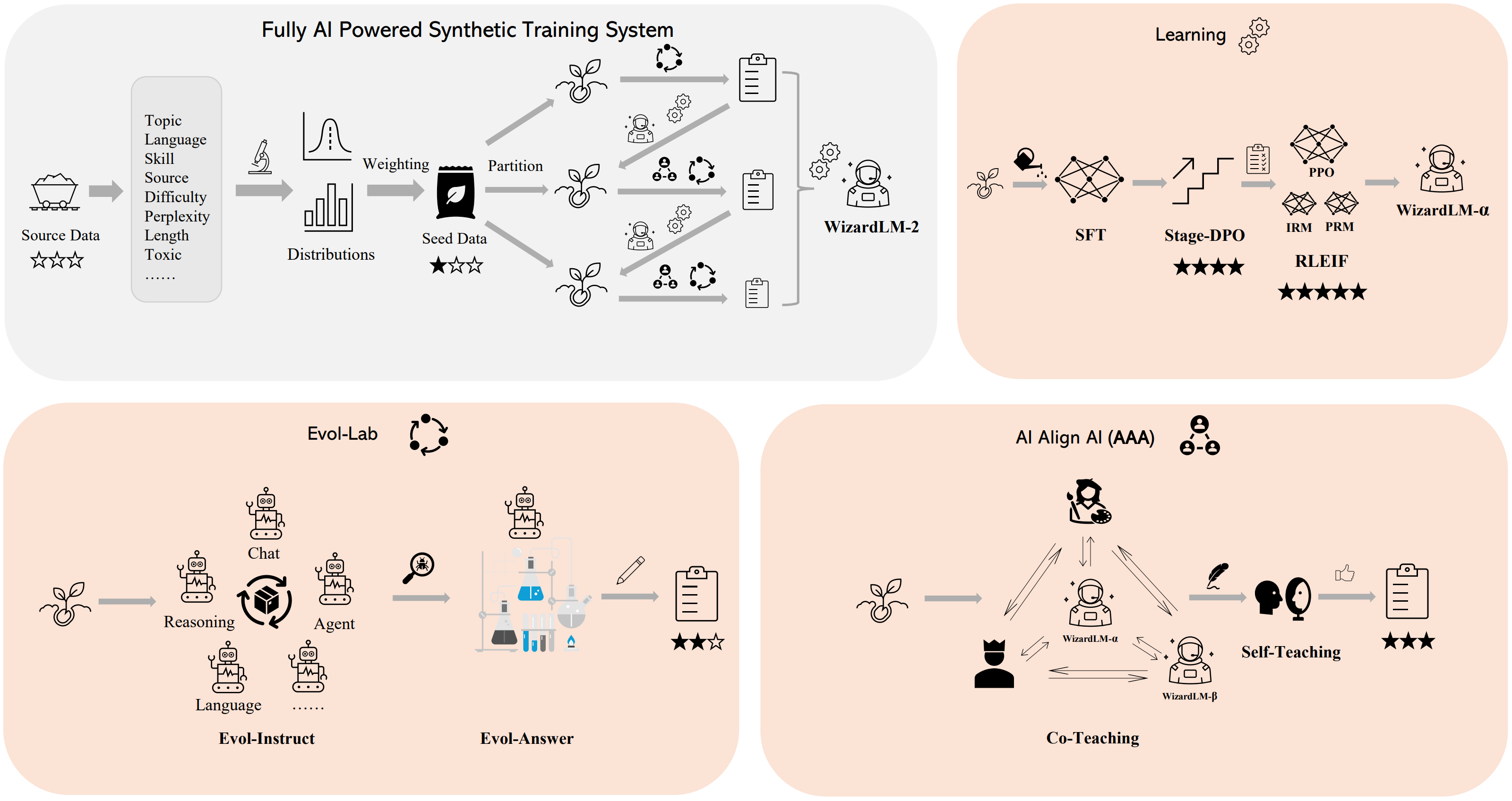

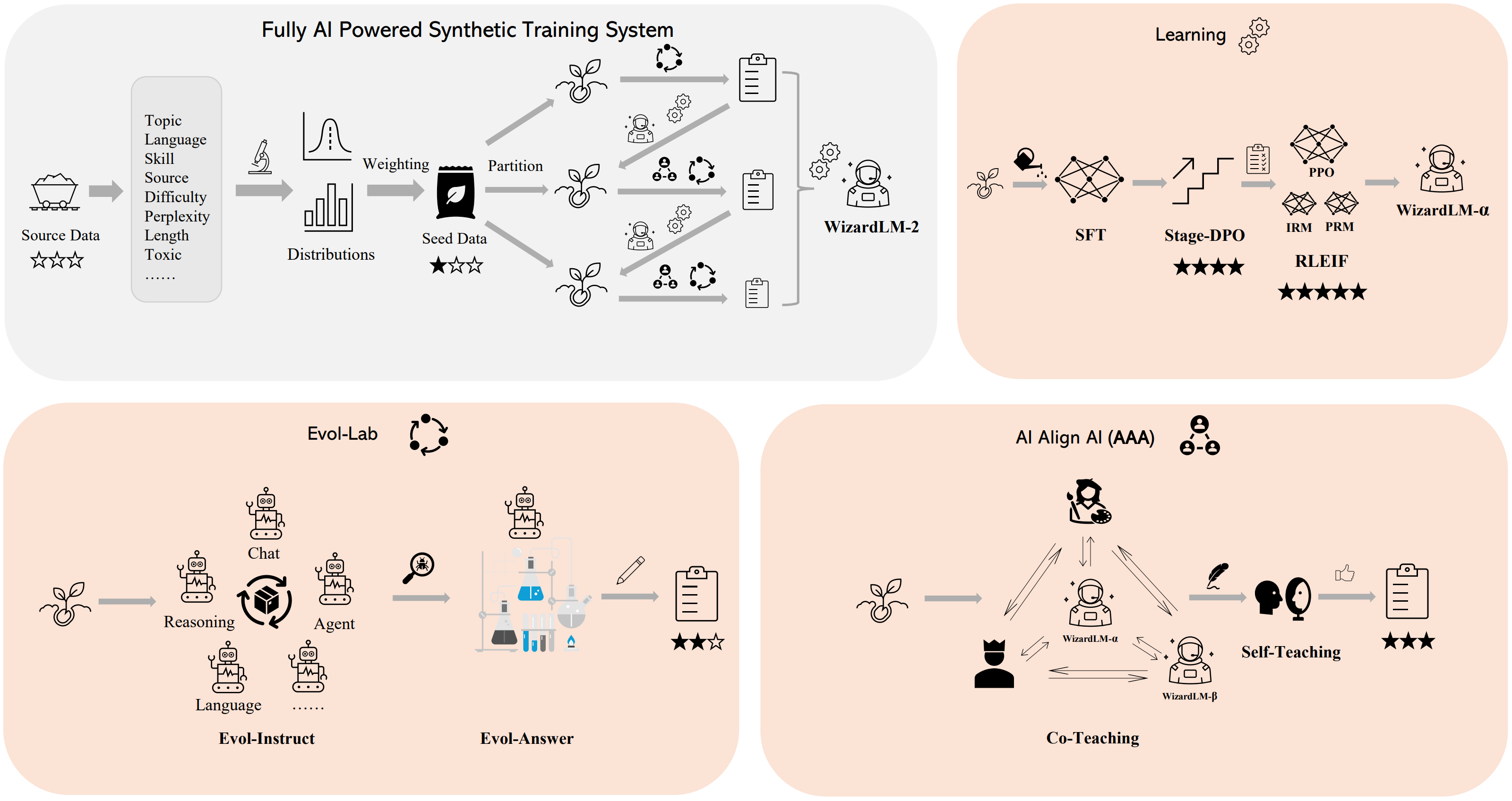

Method Overview

We built a fully AI-powered synthetic training system to train WizardLM-2 models. For more details on this system, please refer to our blog.

Usage

⚠️ Important Note

Note on system prompt usage: WizardLM-2 adopts the Vicuna prompt format and supports multi-turn conversation. The prompt should be formatted as follows:

    A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful,
    detailed, and polite answers to the user's questions. USER: Hi ASSISTANT: Hello.</s>
    USER: Who are you? ASSISTANT: I am WizardLM.</s>......
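Because each assistant turn in this format ends with `</s>`, raw generated text may need trimming before it is displayed or appended to the conversation history. A minimal sketch, assuming the input is the text the model produced after the final `ASSISTANT:` and that the model may either emit a literal `</s>` or run on into a new `USER:` turn; `extract_reply` is a hypothetical helper, not part of the official demo code.

```python
# Hypothetical post-processing helper, not official WizardLM-2 code.
STOP_MARKERS = ("</s>", "USER:")


def extract_reply(generated):
    """Trim a raw continuation down to the assistant's reply."""
    for stop in STOP_MARKERS:
        idx = generated.find(stop)
        if idx != -1:
            # Keep only the text before the stop marker.
            generated = generated[:idx]
    return generated.strip()


if __name__ == "__main__":
    print(extract_reply(" I am WizardLM.</s>"))
```

Many tokenizers can drop special tokens such as `</s>` while decoding, in which case only the `USER:` check matters; keeping both markers makes the helper safe either way.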

We provide WizardLM-2 inference demo code on our GitHub.

📄 License

This project is licensed under the Apache 2.0 license.