🚀 Cat-llama3-instruct

Cat-llama3-instruct is a fine-tuned Llama 3 8B model that focuses on system prompt fidelity, helpfulness, and character engagement. It aims to strictly follow system prompts, offer useful information in all situations, and provide maximum character immersion in the scenarios it is given.

📄 License

The model uses the Llama 3 license.

✨ Features

- System Instruction Fidelity: The model closely follows system instructions, keeping its responses accurate and relevant.

- Chain of Thought (COT): It can perform COT reasoning to solve complex tasks and to extend and enrich its answers.

- Character Immersion: It provides maximum character immersion in role-play scenarios.

- Helpfulness for Biosciences and General Science: It offers useful information in the fields of biosciences and general science.

📚 Documentation

About the Model

Cat-llama3-instruct is a fine-tuned Llama 3 8B model. It is presented by:

- Dataset Builder: Dr. Kal'tsit (Kat)

- Trainer/Funding: SteelSkull

- Facilitator: Potatooff

There is also a 70B variant of the model (trained by Dr. Kal'tsit, posted by Turboderp).

Methods

Dataset Preparation

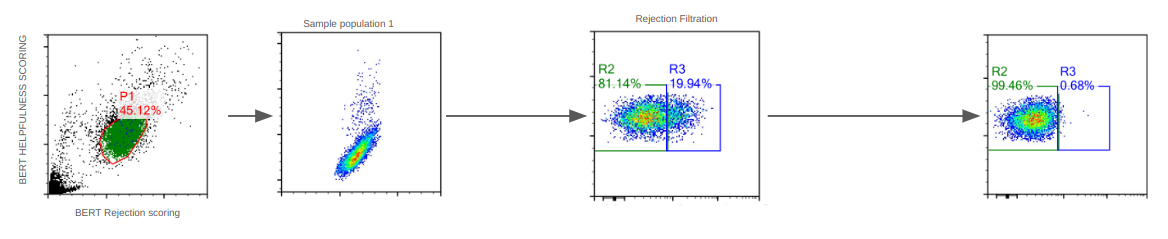

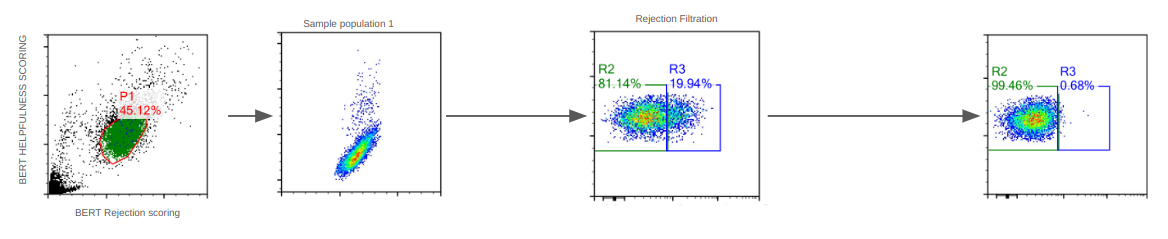

A Huggingface dataset containing instruction-response pairs was systematically pulled. A GPT model was trained on high-quality, helpful responses to serve as a standard model.

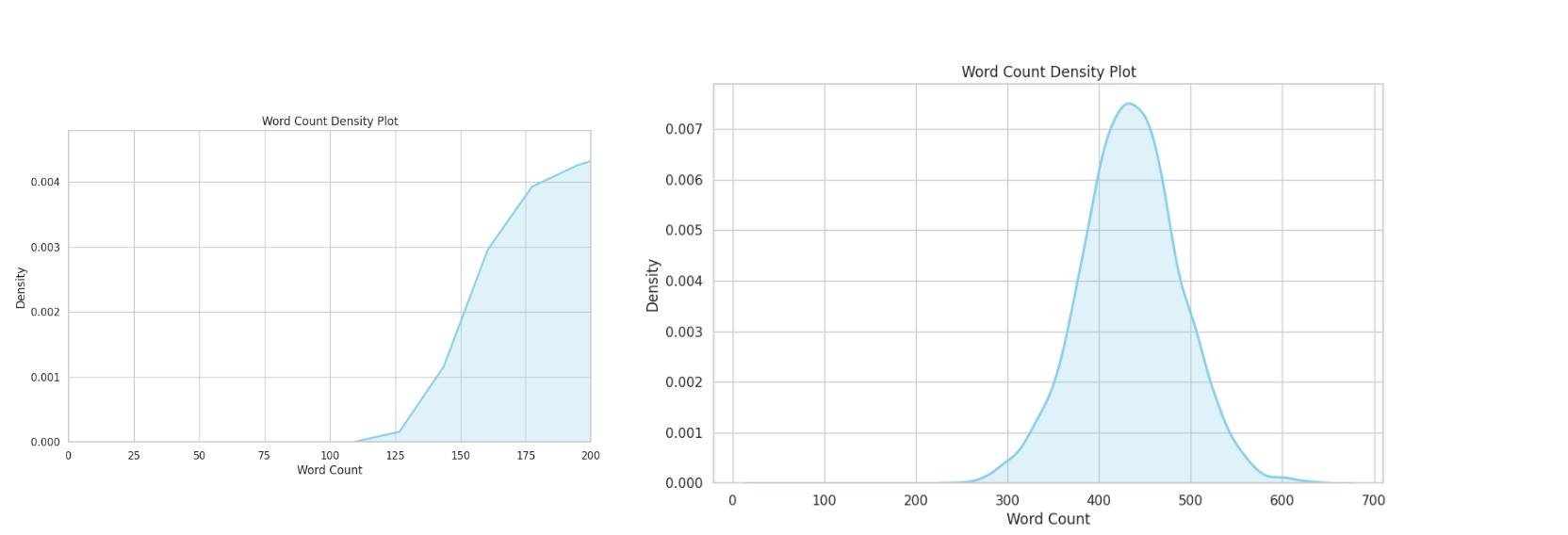

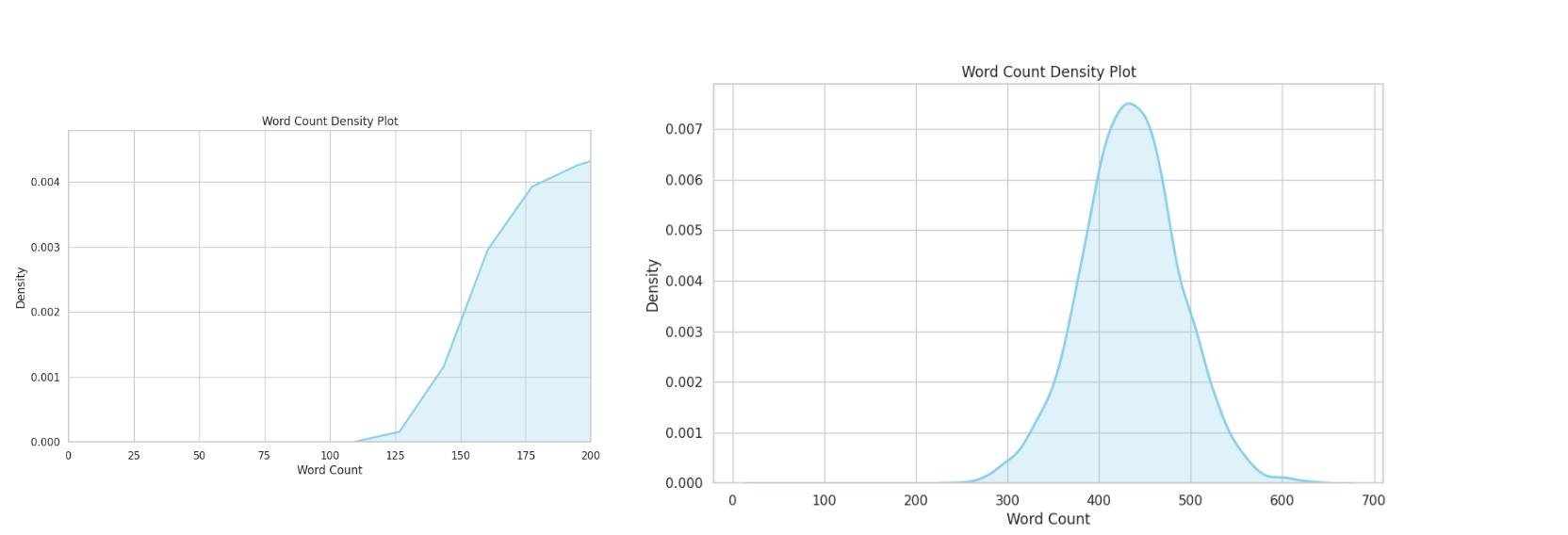

The dataset was further filtered by response length and for COT responses. Health-related data was also pulled from Chat Doctor, favoring detailed, step-by-step diagnoses.

(Fig1. Huggingface dataset population distribution and filtration for each component)

(Fig 2. COT responses are all >50 tokens single turn)

(Fig 3. Health related tasks are all >100 tokens, peaking at 450 tokens single turn)
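The length thresholds in the figure captions above (COT responses >50 tokens, health responses >100 tokens, single turn) can be expressed as a simple filter. This is a minimal sketch, not the authors' pipeline: the record layout (`category`, `turns`, `response`) and the category names `"cot"` and `"health"` are hypothetical, and tokens are approximated by whitespace splitting rather than counted with the Llama 3 tokenizer.

```python
from dataclasses import dataclass

# Minimum response length (in tokens) per category, taken from the figure captions.
# The category keys are hypothetical labels.
MIN_TOKENS = {"cot": 50, "health": 100}


@dataclass
class Sample:
    # Hypothetical record layout; the real dataset schema is not documented here.
    category: str
    turns: int
    response: str


def approx_token_count(text: str) -> int:
    # Assumption: whitespace-separated words stand in for real tokenizer tokens.
    return len(text.split())


def keep(sample: Sample) -> bool:
    """Keep single-turn samples whose response is longer than the category threshold."""
    threshold = MIN_TOKENS.get(sample.category)
    if threshold is None:
        # No threshold given for this category: keep it unchanged.
        return True
    return sample.turns == 1 and approx_token_count(sample.response) > threshold


def filter_samples(samples: list[Sample]) -> list[Sample]:
    return [s for s in samples if keep(s)]
```

Swapping `approx_token_count` for a real tokenizer call would make the thresholds match the captions more closely; the strict `>` comparison mirrors the ">50" and ">100" wording.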

Model Training

The model was trained on a single A100 GPU for 6 days (4 epochs).
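For scale, the stated training budget (one A100, 6 days, 4 epochs) works out as follows, assuming training ran continuously for the whole period:

```latex
\begin{aligned}
\text{GPU-hours} &= 1 \times 6\ \text{days} \times 24\ \tfrac{\text{h}}{\text{day}} = 144\ \text{GPU-h} \\
\text{time per epoch} &= \frac{144\ \text{h}}{4\ \text{epochs}} = 36\ \text{h} = 1.5\ \text{days}
\end{aligned}
```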

Usage

Prompt format: Llama 3
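Since the model uses the standard Llama 3 Instruct template, a prompt can be assembled by hand as sketched below. The special tokens are those of Meta's Llama 3 Instruct format; the function name `build_llama3_prompt` and the example messages are illustrative. In practice, a tokenizer's built-in chat template (for example `apply_chat_template` in Hugging Face `transformers`) is the safer route.

```python
def build_llama3_prompt(system: str, turns: list[tuple[str, str]]) -> str:
    """Assemble a Llama 3 Instruct prompt string.

    `turns` is a list of (role, content) pairs with role "user" or "assistant".
    The string ends with an open assistant header so the model writes the reply.
    """
    def block(role: str, content: str) -> str:
        # Each message: role header, blank line, content, end-of-turn token.
        return f"<|start_header_id|>{role}<|end_header_id|>\n\n{content}<|eot_id|>"

    parts = ["<|begin_of_text|>"]
    if system:
        parts.append(block("system", system))
    for role, content in turns:
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role!r}")
        parts.append(block(role, content))
    # Generation prompt: open an assistant turn and leave it for the model to fill.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = build_llama3_prompt(
    "You are a helpful assistant.",
    [("user", "What type of diseases in humans are caused by protozoa?")],
)
print(prompt)
```

Because the model is tuned for system prompt fidelity, the `system` argument is where behavior such as step-by-step reasoning or a character persona would be requested.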

Quants

Showcases

In the showcase, the model's COT reasoning is shown in grey and its calculated responses in black. Note that this behavior is elicited through the system card instruction to demonstrate system card fidelity; it is not fine-tuned into the model.

(Fig 4. Showcasing model doing COT to solve difficult tasks, extend and enrich its own answers)

💻 Usage Examples

Basic Usage

<|begin_of_text|>Below is a conversation between an user and an AI assistant. The ai assistant gives response regarding user's questions. USER: user: Given the task definition and input, reply with output. In this task, you are given a question and an answer, you would be asked to create the sentence based on the Question-Answer provided. It should be contained within the Question-Answer provided.

Question: What type of diseases in humans are caused by protozoa? Answer: protist diseases

ASSISTANT: AI: Task Explanation:

In this task, you are asked to create a sentence using the given question and the provided answer. The sentence you create should include the information from both the question and the answer in a concise and coherent manner.

Guidelines:

1. Read the given question and answer carefully.

2. Combine the information from the question and answer to create a new sentence.

3. Make sure your new sentence contains both the question's information and the answer provided.

4. Check your sentence for proper grammar and punctuation.

Steps to find the answer:

1. Read the question: "What type of diseases in humans are caused by protozoa?"

2. Read the answer: "protist diseases"

3. Combine the information from the question and answer to create a new sentence: "In humans, protist diseases are caused by protozoa."

4. Double-check the sentence for proper grammar and punctuation, and make necessary adjustments if needed.

Final Output:

In humans, protist diseases are caused by protozoa.</s><|end_of_text|>
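Because the sample response ends its reasoning with a `Final Output:` marker followed by end-of-sequence tokens, the final answer can be separated from the reasoning with plain string handling. This is a minimal sketch that assumes the marker and end tokens seen in the sample above; `split_response` is a hypothetical helper, and other responses may use different markers or none at all.

```python
# End tokens that may trail a response (assumed from the sample and the Llama 3 format).
END_TOKENS = ("</s>", "<|end_of_text|>", "<|eot_id|>")
MARKER = "Final Output:"


def split_response(text: str) -> tuple[str, str]:
    """Split a response into (reasoning, final answer) at the last MARKER.

    If the marker is absent, the reasoning is empty and the whole text is the answer.
    """
    text = text.strip()
    # Remove trailing end tokens, repeating until none remain.
    stripped = True
    while stripped:
        stripped = False
        for tok in END_TOKENS:
            if text.endswith(tok):
                text = text[: -len(tok)].rstrip()
                stripped = True
    reasoning, _, answer = text.rpartition(MARKER)
    return reasoning.strip(), answer.strip()


sample = (
    "Steps to find the answer: ...\n\nFinal Output:\n\n"
    "In humans, protist diseases are caused by protozoa.</s><|end_of_text|>"
)
reasoning, answer = split_response(sample)
print(answer)  # In humans, protist diseases are caused by protozoa.
```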

📊 Evaluation Results

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Benchmark | Score |
|---|---|
| Avg. | 64.74 |
| AI2 Reasoning Challenge (25-shot) | 59.04 |
| HellaSwag (10-shot) | 79.20 |
| MMLU (5-shot) | 62.99 |
| TruthfulQA (0-shot) | 50.80 |
| Winogrande (5-shot) | 75.93 |
| GSM8k (5-shot) | 60.50 |
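The `Avg.` row appears to be the unweighted mean of the six benchmark scores; the short check below reproduces it from the table values.

```python
from statistics import mean

# Scores copied from the table above.
scores = {
    "AI2 Reasoning Challenge (25-shot)": 59.04,
    "HellaSwag (10-shot)": 79.20,
    "MMLU (5-shot)": 62.99,
    "TruthfulQA (0-shot)": 50.80,
    "Winogrande (5-shot)": 75.93,
    "GSM8k (5-shot)": 60.50,
}

avg = mean(scores.values())
print(f"{avg:.2f}")  # 64.74
```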
