🚀 Llama-3-Taiwan-70B

Llama-3-Taiwan-70B is a 70B-parameter model finetuned on a large corpus of Traditional Mandarin and English data, demonstrating state-of-the-art performance on various Traditional Mandarin NLP benchmarks.

🚀 Quick Start

✨ Features

Llama-3-Taiwan-70B is a large language model finetuned for Traditional Mandarin and English users. It has strong capabilities in language understanding, generation, reasoning, and multi-turn dialogue. Key features include:

- 70B parameters

- Languages: Traditional Mandarin (zh-tw), English (en)

- Finetuned on a high-quality Traditional Mandarin and English corpus covering general knowledge as well as industrial knowledge in the legal, manufacturing, medical, and electronics domains

- 8K context length

- Open model released under the Llama-3 license

📦 Installation


💻 Usage Examples

Basic Usage

📚 Documentation

Training Details

Evaluation

Check out the Open TW LLM Leaderboard for the full, up-to-date list.

Numbers are 0-shot by default.

Eval implementation

^ Closest matching numbers taken from the original dataset.

Needle in a Haystack Evaluation

The "Needle in a 《出師表》" evaluation tests the model's ability to locate and recall important information embedded within a large body of text, using the classic Chinese text 《出師表》 by 諸葛亮.

To run the evaluation, use the script.

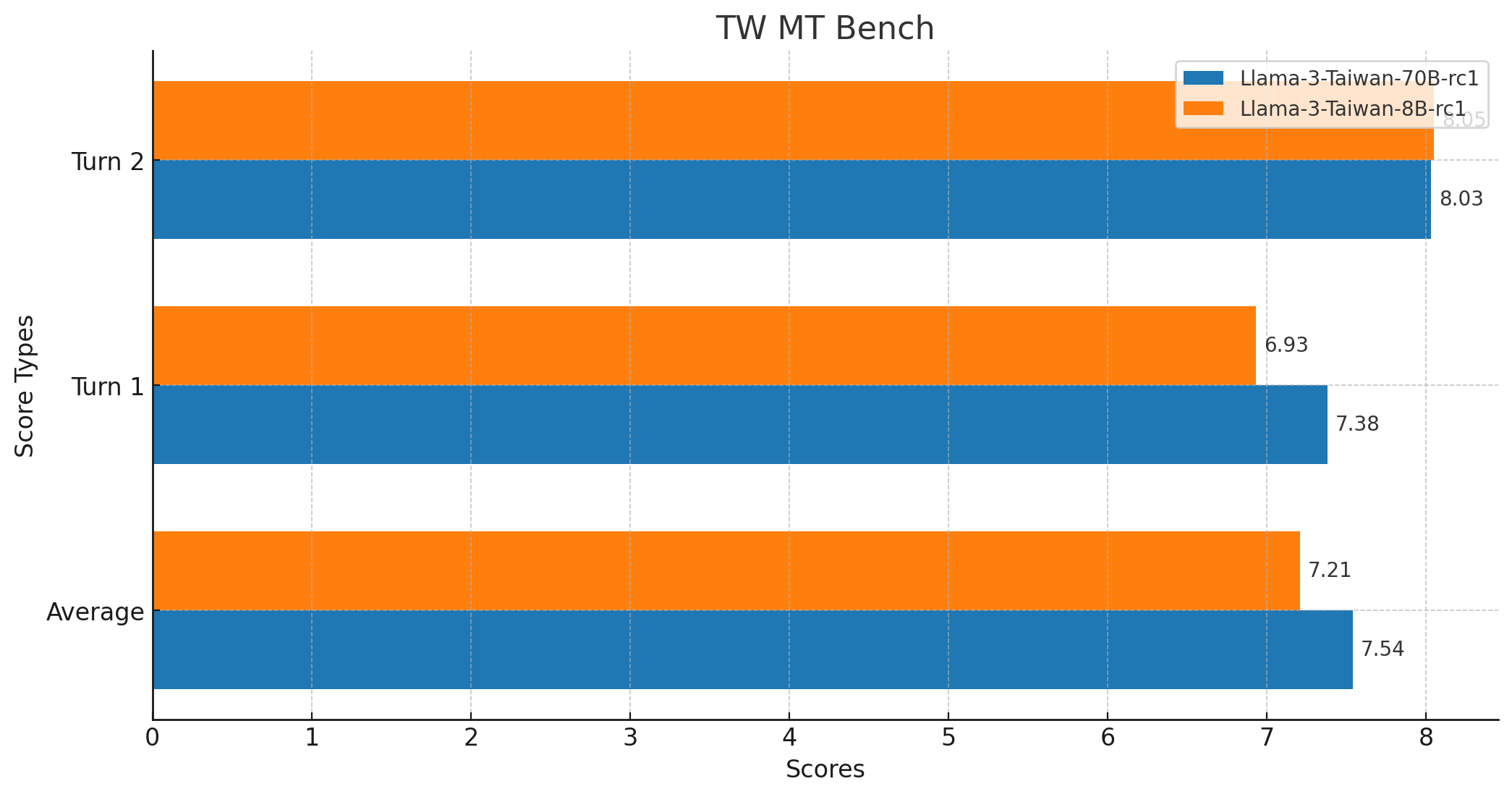

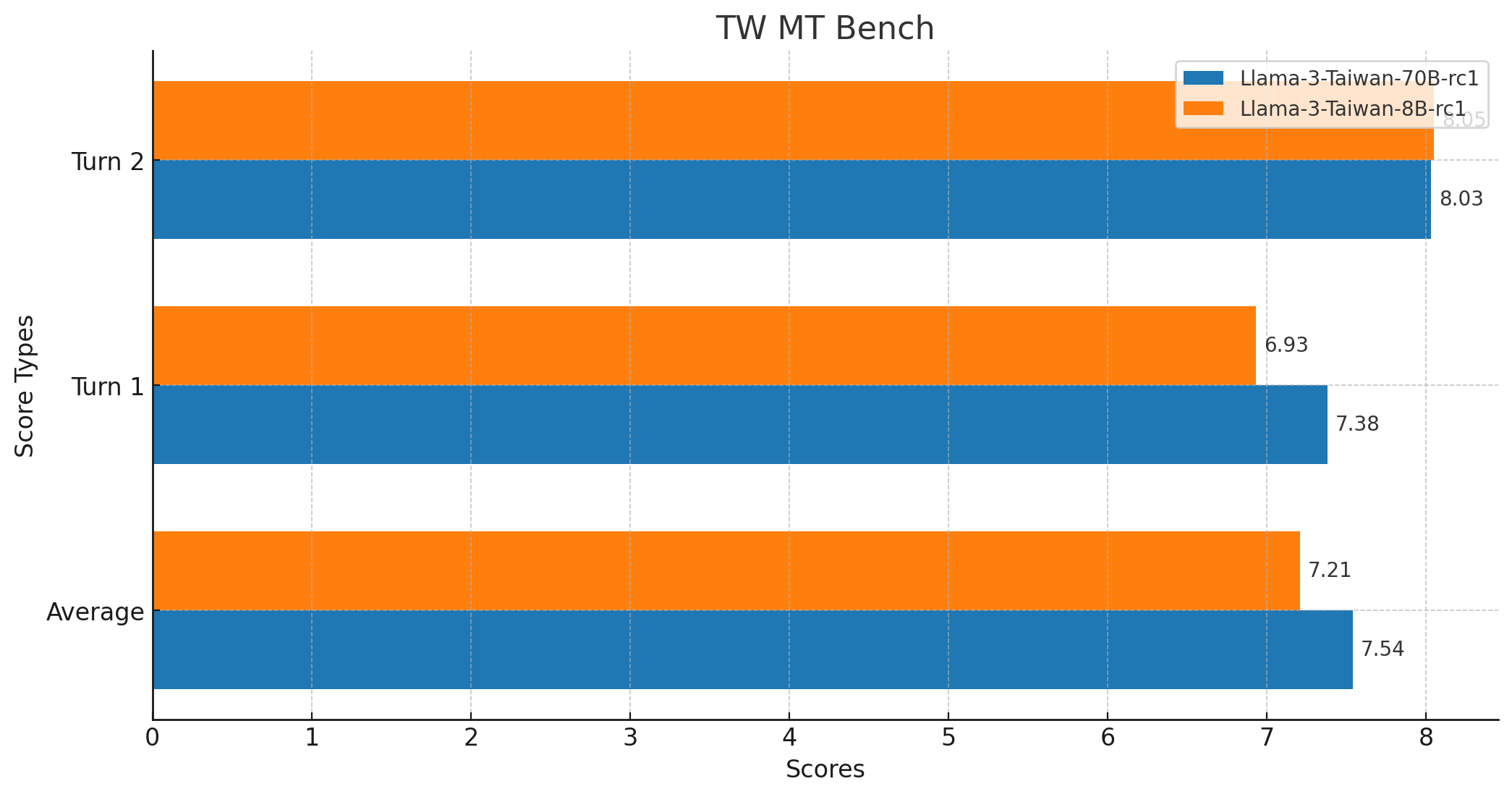

TW MT-Bench Score

Use Cases

Llama-3-Taiwan-70B can be applied to a wide variety of NLP tasks in Traditional Chinese and English, including:

1. Multi-turn Dialogue

System: You are an AI assistant called Twllm, created by TAME (TAiwan Mixture of Expert) project.

User: Hi, hello!

Assistant: Hello! How can I assist you today?

User: Just want to chat...

Assistant: Of course, I'd be happy to chat with you! Is there anything in particular you'd like to talk about?
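A dialogue like the one above is usually passed to the model as a list of role-tagged messages. The sketch below renders such a list by hand, assuming the model keeps the stock Llama 3 instruct prompt layout; that is an assumption, not something this document states, and `render_llama3_prompt` is a hypothetical helper. In practice the chat template shipped with the model's tokenizer is the authoritative source.

```python
from typing import Dict, List


def render_llama3_prompt(
    messages: List[Dict[str, str]], add_generation_prompt: bool = True
) -> str:
    """Render chat messages in the stock Llama 3 instruct layout (assumed here)."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # Leave an open assistant header so the model writes the next turn.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


messages = [
    {
        "role": "system",
        "content": "You are an AI assistant called Twllm, created by TAME "
        "(TAiwan Mixture of Expert) project.",
    },
    {"role": "user", "content": "Hi, hello!"},
    {"role": "assistant", "content": "Hello! How can I assist you today?"},
    {"role": "user", "content": "Just want to chat..."},
]

prompt = render_llama3_prompt(messages)
```

Each later turn is appended to `messages` and the whole list is rendered again, so the model always sees the full conversation, within the 8K context limit.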

2. RAG (Retrieval-Augmented Generation)

Demo: You can open Search Web on twllm.com

System: You are an AI assistant called Twllm, created by TAME (TAiwan Mixture of Expert) project.

User: What's the weather like at NTU tomorrow?

Assistant: According to the weather forecast, the weather at NTU tomorrow will be showers. The low temperature will be 24°C. There will be an east wind of 10 to 15 km/h. The probability of rain is 40%.

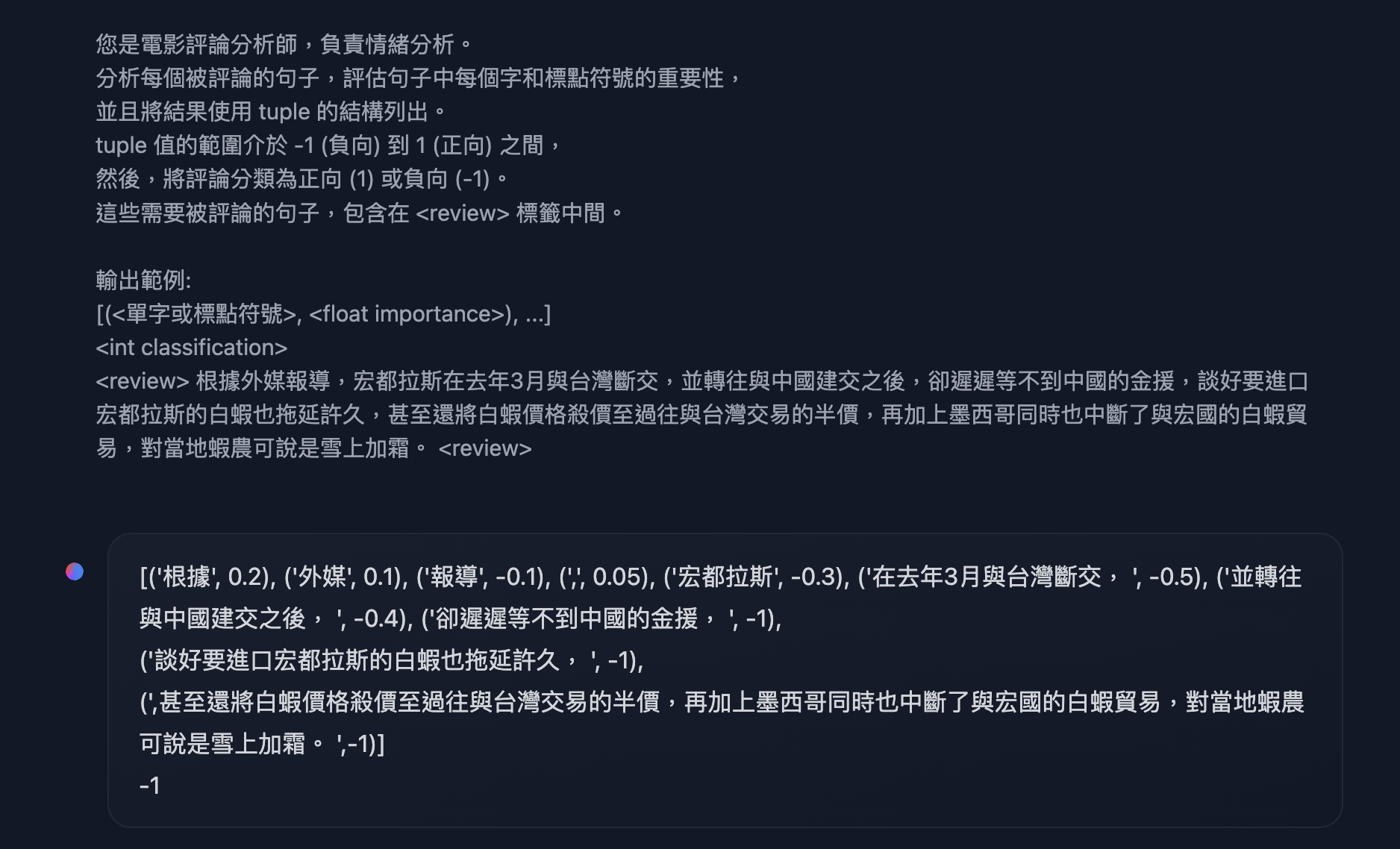

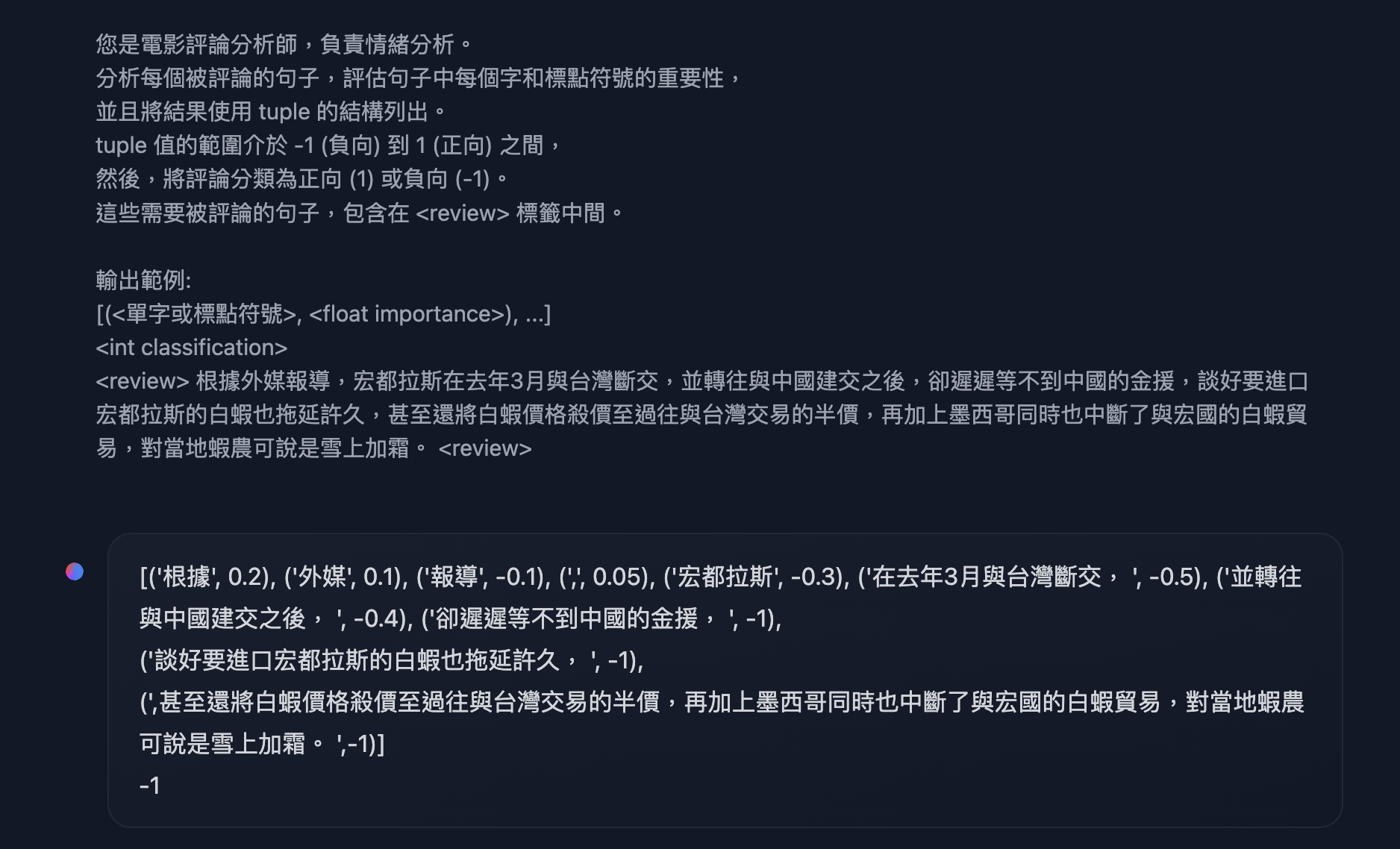
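In a RAG setup, the retrieved text is placed in the prompt ahead of the question. The sketch below shows one way to assemble such a prompt; `build_rag_prompt`, the instruction wording, and the snippet text are hypothetical, and the retrieval step itself (for example, a web search) is out of scope.

```python
from typing import List


def build_rag_prompt(question: str, snippets: List[str]) -> str:
    """Combine retrieved snippets and the user question into one user message."""
    numbered = "\n".join(
        f"[{index}] {snippet.strip()}" for index, snippet in enumerate(snippets, start=1)
    )
    return (
        "Answer the question using only the search results below.\n\n"
        f"Search results:\n{numbered}\n\n"
        f"Question: {question}"
    )


# Placeholder search result, written to match the example answer above.
snippets = [
    "NTU forecast for tomorrow: showers, low 24°C, east wind 10 to 15 km/h, "
    "40% chance of rain.",
]

user_message = build_rag_prompt("What's the weather like at NTU tomorrow?", snippets)
```

Numbering the snippets makes it easy to ask the model to cite which result an answer came from.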

3. Formatted Output, Language Understanding, Entity Recognition, Function Calling

If you are interested in function calling, I strongly recommend using constrained decoding to turn on JSON mode.

Example from HW7 in INTRODUCTION TO GENERATIVE AI 2024 SPRING from HUNG-YI LEE (李宏毅)

System: You are an AI assistant called Twllm, created by TAME (TAiwan Mixture of Expert) project.
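Constrained decoding is configured in the serving stack, so it is not shown here. What can be sketched independently is the check applied to the model's JSON output before a function is actually invoked. The tool definition, the output shape (`name` plus `arguments`), and the helper name below are hypothetical.

```python
import json
from typing import Any, Dict

# Hypothetical tool definition: function name and required argument types.
WEATHER_TOOL: Dict[str, Any] = {
    "name": "get_weather",
    "required": {"location": str, "date": str},
}


def parse_function_call(raw_output: str, tool: Dict[str, Any]) -> Dict[str, Any]:
    """Parse a JSON function call and verify it matches the tool definition."""
    call = json.loads(raw_output)  # raises ValueError on malformed JSON
    if not isinstance(call, dict):
        raise ValueError("function call must be a JSON object")
    if call.get("name") != tool["name"]:
        raise ValueError(f"unexpected function name: {call.get('name')!r}")
    arguments = call.get("arguments")
    if not isinstance(arguments, dict):
        raise ValueError("'arguments' must be a JSON object")
    for key, expected_type in tool["required"].items():
        if not isinstance(arguments.get(key), expected_type):
            raise ValueError(f"missing or mistyped argument: {key!r}")
    return call


# Example model output, as it might look with JSON mode enabled.
raw = '{"name": "get_weather", "arguments": {"location": "NTU", "date": "tomorrow"}}'
call = parse_function_call(raw, WEATHER_TOOL)
```

With JSON mode on, the parsing step should never fail, but the name and argument checks are still worth keeping because valid JSON is not necessarily a valid call.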

🔧 Technical Details

The model was trained with the NVIDIA NeMo™ Framework on NVIDIA Taipei-1, which is built with NVIDIA DGX H100 systems.

The compute and data for training Llama-3-Taiwan-70B were generously sponsored by Chang Gung Memorial Hospital, Chang Chun Group, Legalsign.ai, NVIDIA, Pegatron, TechOrange, and Unimicron (in alphabetical order).

We would like to acknowledge the contributions of our data providers, team members, and advisors in the development of this model, including shasha77 for high-quality YouTube scripts and study materials, Taiwan AI Labs for providing local media content, Ubitus K.K. for offering gaming content, Professor Yun-Nung (Vivian) Chen for her guidance and advice, Wei-Lin Chen for leading our pretraining data pipeline, Tzu-Han Lin for synthetic data generation, Chang-Sheng Kao for enhancing our synthetic data quality, and Kang-Chieh Chen for cleaning instruction-following data.

📄 License

The model is released under the Llama-3 license.