🚀 Whisper-Large-V3-French-Distil-Dec16

Whisper-Large-V3-French-Distil-Dec16 is a distilled version of the Whisper-Large-V3-French model. It reduces memory usage and inference time while maintaining performance, and can be used in various libraries.

🚀 Quick Start

This model belongs to a series of distilled versions of Whisper-Large-V3-French. Reducing the number of decoder layers from 32 to 16, 8, 4, or 2 and distilling on a large-scale dataset (as detailed in this paper) lowers memory usage and speeds up inference.

The distilled variants reduce memory usage and inference time, maintain performance to a degree that depends on the number of retained layers, and mitigate the risk of hallucinations, especially in long-form transcriptions. They can also be combined with the original Whisper-Large-V3-French model for speculative decoding, improving inference speed and output consistency.
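As a rough illustration of why dropping decoder layers saves memory, the sketch below estimates the number of decoder-layer weights kept by each variant. The hidden size (1280) and the 4x feed-forward expansion are assumptions based on the public Whisper large architecture, not figures stated in this card. Biases, layer norms, and embeddings are ignored, so treat the output as an order-of-magnitude estimate only.

```python
# Rough estimate of decoder-layer weights kept by each distilled variant.
# Assumptions (not from this model card): hidden size 1280 and a 4x
# feed-forward expansion. Biases, layer norms, and embeddings are ignored.

D_MODEL = 1280   # assumed hidden size
FFN_MULT = 4     # assumed feed-forward expansion factor


def decoder_layer_params(d_model: int = D_MODEL, ffn_mult: int = FFN_MULT) -> int:
    """Approximate weight count of one Transformer decoder layer."""
    self_attn = 4 * d_model * d_model                   # query, key, value, output projections
    cross_attn = 4 * d_model * d_model                  # the same four projections over encoder states
    feed_forward = 2 * d_model * (ffn_mult * d_model)   # up- and down-projection
    return self_attn + cross_attn + feed_forward


def decoder_params(num_layers: int) -> int:
    """Approximate weight count of a decoder stack with `num_layers` layers."""
    return num_layers * decoder_layer_params()


for layers in (32, 16, 8, 4, 2):
    millions = decoder_params(layers) / 1e6
    print(f"{layers:>2} decoder layers: ~{millions:.0f}M decoder-layer weights")
```

The encoder is left untouched by the distillation described above, so only the decoder term shrinks as layers are removed.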

This model has been converted into various formats for use in different libraries, such as transformers, openai-whisper, faster-whisper, whisper.cpp, candle, and mlx.

✨ Features

- Performance Optimization: Reduces memory usage and inference time while maintaining performance and reducing hallucination risks.

- Multiple Formats: Converted into various formats for compatibility with different libraries.

- Speculative Decoding: Can be combined with the original model for faster and more consistent inference.

📚 Documentation

Performance

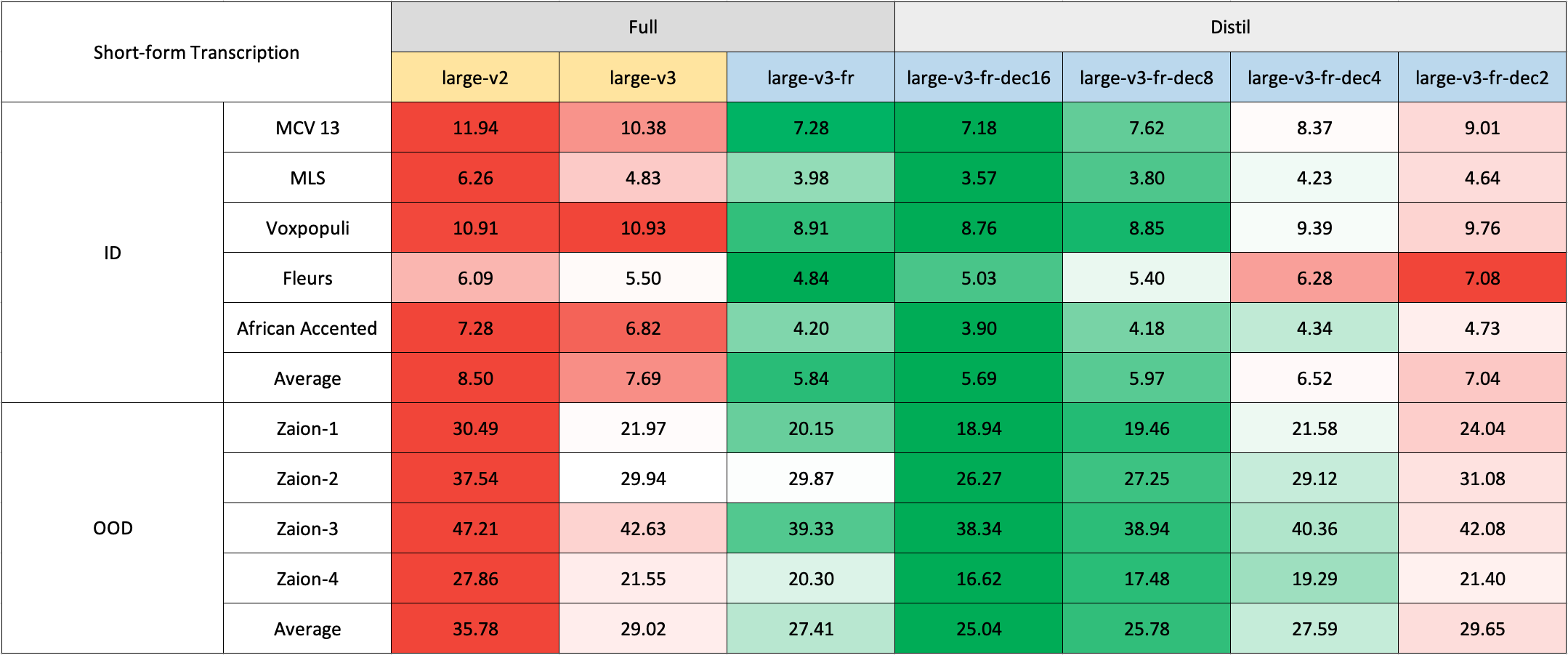

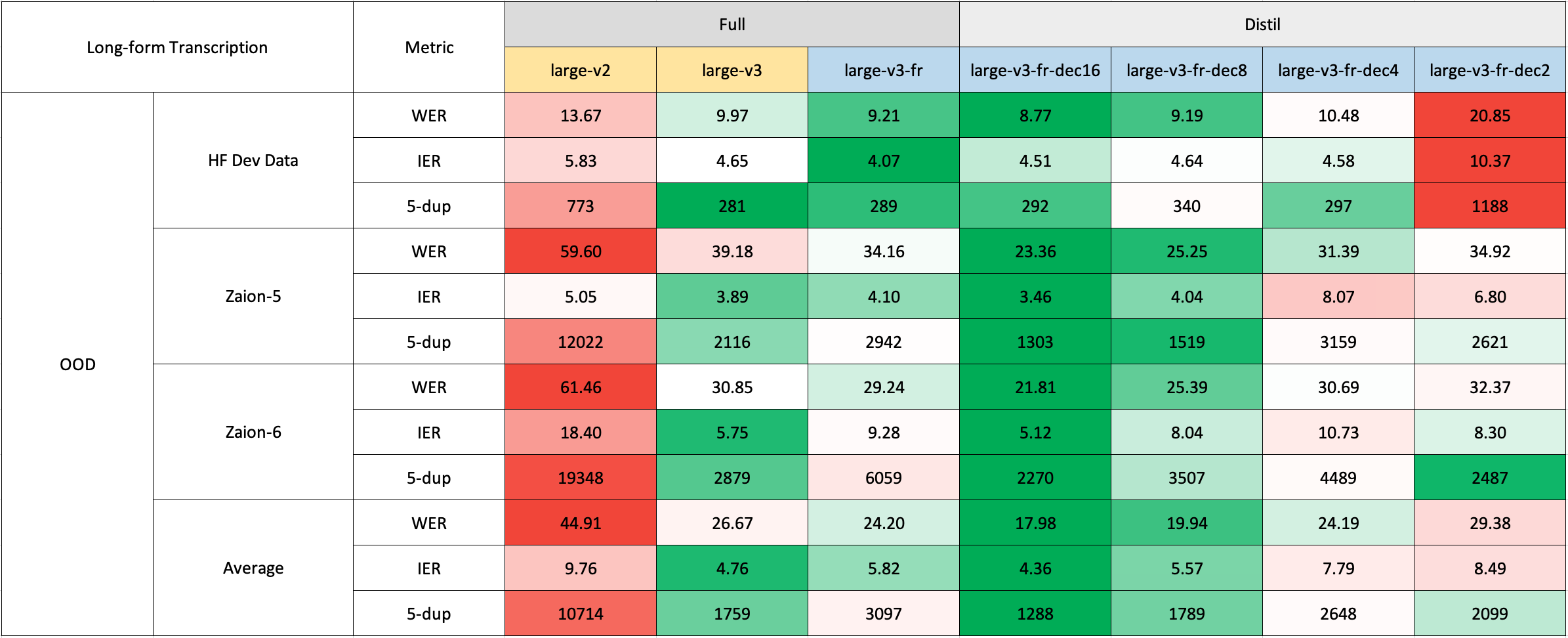

We evaluated our model on short- and long-form transcriptions, and on both in-distribution and out-of-distribution datasets, for a comprehensive analysis of its accuracy, generalizability, and robustness.

Note that the reported WER is the result after converting numbers to text, removing punctuation (except for apostrophes and hyphens), and converting all characters to lowercase.
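The normalization described above can be sketched as follows. This is a minimal standard-library illustration, not the evaluation script actually used: the function names are hypothetical, and the number-to-text conversion step is omitted because it requires a language-specific library.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and strip punctuation, keeping apostrophes and hyphens."""
    text = text.lower().replace("\u2019", "'")  # map typographic apostrophes to ASCII
    # Replace anything that is not a word character, whitespace,
    # apostrophe, or hyphen with a space.
    text = re.sub(r"[^\w\s'-]", " ", text)
    # Collapse runs of whitespace.
    return " ".join(text.split())


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = normalize(reference).split()
    hyp = normalize(hypothesis).split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference prefix
    # processed so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


print(normalize("Bonjour, l'Arc-en-ciel !"))
print(word_error_rate("Il fait beau aujourd'hui.", "il fait bon aujourd'hui"))
```

Because both strings pass through the same normalization, differences in casing or punctuation alone do not count as errors.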

All evaluation results on public datasets can be found here.

Short-Form Transcription

Due to the lack of readily available out-of-domain (OOD) and long-form French test sets, we used internal test sets from Zaion Lab. These sets consist of human-annotated audio-transcription pairs from call center conversations, with significant background noise and domain-specific terminology.

Long-Form Transcription

The long-form transcription was run using the 🤗 Hugging Face pipeline for quicker evaluation. Audio files were segmented into 30-second chunks and processed in parallel.

Usage

Hugging Face Pipeline

The model can be easily used with the 🤗 Hugging Face pipeline class for audio transcription.

For long-form transcription (> 30 seconds), enable chunking by passing the chunk_length_s argument. The pipeline then splits the audio into shorter segments, processes them in parallel, and joins the results at the strides by finding the longest common sequence. This chunked approach may cost a little accuracy compared with OpenAI's sequential algorithm, but it provides 9x faster inference.

```python
import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec16"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Init pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    # chunk_length_s=30,  # for long-form transcription
    max_new_tokens=128,
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]

# Run pipeline
result = pipe(sample)
print(result["text"])
```
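To make the chunked long-form approach more concrete, here is a simplified standard-library sketch of the two ideas involved: cutting the audio into overlapping windows, and joining neighbouring transcripts on their shared overlap. The function names, the 5-second stride, and the word-level merge are illustrative assumptions. The real pipeline works on token ids and tolerates mismatches in the overlap, which this sketch does not.

```python
def chunk_windows(num_samples, sampling_rate=16_000,
                  chunk_length_s=30.0, stride_length_s=5.0):
    """Return (start, end) sample indices of overlapping chunks.

    Consecutive chunks overlap by 2 * stride_length_s seconds, so every
    chunk boundary is seen with context by one of its neighbours.
    """
    if num_samples <= 0:
        return []
    chunk_len = int(round(chunk_length_s * sampling_rate))
    stride = int(round(stride_length_s * sampling_rate))
    step = chunk_len - 2 * stride
    if step <= 0:
        raise ValueError("chunk length must exceed twice the stride")
    windows = []
    start = 0
    while True:
        end = min(start + chunk_len, num_samples)
        windows.append((start, end))
        if end == num_samples:
            break
        start += step
    return windows


def merge_on_overlap(left, right):
    """Join two word lists on the longest suffix of `left` that is a prefix of `right`."""
    for size in range(min(len(left), len(right)), 0, -1):
        if left[-size:] == right[:size]:
            return left + right[size:]
    return left + right  # no shared words: plain concatenation


# A 70-second recording at 16 kHz is split into three overlapping windows.
for start, end in chunk_windows(70 * 16_000):
    print(f"{start / 16_000:.0f}s -> {end / 16_000:.0f}s")

print(merge_on_overlap(["bonjour", "tout", "le"], ["tout", "le", "monde"]))
```

Since each window is independent of the others, the chunks can be batched and decoded in parallel, which is where the speed-up over sequential decoding comes from.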

Hugging Face Low-level APIs

You can also use the 🤗 Hugging Face low-level APIs for transcription, offering more control over the process, as shown below:

```python
import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec16"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]

# Extract features
input_features = processor(
    sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt"
).input_features

# Generate tokens
predicted_ids = model.generate(
    input_features.to(dtype=torch_dtype).to(device), max_new_tokens=128
)

# Detokenize to text
transcription = processor.batch_decode(predicted_ids, skip_special_tokens=True)[0]
print(transcription)
```

Speculative Decoding

Speculative decoding can be achieved using a draft model (a distilled version of Whisper). This approach guarantees the same output as using the main Whisper model alone, offers 2x faster inference speed, and only incurs a slight increase in memory overhead.

Since the distilled Whisper has the same encoder as the original, only its decoder needs to be loaded, and encoder outputs are shared between the main and draft models during inference.

Using speculative decoding with the Hugging Face pipeline is simple: just pass the assistant_model in the generation arguments.

```python
import torch
from datasets import load_dataset
from transformers import (
    AutoModelForCausalLM,
    AutoModelForSpeechSeq2Seq,
    AutoProcessor,
    pipeline,
)

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-french"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Load draft model (decoder only; the encoder is shared with the main model)
assistant_model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec2"
assistant_model = AutoModelForCausalLM.from_pretrained(
    assistant_model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
assistant_model.to(device)

# Init pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    generate_kwargs={"assistant_model": assistant_model},
    max_new_tokens=128,
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]

# Run pipeline
result = pipe(sample)
print(result["text"])
```
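To see why speculative decoding reproduces the main model's output, consider the toy sketch below. It is not the transformers implementation: the two "models" are hypothetical deterministic functions that map a token prefix to the next token, and only greedy decoding is covered. The draft proposes a few tokens, the main model checks them, and the first disagreement is replaced by the main model's own choice.

```python
from typing import Callable, List, Sequence

NextToken = Callable[[Sequence[int]], int]


def greedy_decode(model: NextToken, prompt: Sequence[int], max_new_tokens: int) -> List[int]:
    """Plain greedy decoding: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(main: NextToken, draft: NextToken, prompt: Sequence[int],
                       max_new_tokens: int, lookahead: int = 4) -> List[int]:
    """Greedy speculative decoding with a draft model proposing `lookahead` tokens."""
    if lookahead < 1:
        raise ValueError("lookahead must be at least 1")
    tokens = list(prompt)
    target_len = len(tokens) + max_new_tokens
    while len(tokens) < target_len:
        # 1. The cheap draft model proposes a short continuation.
        proposal: List[int] = []
        for _ in range(min(lookahead, target_len - len(tokens))):
            proposal.append(draft(tokens + proposal))
        # 2. The main model verifies each proposed position. A real system
        #    scores all positions in a single batched forward pass.
        for i, proposed in enumerate(proposal):
            expected = main(tokens + proposal[:i])
            if proposed != expected:
                # Keep the verified prefix, then the main model's own token.
                tokens.extend(proposal[:i])
                tokens.append(expected)
                break
        else:
            tokens.extend(proposal)  # every proposed token was accepted
    return tokens


def main_model(prefix: Sequence[int]) -> int:
    """Hypothetical stand-in for the large model."""
    return (7 * sum(prefix) + len(prefix)) % 50


def draft_model(prefix: Sequence[int]) -> int:
    """Hypothetical draft that agrees with the main model most of the time."""
    guess = main_model(prefix)
    return guess if len(prefix) % 3 else (guess + 1) % 50


same = speculative_decode(main_model, draft_model, [1, 2], 20) == greedy_decode(main_model, [1, 2], 20)
print("identical to main-model output:", same)
```

Every token that ends up in the output has been confirmed or produced by the main model, so the draft only affects speed, never the result.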

OpenAI Whisper

You can use the sequential long-form decoding algorithm with a sliding window and temperature fallback, as described by OpenAI in their original paper.

First, install the openai-whisper package:

```bash
pip install -U openai-whisper
```

Then, download the converted model:

```bash
python -c "from huggingface_hub import hf_hub_download; hf_hub_download(repo_id='bofenghuang/whisper-large-v3-french-distil-dec16', filename='original_model.pt', local_dir='./models/whisper-large-v3-french-distil-dec16')"
```

Now, you can transcribe audio files according to the usage instructions in the repository:

```python
import whisper
from datasets import load_dataset

# Load model
model = whisper.load_model("./models/whisper-large-v3-french-distil-dec16/original_model.pt")

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]["array"].astype("float32")

# Transcribe
result = model.transcribe(sample, language="fr")
print(result["text"])
```
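The temperature fallback mentioned at the start of this section can be sketched as follows. This is a simplified illustration rather than the openai-whisper code: the decoder is a hypothetical callable, and the temperature schedule and thresholds are assumptions meant to resemble that package's defaults. A window is first decoded at temperature 0 and retried at higher temperatures only if the text looks too repetitive (high compression ratio) or too uncertain (low average log-probability).

```python
import zlib
from typing import Callable, Sequence, Tuple

# A decoder is any callable: temperature -> (text, average log-probability).
Decoder = Callable[[float], Tuple[str, float]]


def compression_ratio(text: str) -> float:
    """Raw size over zlib-compressed size; repetitive text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decode_with_fallback(
    decode: Decoder,
    temperatures: Sequence[float] = (0.0, 0.2, 0.4, 0.6, 0.8, 1.0),  # assumed schedule
    compression_ratio_threshold: float = 2.4,                         # assumed threshold
    logprob_threshold: float = -1.0,                                  # assumed threshold
) -> Tuple[str, float]:
    """Return (text, temperature) of the first attempt that passes both checks.

    If every attempt fails, the last attempt is returned.
    """
    if not temperatures:
        raise ValueError("at least one temperature is required")
    for temperature in temperatures:
        text, avg_logprob = decode(temperature)
        too_repetitive = compression_ratio(text) > compression_ratio_threshold
        too_uncertain = avg_logprob < logprob_threshold
        if not (too_repetitive or too_uncertain):
            break
    return text, temperature


def toy_decoder(temperature: float) -> Tuple[str, float]:
    """Hypothetical stand-in for a real decoding call on one audio window."""
    if temperature == 0.0:
        return "oui " * 40, -0.2  # degenerate repetition loop
    return "Bonjour, je vous appelle au sujet de ma facture.", -0.3


text, used_temperature = decode_with_fallback(toy_decoder)
print(used_temperature, text)
```

In the toy run, the greedy attempt is rejected for being repetitive and the first sampled attempt is kept.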

Faster Whisper

Faster Whisper is a re-implementation of OpenAI's Whisper models and the sequential long-form decoding algorithm in the CTranslate2 format.

Compared to openai-whisper, it offers up to 4x faster inference speed and consumes less memory. The model can also be quantized into int8 for better efficiency on both CPU and GPU.

First, install the faster-whisper package:

```bash
pip install faster-whisper
```

Then, download the model converted to the CTranslate2 format:

```bash
python -c "from huggingface_hub import snapshot_download; snapshot_download(repo_id='bofenghuang/whisper-large-v3-french-distil-dec16', local_dir='./models/whisper-large-v3-french-distil-dec16', allow_patterns='ctranslate2/*')"
```

Now, you can transcribe audio files according to the usage instructions in the repository:

```python
from datasets import load_dataset
from faster_whisper import WhisperModel

# Load model (run on GPU with FP16)
model = WhisperModel(
    "./models/whisper-large-v3-french-distil-dec16/ctranslate2",
    device="cuda",
    compute_type="float16",
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]["array"].astype("float32")

# Transcribe; `segments` is a generator, so decoding runs as it is iterated
segments, info = model.transcribe(sample, beam_size=5, language="fr")

for segment in segments:
    print("[%.2fs -> %.2fs] %s" % (segment.start, segment.end, segment.text))
```
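For intuition about the int8 quantization mentioned earlier in this section, the following toy sketch shows symmetric per-tensor quantization of a handful of weights. It is a generic illustration with hypothetical function names and is not necessarily the exact scheme CTranslate2 applies. Each weight is stored as an 8-bit integer plus one shared floating-point scale, trading a small rounding error for roughly a quarter of the FP32 storage.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: floats -> (int8 values, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0, 0.333]
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

The reconstruction error of each weight is bounded by half a quantization step, that is, `scale / 2`.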

Whisper.cpp

Whisper.cpp is a re-implementation of OpenAI's Whisper models in plain C/C++ without any dependencies. It is compatible with various backends and platforms.

The model can be quantized to either 4-bit or 5-bit integers for better efficiency.

First, clone and build the whisper.cpp repository:

```bash
git clone https://github.com/ggerganov/whisper.cpp.git
cd whisper.cpp
```

Training details

The model was trained by distilling the Whisper-Large-V3-French model, reducing the number of decoder layers and using a large-scale dataset as described in the paper.

Acknowledgements

We would like to thank all the contributors to the related projects, including but not limited to the developers of the original Whisper model, the datasets used for training and evaluation, and the open-source libraries that made this work possible.

📄 License

This project is licensed under the MIT license.
