🚀 M-CTC-T

A massively multilingual speech recognizer from Meta AI. A single model transcribes speech across many languages and also predicts which language is being spoken.

✨ Features

- Multilingual Support: Capable of recognizing speech in multiple languages.

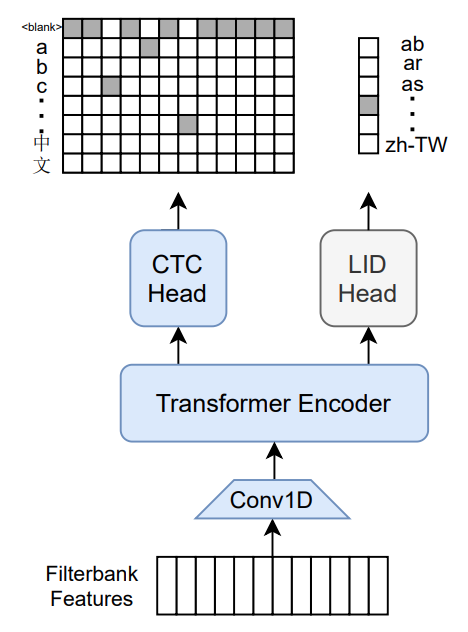

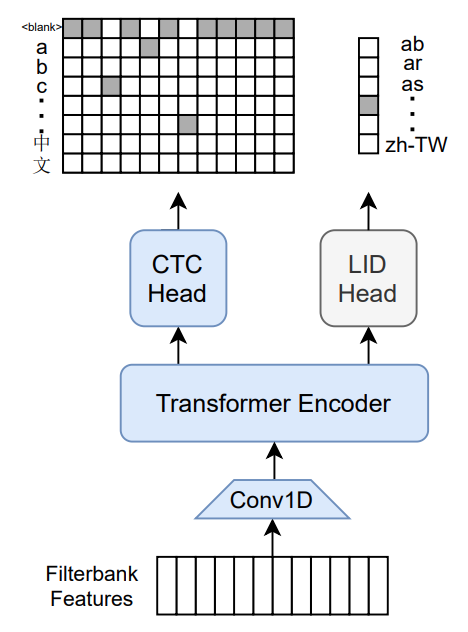

- Model Architecture: A 1B-parameter transformer encoder with a CTC head over 8065 character labels and a language identification head over 60 language ID labels.

- Training Data: Trained first on Common Voice (version 6.1, December 2020 release) and VoxPopuli, then on Common Voice only. The labels are unnormalized character-level transcripts.

- Input Requirement: Takes Mel filterbank features computed from a 16 kHz audio signal as input.
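To make the input requirement more concrete, the sketch below estimates how many feature frames a 16 kHz signal produces. The 25 ms window and 10 ms hop are assumptions (common values for speech front ends), not settings confirmed by this document, and `num_frames` is a hypothetical helper written for illustration.

```python
def num_frames(num_samples: int, sample_rate: int = 16_000,
               win_ms: float = 25.0, hop_ms: float = 10.0) -> int:
    """Count the full analysis frames in a signal, with no padding.

    win_ms and hop_ms are assumed values, not read from the model config.
    """
    win = int(sample_rate * win_ms / 1000)  # samples per window
    hop = int(sample_rate * hop_ms / 1000)  # samples between window starts
    if num_samples < win:
        return 0  # signal is shorter than a single window
    return 1 + (num_samples - win) // hop


one_second = num_frames(16_000)
print(one_second)  # frames for one second of 16 kHz audio under these assumptions
```

Audio recorded at a different rate would need to be resampled to 16 kHz before feature extraction, otherwise the frame timing no longer matches what the model saw in training.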

The original Flashlight code, model checkpoints, and Colab notebook can be found at https://github.com/flashlight/wav2letter/tree/main/recipes/mling_pl.

💻 Usage Examples

Basic Usage

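    import torch
    import torchaudio  # imported in the original example; not called directly below
    from datasets import load_dataset
    from transformers import MCTCTForCTC, MCTCTProcessor

    model = MCTCTForCTC.from_pretrained("speechbrain/m-ctc-t-large")
    processor = MCTCTProcessor.from_pretrained("speechbrain/m-ctc-t-large")

    # Load a small dummy LibriSpeech split and take its first audio sample
    ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")
    sample = ds[0]["audio"]

    # Extract Mel filterbank features, passing the sampling rate explicitly
    input_features = processor(sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt").input_features

    # Inference only, so skip gradient tracking
    with torch.no_grad():
        logits = model(input_features).logits

    # Take the most likely label per frame, then decode the ids to text
    predicted_ids = torch.argmax(logits, dim=-1)
    transcription = processor.batch_decode(predicted_ids)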
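The final two steps of the example (per-frame argmax, then decoding) amount to greedy CTC decoding. As a rough illustration of the collapse step that turns frame-level ids into a label sequence, here is a minimal pure-Python sketch. The blank index of 0 and the example ids are assumptions for illustration and are not read from the model's vocabulary; `ctc_greedy_collapse` is a hypothetical helper, not part of `transformers`.

```python
from itertools import groupby


def ctc_greedy_collapse(frame_ids, blank_id=0):
    """Merge consecutive repeated ids, then drop the blank label.

    blank_id=0 is an assumed value for this sketch.
    """
    return [label for label, _ in groupby(frame_ids) if label != blank_id]


# Eight frames: blank, 3, 3, blank, 3, 5, 5, blank
frames = [0, 3, 3, 0, 3, 5, 5, 0]
collapsed = ctc_greedy_collapse(frames)
print(collapsed)  # [3, 3, 5]
```

The blank between the two runs of `3` is what lets CTC emit the same character twice in a row. Without it, the repeats would merge into a single label.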

Results for Common Voice, averaged over all languages:

Character error rate (CER):
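For reference, CER is conventionally the character-level edit distance between the reference and the hypothesis, divided by the reference length. The sketch below shows that definition under the assumption that no text normalization is applied. It is not the evaluation script used for the reported results, and `edit_distance` and `cer` are hypothetical helpers.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance over characters (insert, delete, substitute)."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # deleting the first i reference characters
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance divided by reference length."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


print(cer("hello", "hallo"))  # one substitution over five characters: 0.2
```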

📚 Documentation

For more information on how the model was trained, please take a look at the official paper.

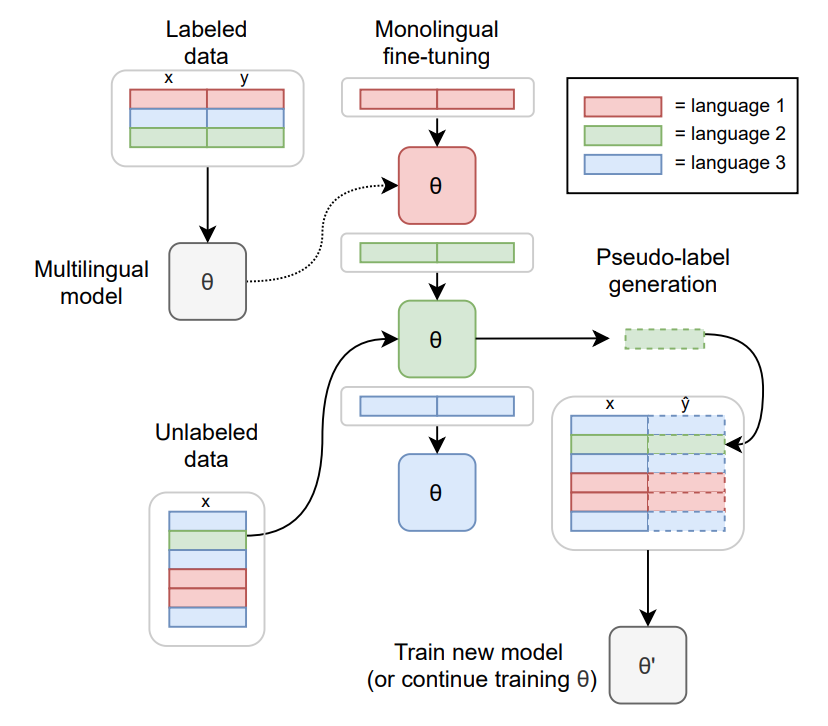

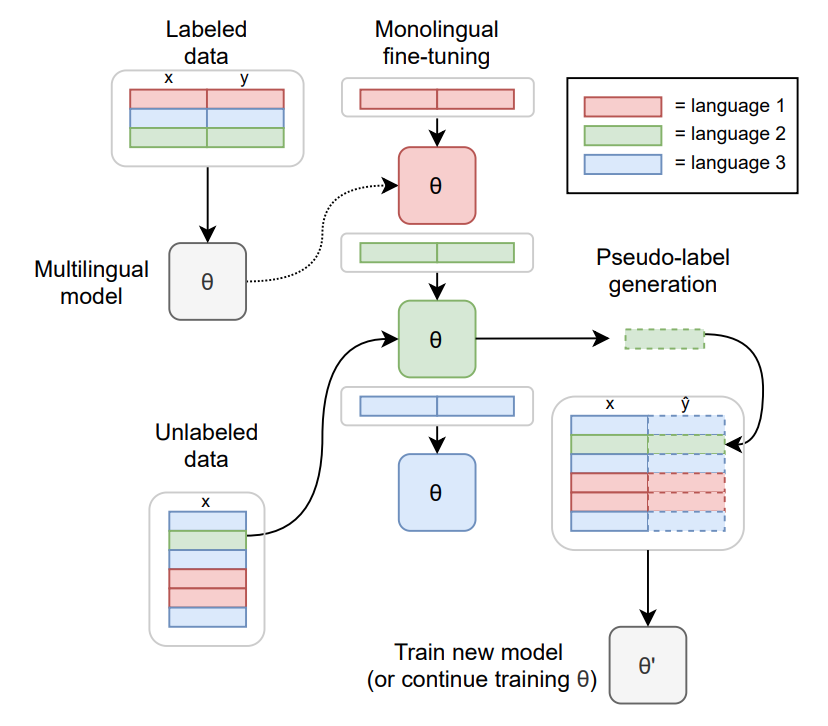

TO-DO: replace with the training diagram from the paper


🔧 Technical Details

The model is a 1B-parameter transformer encoder with two output heads: a CTC head over 8065 character labels and a language identification head over 60 language ID labels. It is trained on Common Voice (version 6.1, December 2020 release) and VoxPopuli, then on Common Voice only, and takes Mel filterbank features from a 16 kHz audio signal as input.
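To give a sense of scale for the two heads, the arithmetic below counts the parameters each would have if it were a single linear projection from the encoder output. Both the single-linear-layer shape and the hidden size of 1536 are assumptions made for this sketch. Only the label counts (8065 and 60) come from the description above.

```python
HIDDEN_SIZE = 1536       # assumed encoder output width, not stated in this document
NUM_CHAR_LABELS = 8065   # CTC head output size, from the description above
NUM_LANG_LABELS = 60     # language identification head output size


def linear_params(in_features: int, out_features: int) -> int:
    """Parameter count of a linear layer: weight matrix plus bias vector."""
    return in_features * out_features + out_features


ctc_head = linear_params(HIDDEN_SIZE, NUM_CHAR_LABELS)
lid_head = linear_params(HIDDEN_SIZE, NUM_LANG_LABELS)

print(f"CTC head: {ctc_head:,} parameters")
print(f"LID head: {lid_head:,} parameters")
```

Under these assumptions, both heads together are a small fraction of the roughly 1B parameters, so nearly all of the model's capacity sits in the shared encoder.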

📄 License

This project is licensed under the Apache-2.0 license.

📄 Citation

Paper

Authors: Loren Lugosch, Tatiana Likhomanenko, Gabriel Synnaeve, Ronan Collobert

    @article{lugosch2021pseudo,
      title={Pseudo-Labeling for Massively Multilingual Speech Recognition},
      author={Lugosch, Loren and Likhomanenko, Tatiana and Synnaeve, Gabriel and Collobert, Ronan},
      journal={ICASSP},
      year={2022}
    }

Additional thanks to Chan Woo Kim and Patrick von Platen for porting the model from Flashlight to PyTorch.

| Property | Details |
| --- | --- |
| Model Type | A 1B-parameter transformer encoder with CTC and language identification heads |
| Training Data | Common Voice (version 6.1, December 2020 release) and VoxPopuli, then Common Voice only |
