🚀 BERTugues Base (aka "BERTugues-base-portuguese-cased")

BERTugues Base is a BERT model pre-trained for Portuguese with the Masked Language Modeling and Next Sentence Prediction objectives. It improves on comparable models in several downstream tasks, as shown under Performance.

🚀 Quick Start

BERTugues was pre-trained following the procedure of the original BERT paper, with the Masked Language Modeling (MLM) and Next Sentence Prediction (NSP) objectives. It was trained for 1 million steps on more than 20 GB of text; see the published paper for further training details. Like Bertimbau, it was pre-trained on the BrWAC corpus, with Portuguese Wikipedia used to train the tokenizer, but the training pipeline includes a few improvements:

- Removal of characters uncommon in Portuguese from tokenizer training: In Bertimbau, more than 7,000 of the 29,794 tokens contain Asian or other special characters rarely used in Portuguese, such as "##漫", "##켝" and "##前". In BERTugues, we removed these characters before training the tokenizer.

- 😀 Addition of the most common emojis to the tokenizer: Wikipedia text contains few emojis, so few emoji tokens end up in the vocabulary. As shown in the literature, emojis are important for a range of tasks.

- Quality filtering of BrWAC texts: We applied the heuristic filters proposed in DeepMind's Gopher paper to remove low-quality texts from BrWAC.
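The two text-cleaning steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the BERTugues preprocessing code: the character allowlist (`EXTRA_ALLOWED`) and the Portuguese stop-word list (`PT_STOP_WORDS`) are hypothetical, and the function names are illustrative. The numeric thresholds in `passes_gopher_filters` are the ones published in the Gopher paper (50 to 100,000 words, mean word length 3 to 10, symbol-to-word ratio at most 0.1, bullet and ellipsis line limits, 80% alphabetic words, at least two stop words); how BERTugues adapted them to Portuguese is not stated here.

```python
import string
import unicodedata

# Hypothetical allowlist of non-letter symbols common in Portuguese text.
EXTRA_ALLOWED = set("ºª°–“”‘’«»€")

# Hypothetical Portuguese stop words; Gopher's original list is English
# ("the", "be", "to", "of", "and", "that", "have", "with").
PT_STOP_WORDS = {"de", "a", "o", "que", "e", "do", "da", "em", "um", "para"}


def keep_char(ch: str) -> bool:
    """Keep whitespace, ASCII digits and punctuation, allowlisted symbols and Latin letters."""
    if ch.isspace() or ch in string.digits or ch in string.punctuation or ch in EXTRA_ALLOWED:
        return True
    # Letters survive only if Unicode names them as Latin ("ç", "ã"); CJK, Hangul, etc. are dropped.
    return ch.isalpha() and unicodedata.name(ch, "").startswith("LATIN")


def clean_tokenizer_text(text: str) -> str:
    """Remove characters rarely used in Portuguese before tokenizer training."""
    text = unicodedata.normalize("NFC", text)  # compose accents so "ã" is one character
    return "".join(ch for ch in text if keep_char(ch))


def passes_gopher_filters(doc: str, stop_words: set = PT_STOP_WORDS) -> bool:
    """Gopher-style document quality heuristics (thresholds from the Gopher paper)."""
    words = doc.split()
    n = len(words)
    if not 50 <= n <= 100_000:
        return False
    if not 3 <= sum(len(w) for w in words) / n <= 10:  # mean word length
        return False
    # Symbol-to-word ratio above 0.1 for "#" or for ellipses
    if doc.count("#") / n > 0.1 or (doc.count("...") + doc.count("…")) / n > 0.1:
        return False
    lines = [line.strip() for line in doc.splitlines() if line.strip()]
    bullets = sum(line.startswith(("•", "-", "*")) for line in lines)
    ellipsis_ends = sum(line.endswith(("...", "…")) for line in lines)
    if bullets > 0.9 * len(lines) or ellipsis_ends > 0.3 * len(lines):
        return False
    # At least 80% of words must contain an alphabetic character
    if sum(any(c.isalpha() for c in w) for w in words) < 0.8 * n:
        return False
    # At least two distinct stop words must appear
    tokens = {w.lower().strip(string.punctuation) for w in words}
    return len(tokens & stop_words) >= 2


print(clean_tokenizer_text("Ação, 1º lugar! 漫켝前"))
```

Keeping the character filter separate from the document filter mirrors the list above: one shapes the tokenizer's vocabulary, the other decides which BrWAC documents are used for pre-training.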

✨ Features

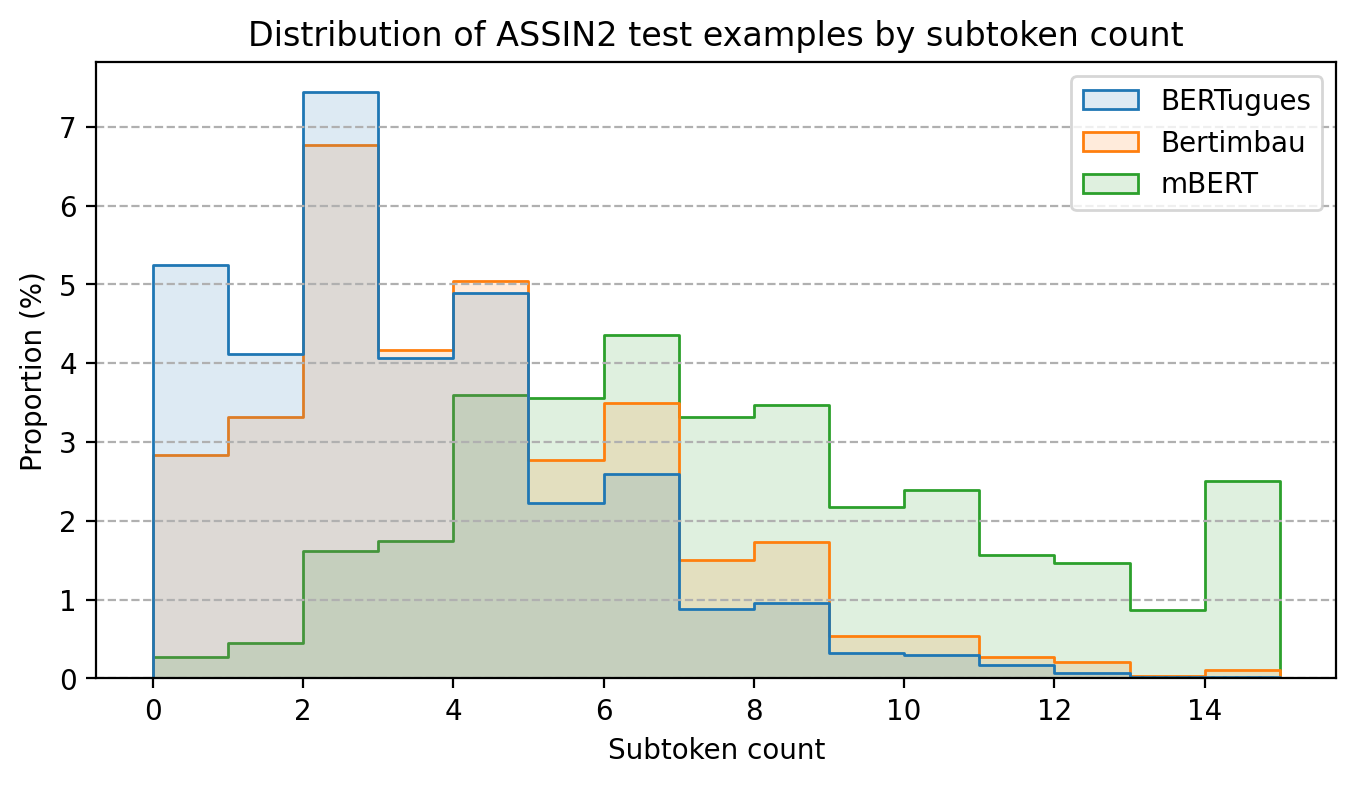

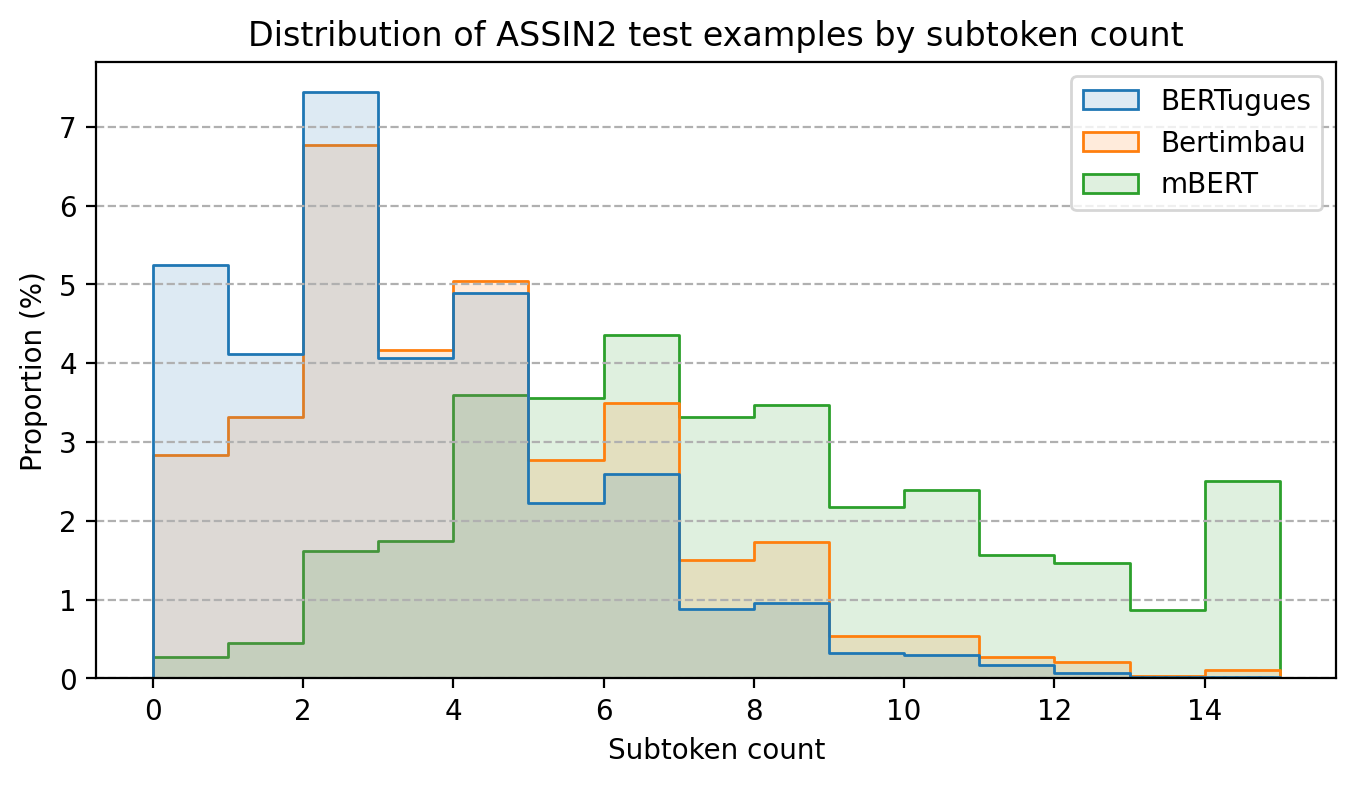

Tokenizer

By removing tokens built from characters rarely used in Portuguese, we reduced the average number of words split into multiple tokens. On assin2, the same dataset used to evaluate Bertimbau in its author's master's thesis, the average number of split words per text dropped from 3.8 (Bertimbau) to 3.0 (BERTugues). For multilingual BERT, this number was 7.4.
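To make the "split words per text" metric concrete, here is a minimal sketch of BERT-style WordPiece splitting (greedy longest-match-first, with "##" marking word continuations) and the average count of words that end up in more than one token. It is an illustration, not the evaluation script used for the numbers above: the toy vocabulary is hypothetical, and words are split on whitespace only, whereas BERT's tokenizer also splits off punctuation first.

```python
def wordpiece(word: str, vocab: set, unk: str = "[UNK]") -> list:
    """Greedy longest-match-first WordPiece split, as used by BERT tokenizers."""
    tokens, start = [], 0
    while start < len(word):
        end, piece = len(word), None
        # Try the longest remaining substring first, shrinking until it is in the vocabulary
        while start < end:
            sub = word[start:end] if start == 0 else "##" + word[start:end]
            if sub in vocab:
                piece = sub
                break
            end -= 1
        if piece is None:  # nothing matches: the whole word becomes [UNK]
            return [unk]
        tokens.append(piece)
        start = end
    return tokens


def avg_split_words(texts: list, vocab: set) -> float:
    """Average number of words per text that are split into more than one token."""
    split_counts = [sum(len(wordpiece(w, vocab)) > 1 for w in text.split()) for text in texts]
    return sum(split_counts) / len(texts)


vocab = {"o", "gato", "casa", "dorm", "##iu", "##e"}  # hypothetical toy vocabulary
print(wordpiece("dormiu", vocab))                              # ['dorm', '##iu']
print(avg_split_words(["o gato dormiu", "a casa"], vocab))     # 0.5
```

A vocabulary wasted on characters that never appear in Portuguese leaves fewer slots for Portuguese subwords, which is why more words fall back to multi-piece splits.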

Performance

To compare performance, we evaluated text classification on the IMDB movie review dataset, using a good-quality Portuguese translation. We fed the sentence representation produced by BERTugues into a Random Forest classifier.
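The results below are reported as F1. As a reminder of what that number measures, here is a minimal sketch of binary F1, the harmonic mean of precision and recall for the positive class. Whether the IMDB score was computed as binary or macro-averaged F1 is not stated here, so treat this as an assumption; in this setup `y_pred` would be the Random Forest's predictions.

```python
def f1_score(y_true: list, y_pred: list, positive=1) -> float:
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# 2 true positives, 1 false positive, 1 false negative: precision = recall = 2/3
print(f1_score([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```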

We also reused the benchmark from the paper "JurisBERT: Transformer-based model for embedding legal texts", which pre-trains a BERT model specifically on legal-domain texts and uses multilingual BERT and Bertimbau as baselines. We ran the code provided by the paper's authors with BERTugues added. In this benchmark (the STJ, PJERJ and TJMS columns below), the model is used to determine whether two texts are about the same topic.
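Deciding whether two texts share a topic is commonly done by comparing their embeddings. The sketch below assumes that setup: it scores two embedding vectors with cosine similarity and applies a hypothetical threshold of 0.8. The threshold, the function names and the plain-list vectors (standing in for model outputs) are illustrative assumptions; the exact procedure in the JurisBERT code may differ.

```python
import math


def cosine_similarity(u: list, v: list) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def same_topic(u: list, v: list, threshold: float = 0.8) -> bool:
    """Hypothetical decision rule: same topic if the similarity reaches the threshold."""
    return cosine_similarity(u, v) >= threshold


print(same_topic([1.0, 2.0, 3.0], [1.0, 2.0, 3.1]))  # True
print(same_topic([1.0, 0.0], [0.0, 1.0]))            # False
```

Cosine similarity ignores vector length, so two texts of different sizes can still be judged close if their embeddings point in the same direction.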

| Model | IMDB (F1) | STJ (F1) | PJERJ (F1) | TJMS (F1) | Average F1 |
|---|---|---|---|---|---|
| Multilingual BERT | 72.0% | 30.4% | 63.8% | 65.0% | 57.8% |
| Bertimbau-Base | 82.2% | 35.6% | 63.9% | 71.2% | 63.2% |
| Bertimbau-Large | 85.3% | 43.0% | 63.8% | 74.0% | 66.5% |
| BERTugues-Base | 84.0% | 45.2% | 67.5% | 70.0% | 66.7% |

BERTugues outperformed Bertimbau-Base in 3 of the 4 tasks and Bertimbau-Large in 2 of the 4, and it has the highest average F1. Bertimbau-Large is a much larger model, with roughly 3 times as many parameters, and is more computationally expensive.
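The Average F1 column is consistent with the unweighted mean of the four task scores (up to rounding to one decimal), and the win counts can be checked directly. The short script below reproduces both from the scores in the results table; the dictionary layout and function names are just for illustration.

```python
# F1 scores (%) from the results table: IMDB, STJ, PJERJ, TJMS
SCORES = {
    "Multilingual BERT": [72.0, 30.4, 63.8, 65.0],
    "Bertimbau-Base": [82.2, 35.6, 63.9, 71.2],
    "Bertimbau-Large": [85.3, 43.0, 63.8, 74.0],
    "BERTugues-Base": [84.0, 45.2, 67.5, 70.0],
}


def average_f1(model: str) -> float:
    """Unweighted mean over the four tasks."""
    return sum(SCORES[model]) / len(SCORES[model])


def wins(model: str, baseline: str) -> int:
    """Number of tasks where `model` scores strictly higher than `baseline`."""
    return sum(a > b for a, b in zip(SCORES[model], SCORES[baseline]))


for name in SCORES:
    print(f"{name}: {average_f1(name):.3f}")
print(wins("BERTugues-Base", "Bertimbau-Base"))   # 3
print(wins("BERTugues-Base", "Bertimbau-Large"))  # 2
```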

💻 Usage Examples

Basic Usage

Many more usage examples are available on our GitHub. Here are two for quick reference:

Masked Language Modeling

```python
from transformers import BertTokenizer, BertForMaskedLM, pipeline

model = BertForMaskedLM.from_pretrained("ricardoz/BERTugues-base-portuguese-cased")
# Cased model: keep the original casing of the input
tokenizer = BertTokenizer.from_pretrained("ricardoz/BERTugues-base-portuguese-cased", do_lower_case=False)

# Return the 3 most likely tokens for the [MASK] position
pipe = pipeline("fill-mask", model=model, tokenizer=tokenizer, top_k=3)

# The tokenizer adds [CLS] and [SEP] itself, so they are not written in the input
pipe("Eduardo abriu os [MASK], mas não quis se levantar. Ficou deitado e viu que horas eram.")
```
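The fill-mask pipeline exercises the Masked Language Modeling objective. For reference, the original BERT paper, whose pre-training procedure BERTugues followed, builds MLM examples by selecting 15% of token positions; of those, 80% are replaced with [MASK], 10% with a random token and 10% are left unchanged. The sketch below implements that scheme on plain string tokens. It simplifies the original, which skips special tokens such as [CLS] and [SEP] and picks a fixed number of positions per sequence: here each position is selected independently with probability `mask_prob`, as Hugging Face's data collator does. `mask_tokens` and its parameters are illustrative names, not part of BERTugues or transformers.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens: list, vocab: list, mask_prob: float = 0.15, seed=None):
    """BERT-style MLM corruption.

    Each position is selected with probability mask_prob; a selected token becomes
    [MASK] 80% of the time, a random vocabulary token 10% of the time, and stays
    unchanged 10% of the time. Returns (corrupted tokens, labels), where labels hold
    the original token at selected positions and None elsewhere (no loss there).
    """
    rng = random.Random(seed)
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected for prediction
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)
        # otherwise keep the original token
    return corrupted, labels


tokens = "Eduardo abriu os olhos , mas não quis se levantar".split()
print(mask_tokens(tokens, vocab=["casa", "gato", "porta"], seed=0))
```

Keeping 10% of selected tokens unchanged and swapping 10% for random ones means the model cannot assume that only [MASK] positions need predicting, which matters because [MASK] never appears at inference time.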

Sentence Embedding

```python
import torch
from transformers import BertTokenizer, BertModel

model = BertModel.from_pretrained("ricardoz/BERTugues-base-portuguese-cased")
tokenizer = BertTokenizer.from_pretrained("ricardoz/BERTugues-base-portuguese-cased", do_lower_case=False)

# encode() adds [CLS] and [SEP] itself; return_tensors="pt" returns a batch of size 1
input_ids = tokenizer.encode("Eduardo abriu os olhos, mas não quis se levantar. Ficou deitado e viu que horas eram.", return_tensors="pt")

with torch.no_grad():
    # Hidden state of the first token ([CLS]) as the sentence representation: (1, hidden_size)
    sentence_embedding = model(input_ids).last_hidden_state[:, 0]

print(sentence_embedding.shape)
```

📄 License

This model is released under a license categorized as "other".

📚 Documentation

Citation

If you use BERTugues in your publications, please cite it! Citations help the model gain recognition in the scientific community.

```bibtex
@article{Zago2024bertugues,
  title   = {BERTugues: A Novel BERT Transformer Model Pre-trained for Brazilian Portuguese},
  volume  = {45},
  url     = {https://ojs.uel.br/revistas/uel/index.php/semexatas/article/view/50630},
  DOI     = {10.5433/1679-0375.2024.v45.50630},
  journal = {Semina: Ciências Exatas e Tecnológicas},
  author  = {Mazza Zago, Ricardo and Agnoletti dos Santos Pedotti, Luciane},
  year    = {2024},
  month   = {Dec.},
  pages   = {e50630}
}
```

More Information

For more information, please visit our GitHub!
