🚀 ColQwen2: Visual Retriever based on Qwen2-VL-2B-Instruct with ColBERT strategy

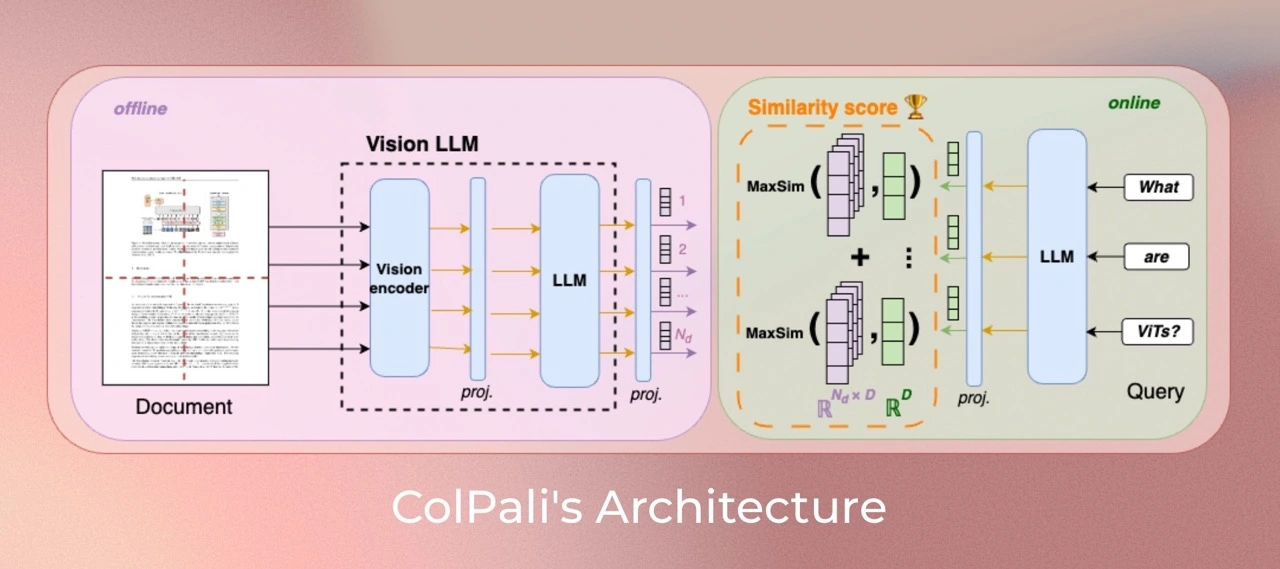

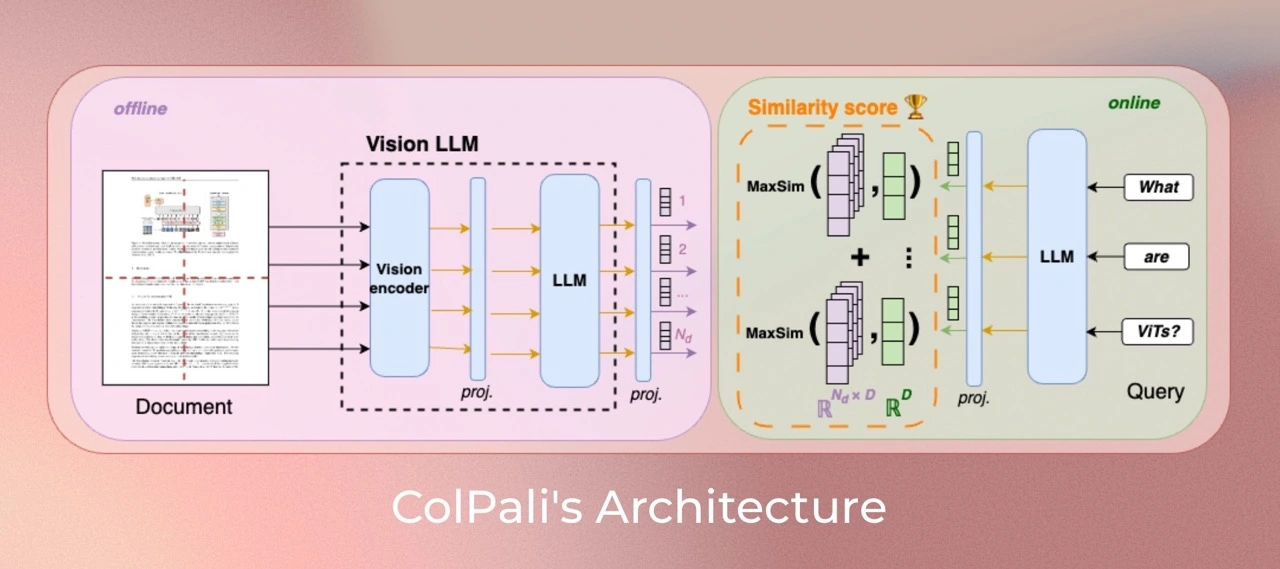

ColQwen is a model built on a novel architecture and training strategy that uses Vision Language Models (VLMs) to efficiently index documents from their visual features. It extends Qwen2-VL-2B to generate ColBERT-style multi-vector representations of text and images. The model was introduced in the paper ColPali: Efficient Document Retrieval with Vision Language Models and first released in this repository.
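To make "ColBERT-style multi-vector representations" concrete, here is a minimal sketch of late-interaction (MaxSim) scoring in plain Python. The function name `maxsim_score` and the toy vectors are illustrative assumptions, not part of colpali-engine; the library computes the same kind of score with batched tensor operations.

```python
def maxsim_score(query_vecs, page_vecs):
    """Late-interaction score between one query and one page.

    For each query vector, take the largest dot product against any
    page vector, then sum those maxima over the query vectors.
    """
    return sum(
        max(sum(q * p for q, p in zip(qv, pv)) for pv in page_vecs)
        for qv in query_vecs
    )


# Toy 2-dimensional embeddings (hypothetical values, for illustration only).
query = [[1.0, 0.0], [0.0, 1.0]]
page_a = [[1.0, 0.0], [0.5, 0.5]]
page_b = [[0.0, 1.0], [0.0, 1.0]]

score_a = maxsim_score(query, page_a)
score_b = maxsim_score(query, page_b)
print(score_a, score_b)
```

Because every query vector is matched against every page vector, the page that covers more of the query's vectors scores higher, which is the property a single pooled embedding loses.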

This untrained base version ensures deterministic projection layer initialization.

✨ Features

Version specificity

Unlike ColPali, this model accepts dynamic image resolutions and does not resize inputs to a fixed shape, so their aspect ratio is preserved. The maximum resolution is capped so that an image yields at most 768 image patches. Experiments indicate significant improvements with more image patches, though this increases memory requirements. This version is trained with colpali-engine==0.3.1 and uses the same data as described in the ColPali paper.
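The patch cap can be illustrated with a small sketch that shrinks an image to fit a patch budget while keeping its aspect ratio. This is not the processor's actual resizing code: the function name `fit_to_patch_budget` is hypothetical, and the 28-pixel patch side is an assumption based on Qwen2-VL's 14-pixel patches merged 2x2.

```python
import math


def fit_to_patch_budget(width, height, patch=28, max_patches=768):
    """Return (new_width, new_height, n_patches) within the patch budget.

    Assumes each patch covers `patch` x `patch` pixels (an assumption,
    see the text above) and that both sides must be multiples of `patch`.
    """
    # Snap each side to the nearest multiple of the patch size (at least one patch).
    w = max(patch, round(width / patch) * patch)
    h = max(patch, round(height / patch) * patch)
    if (w // patch) * (h // patch) > max_patches:
        # Scale both sides by the same factor so the area matches the budget,
        # then round down so the patch count cannot exceed it.
        scale = math.sqrt(max_patches * patch * patch / (width * height))
        w = max(patch, math.floor(width * scale / patch) * patch)
        h = max(patch, math.floor(height * scale / patch) * patch)
    return w, h, (w // patch) * (h // patch)


# A small image is only snapped to the patch grid; a large page scan is shrunk.
small = fit_to_patch_budget(128, 128)
page = fit_to_patch_budget(1654, 2339)
print(small, page)
```

Extremely elongated images (where one side would shrink below a single patch) are not handled by this sketch and could still exceed the budget.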

Model Training

Dataset

Our training dataset consists of 127,460 query-page pairs. It includes train sets from openly available academic datasets (63%) and a synthetic dataset composed of web-crawled PDF pages with pseudo-questions generated by a VLM (Claude-3 Sonnet) (37%). The training set is entirely in English, allowing us to study zero-shot generalization to non-English languages. We ensure no multi-page PDF document is used in both ViDoRe and the train set to avoid evaluation contamination. A 2% validation set is used for hyperparameter tuning.

Note: Multilingual data is present in the pretraining corpus of the language model and likely in the multimodal training.

Parameters

All models are trained for 1 epoch on the train set. Unless otherwise specified, we train models in bfloat16 format, using low-rank adapters (LoRA) with alpha = 32 and r = 32 on the transformer layers of the language model and the final randomly initialized projection layer. We use a paged_adamw_8bit optimizer. Training is done on an 8-GPU setup with data parallelism, a learning rate of 5e-5 with linear decay and 2.5% warmup steps, and a batch size of 32.
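The learning-rate schedule described above (linear warmup over 2.5% of steps, then linear decay from 5e-5) can be sketched as a plain function. The exact scheduler implementation used in training is not stated here, so the function name, the decay-to-zero endpoint, and the step count of 4000 are assumptions for illustration.

```python
def linear_warmup_decay_lr(step, total_steps, peak_lr=5e-5, warmup_frac=0.025):
    """Learning rate at `step` for linear warmup followed by linear decay.

    Assumes the rate starts at 0, reaches `peak_lr` at the end of warmup,
    and decays linearly to 0 at `total_steps`.
    """
    warmup_steps = max(1, round(total_steps * warmup_frac))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical run length; the real value depends on dataset size and batch size.
TOTAL_STEPS = 4000
for s in (0, 50, 100, 2050, 4000):
    print(s, linear_warmup_decay_lr(s, TOTAL_STEPS))
```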

Limitations

- Focus: The model mainly targets PDF-type documents and high-resource languages, which may limit its generalization to other document types or less-represented languages.

- Support: The model relies on multi-vector retrieval derived from the ColBERT late interaction mechanism, which may require engineering effort to adapt to widely used vector retrieval frameworks that lack native multi-vector support.

📦 Installation

Make sure colpali-engine is installed from source or with a version greater than 0.3.1. The transformers version must be > 4.45.0.

pip install git+https://github.com/illuin-tech/colpali
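As a quick sanity check of the version requirements above, the following sketch reads the installed package versions with the standard library. The helper names are hypothetical, the distribution name `colpali-engine` is assumed to match the pip package, and only the leading numeric part of each version string is compared.

```python
import re
from importlib.metadata import PackageNotFoundError, version


def version_tuple(v):
    """Parse the leading numeric release, e.g. '4.46.0.dev0' -> (4, 46, 0)."""
    m = re.match(r"\d+(?:\.\d+)*", v)
    return tuple(int(p) for p in m.group(0).split(".")) if m else ()


def is_newer_than(package, minimum):
    """True if `package` is installed with a release strictly above `minimum`."""
    try:
        installed = version(package)
    except PackageNotFoundError:
        return False
    return version_tuple(installed) > version_tuple(minimum)


# Minimum versions taken from the installation note above.
REQUIREMENTS = {"colpali-engine": "0.3.1", "transformers": "4.45.0"}
for name, minimum in REQUIREMENTS.items():
    status = "ok" if is_newer_than(name, minimum) else "missing or too old"
    print(f"{name} > {minimum}: {status}")
```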

💻 Usage Examples

Basic Usage

import torch
from PIL import Image
from transformers.utils.import_utils import is_flash_attn_2_available

from colpali_engine.models import ColQwen2, ColQwen2Processor

model = ColQwen2.from_pretrained(
    "vidore/colqwen2-v0.1",
    torch_dtype=torch.bfloat16,
    device_map="cuda:0",
    attn_implementation="flash_attention_2" if is_flash_attn_2_available() else None,
).eval()

processor = ColQwen2Processor.from_pretrained("vidore/colqwen2-v0.1")

images = [
    Image.new("RGB", (128, 128), color="white"),
    Image.new("RGB", (64, 32), color="black"),
]

queries = [
    "Is attention really all you need?",
    "What is the amount of bananas farmed in Salvador?",
]

batch_images = processor.process_images(images).to(model.device)
batch_queries = processor.process_queries(queries).to(model.device)

with torch.no_grad():
    image_embeddings = model(**batch_images)
    query_embeddings = model(**batch_queries)

scores = processor.score_multi_vector(query_embeddings, image_embeddings)
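Once `scores` is available, a typical next step is ranking pages per query. The sketch below assumes the scores form a 2-D matrix with one row per query and one column per image, converted to nested lists (for example with `scores.tolist()`); the helper `top_k_pages` and the sample values are hypothetical.

```python
def top_k_pages(score_rows, k=1):
    """For each query row, return the indices of the k highest-scoring pages."""
    ranked = []
    for row in score_rows:
        order = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        ranked.append(order[:k])
    return ranked


# Hypothetical scores for 2 queries against 3 pages.
example_scores = [
    [12.5, 9.1, 10.3],
    [7.2, 14.8, 6.0],
]
best = top_k_pages(example_scores, k=2)
print(best)
```

Higher scores mean a closer match, so the first index in each list is the best page for that query.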

📄 License

ColQwen2's vision language backbone model (Qwen2-VL) is under the Apache 2.0 license. The adapters attached to the model are under the MIT license.

📚 Documentation

Contact

- Manuel Faysse: manuel.faysse@illuin.tech

- Hugues Sibille: hugues.sibille@illuin.tech

- Tony Wu: tony.wu@illuin.tech

Citation

If you use any datasets or models from this organization in your research, please cite the original dataset as follows:

@misc{faysse2024colpaliefficientdocumentretrieval,
      title={ColPali: Efficient Document Retrieval with Vision Language Models},
      author={Manuel Faysse and Hugues Sibille and Tony Wu and Bilel Omrani and Gautier Viaud and Céline Hudelot and Pierre Colombo},
      year={2024},
      eprint={2407.01449},
      archivePrefix={arXiv},
      primaryClass={cs.IR},
      url={https://arxiv.org/abs/2407.01449},
}

| Property | Details |
|---|---|
| Library Name | colpali |
| Base Model | vidore/colqwen2-base |
| New Version | vidore/colqwen2-v1.0 |
| Pipeline Tag | visual-document-retrieval |
| License | apache-2.0 |
