🚀 BLIP-2, OPT-6.7b, pre-trained only

BLIP-2 model leveraging OPT-6.7b, a large language model with 6.7 billion parameters, for image-to-text tasks.

🚀 Quick Start

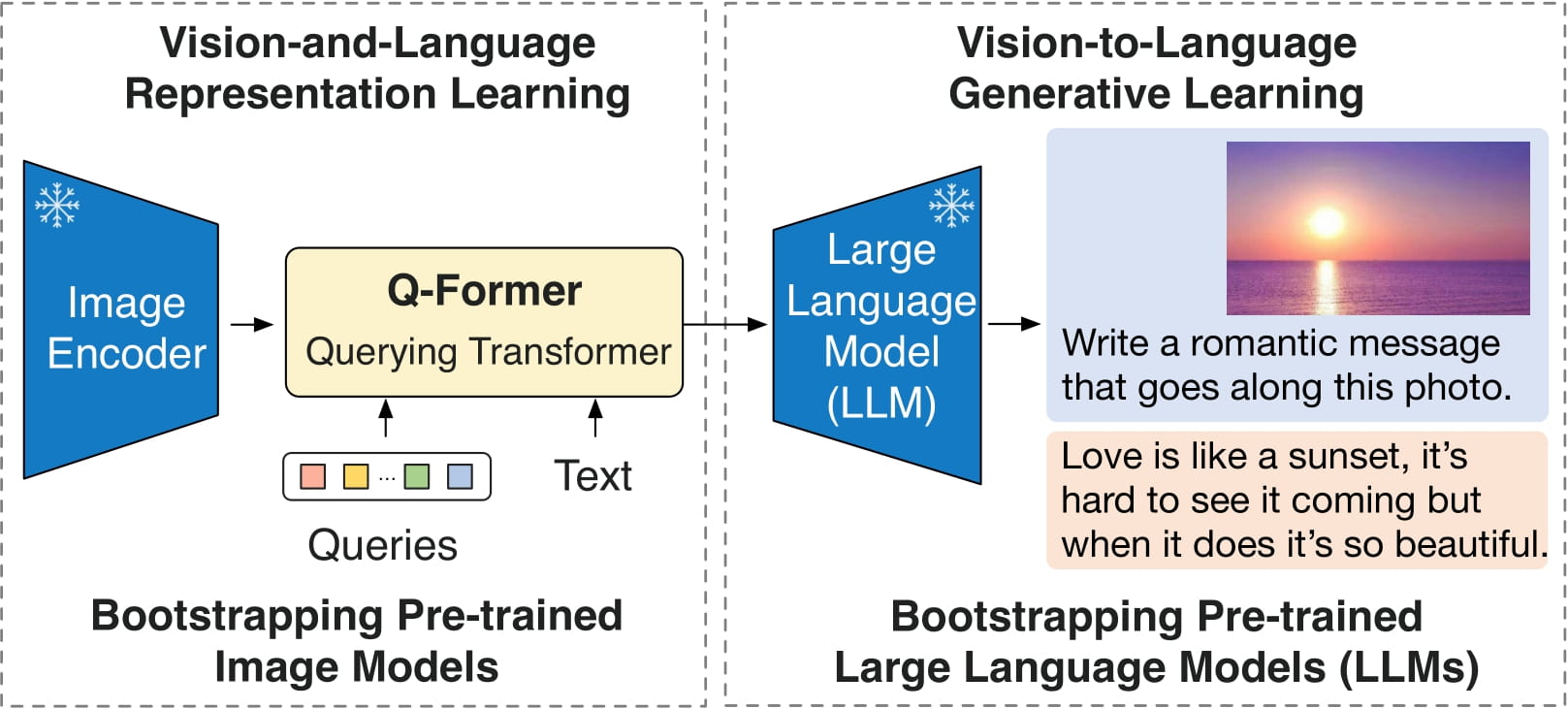

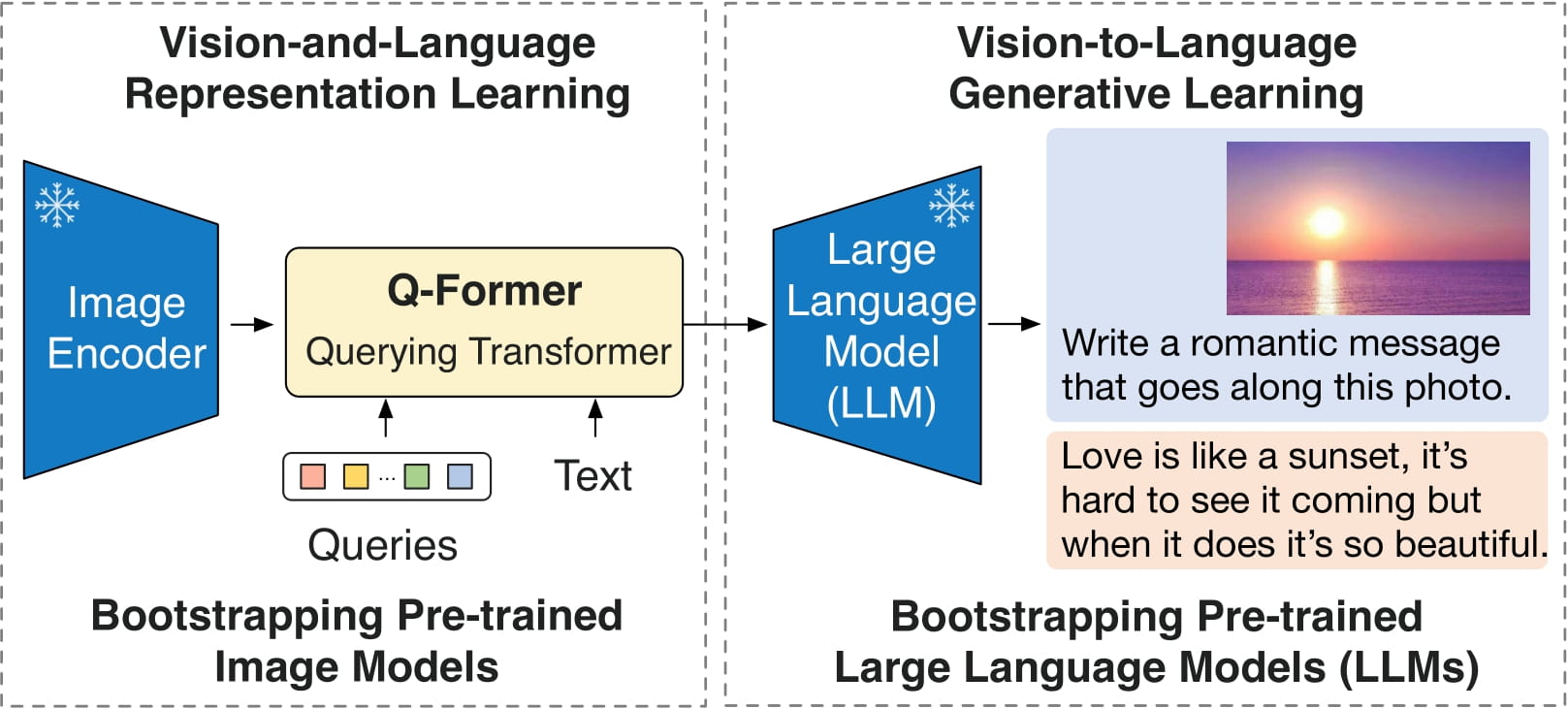

BLIP-2 is a powerful model that combines an image encoder, a Querying Transformer (Q-Former), and a large language model. It can be used for various tasks such as image captioning, visual question answering, and chat-like conversations.

✨ Features

- Multi-task Capability: Can be used for image captioning, visual question answering (VQA), and chat-like conversations.

- Bridge between Image and Language: The Querying Transformer bridges the gap between the image encoder and the large language model.

📚 Documentation

Model description

BLIP-2 consists of 3 models: a CLIP-like image encoder, a Querying Transformer (Q-Former), and a large language model.

The authors initialize the weights of the image encoder and the large language model from pre-trained checkpoints and keep them frozen while training the Querying Transformer. The Q-Former is a BERT-like Transformer encoder that maps a set of "query tokens" to query embeddings, which bridge the gap between the embedding space of the image encoder and that of the large language model.

The goal for the model is simply to predict the next text token, given the query embeddings and the previous text.
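Written as a formula, next-token prediction corresponds to the usual autoregressive language-modeling loss. The notation here is introduced for illustration and is not taken from the authors: $Z$ stands for the query embeddings produced by the Q-Former, $x_1, \dots, x_T$ for the text tokens, and $\theta$ for the parameters being trained.

$$
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid Z,\, x_{<t}\right)
$$

Each term scores how well the model predicts token $x_t$ from the image-derived embeddings and the tokens before it.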

This allows the model to be used for tasks like:

- image captioning

- visual question answering (VQA)

- chat-like conversations, by feeding the image and the previous conversation as a prompt to the model
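The three tasks above differ only in the text that accompanies the image. A minimal sketch of how such prompts might be assembled is shown below. The helper `build_prompt` is hypothetical, and the `Question: ... Answer:` template is an assumption based on common BLIP-2 usage, not something this model card specifies.

```python
from typing import Optional, Sequence, Tuple


def build_prompt(
    question: Optional[str] = None,
    history: Sequence[Tuple[str, str]] = (),
) -> str:
    """Build the text prompt that accompanies the image.

    - No question: empty prompt, i.e. plain image captioning.
    - Question only: a single visual question answering turn.
    - Question plus history: earlier (question, answer) turns are prepended,
      which gives a chat-like conversation.
    """
    if question is None:
        # Captioning needs no text; the model continues from the image alone.
        return ""
    # Assumed template: one "Question: ... Answer: ..." pair per earlier turn.
    turns = [f"Question: {q} Answer: {a}" for q, a in history]
    # The final turn is left open so the model generates the answer.
    turns.append(f"Question: {question} Answer:")
    return " ".join(turns)


caption_prompt = build_prompt()
vqa_prompt = build_prompt("how many cats are there?")
chat_prompt = build_prompt(
    "what are they lying on?",
    history=[("how many cats are there?", "two")],
)

print(repr(caption_prompt))
print(vqa_prompt)
print(chat_prompt)
```

The resulting string would be passed to the model together with the image. For chat, each generated answer is appended to `history` before the next question is asked.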

Direct Use and Downstream Use

You can use the raw model for conditional text generation given an image and optional text. See the model hub for fine-tuned versions on a task that interests you.

Bias, Risks, Limitations, and Ethical Considerations

BLIP2-OPT uses off-the-shelf OPT as the language model. It inherits the same risks and limitations as mentioned in Meta's model card.

> Like other large language models for which the diversity (or lack thereof) of training data induces downstream impact on the quality of our model, OPT-175B has limitations in terms of bias and safety. OPT-175B can also have quality issues in terms of generation diversity and hallucination. In general, OPT-175B is not immune from the plethora of issues that plague modern large language models.

BLIP2 is fine-tuned on image-text datasets (e.g. [LAION](https://laion.ai/blog/laion-400-open-dataset/)) collected from the internet. As a result, the model itself is potentially vulnerable to generating equivalently inappropriate content or replicating inherent biases in the underlying data.

BLIP2 has not been tested in real-world applications. It should not be directly deployed in any application. Researchers should first carefully assess the safety and fairness of the model in relation to the specific context it is being deployed in.

How to use

For code examples, see the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/blip-2#transformers.Blip2ForConditionalGeneration.forward.example).
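As a rough illustration of conditional text generation with this model, here is a minimal sketch using the `transformers` classes `Blip2Processor` and `Blip2ForConditionalGeneration`. The checkpoint name `Salesforce/blip2-opt-6.7b`, the helper `generate_text`, and the image path are assumptions made for this example. Loading the model downloads several gigabytes of weights and realistically needs a GPU.

```python
# Assumed Hub checkpoint name; adjust if your copy of the weights lives elsewhere.
MODEL_ID = "Salesforce/blip2-opt-6.7b"


def generate_text(image_path: str, prompt: str = "", max_new_tokens: int = 30) -> str:
    """Generate text for a local image, optionally conditioned on a text prompt."""
    # Imported lazily so that defining this function does not require the
    # heavy dependencies; calling it needs torch, transformers and Pillow.
    import torch
    from PIL import Image
    from transformers import Blip2ForConditionalGeneration, Blip2Processor

    # Use half precision on GPU to reduce memory; fall back to float32 on CPU.
    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32

    processor = Blip2Processor.from_pretrained(MODEL_ID)
    model = Blip2ForConditionalGeneration.from_pretrained(MODEL_ID, torch_dtype=dtype)
    model.to(device)

    image = Image.open(image_path).convert("RGB")
    if prompt:
        # Image plus text: e.g. visual question answering.
        inputs = processor(images=image, text=prompt, return_tensors="pt")
    else:
        # Image only: plain captioning.
        inputs = processor(images=image, return_tensors="pt")
    inputs = inputs.to(device, dtype)

    with torch.no_grad():
        generated_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()


# Example calls (hypothetical file name):
#   generate_text("example.jpg")
#   generate_text("example.jpg", "Question: how many cats are there? Answer:")
```

Passing no prompt yields a caption. Passing a question in the assumed `Question: ... Answer:` form asks the model to complete the answer.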

📄 License

This project is licensed under the MIT License.