🚀 Model Card for Segment Anything Model (SAM) - ViT Base (ViT-B) version, fine-tuned for medical image segmentation

This model is fine-tuned for medical image segmentation. It aims to address the challenges of medical image analysis and to provide high-quality segmentation results that can support medical research and diagnosis.

🚀 Quick Start

This model card describes the Segment Anything Model (SAM) fine-tuned for medical image segmentation. The sections below cover the model's key features, architecture, usage, and citation information.

✨ Features

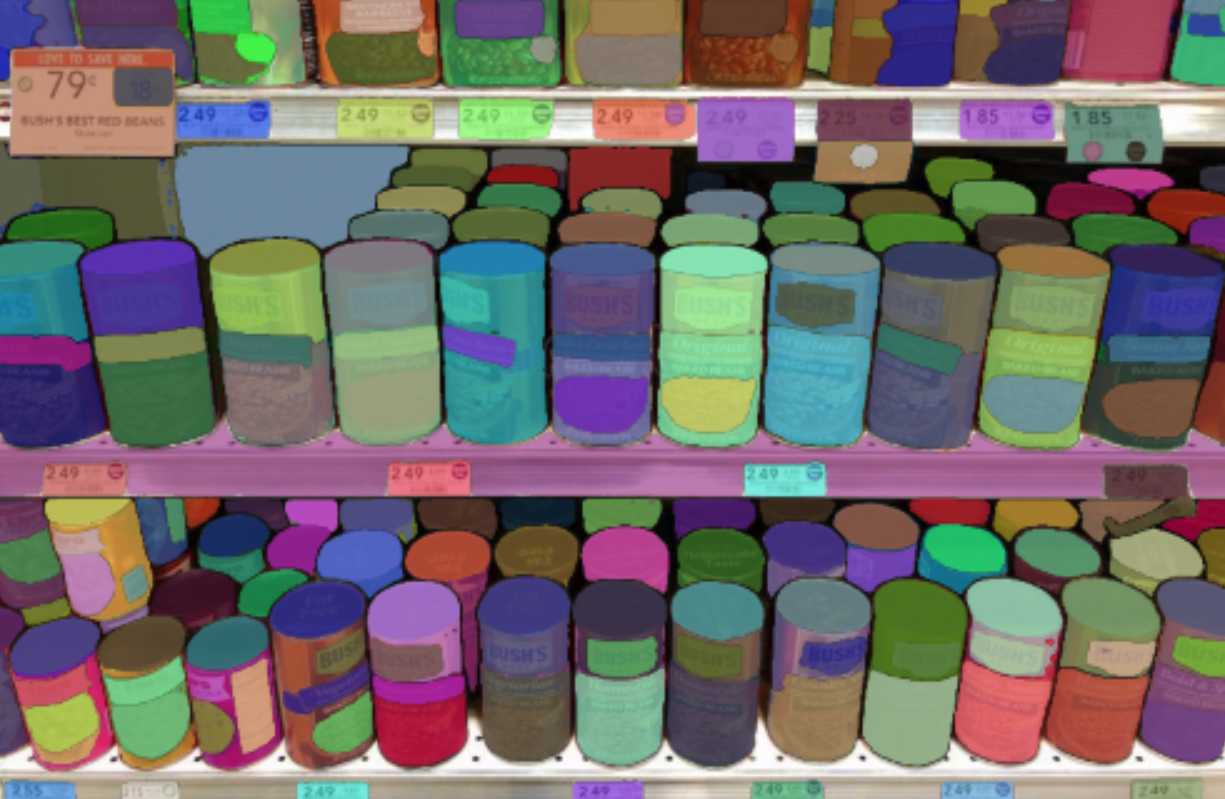

- High-quality segmentation: The Segment Anything Model (SAM) can produce high-quality object masks from input prompts such as points or boxes, and can generate masks for all objects in an image.

- Strong zero-shot performance: Trained on a large-scale dataset of 11 million images and 1.1 billion masks, SAM has strong zero-shot performance on a variety of segmentation tasks.

- Prompt-based design: The model is designed and trained to be promptable, enabling it to transfer zero-shot to new image distributions and tasks.
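Box prompts like those mentioned above are commonly expressed as pixel coordinates `(x_min, y_min, x_max, y_max)`. The minimal sketch below, assuming a binary mask stored as a list of rows, shows one way to derive such a box from an existing annotation. `mask_to_box` and its `margin` parameter are hypothetical helpers written for illustration; they are not part of SAM or MedSAM.

```python
from typing import List, Tuple


def mask_to_box(mask: List[List[int]], margin: int = 0) -> Tuple[int, int, int, int]:
    """Hypothetical helper: tight (x_min, y_min, x_max, y_max) box around foreground pixels."""
    # Row indices (y) and column indices (x) that contain at least one nonzero pixel.
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not ys:
        raise ValueError("mask contains no foreground pixels")
    height, width = len(mask), len(mask[0])
    # Optionally enlarge the box by `margin` pixels, clipped to the image bounds.
    return (
        max(min(xs) - margin, 0),
        max(min(ys) - margin, 0),
        min(max(xs) + margin, width - 1),
        min(max(ys) + margin, height - 1),
    )


# Example: a 5x6 mask with foreground in rows 1-2 and columns 2-4.
mask = [[0] * 6 for _ in range(5)]
for y in (1, 2):
    for x in (2, 3, 4):
        mask[y][x] = 1

print(mask_to_box(mask))            # (2, 1, 4, 2)
print(mask_to_box(mask, margin=1))  # (1, 0, 5, 3)
```

The `margin` option reflects the fact that a user-drawn box is rarely pixel-tight; how much slack to allow is a choice for your own pipeline.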

📚 Documentation

Model Details

The SAM model consists of the following modules:

- VisionEncoder: A ViT-based image encoder that computes image embeddings using attention on patches of the image, with relative positional embeddings.

- PromptEncoder: Generates embeddings for points and bounding boxes.

- MaskDecoder: A two-way transformer that performs cross-attention between the image embedding and the point embeddings and vice versa. The outputs are then processed.

- Neck: Predicts the output masks based on the contextualized masks produced by the MaskDecoder.

Detailed architecture of Segment Anything Model (SAM).
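To make the data flow between these modules concrete, the sketch below traces tensor shapes through the ViT-B variant. The numbers are assumptions taken from the original public SAM ViT-B configuration (a padded 1024x1024 input, 16x16 patches, 768-dimensional encoder tokens, a 256-channel image embedding passed to the mask decoder, and low-resolution masks at 4x the embedding grid); the `SamVitBShapes` class is a hypothetical illustration, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SamVitBShapes:
    """Hypothetical shape tracer; defaults assume the original SAM ViT-B configuration."""

    image_size: int = 1024   # encoder input: image resized and padded to 1024x1024
    patch_size: int = 16     # ViT patch size
    encoder_dim: int = 768   # ViT-B token width
    embed_dim: int = 256     # channels of the image embedding fed to the mask decoder
    mask_upscale: int = 4    # low-res masks are 4x the embedding grid

    def grid(self) -> int:
        # Number of patches along each side of the image.
        return self.image_size // self.patch_size

    def trace(self) -> dict:
        g = self.grid()
        low_res = g * self.mask_upscale
        return {
            "patch_tokens": (g * g, self.encoder_dim),    # tokens seen by the ViT blocks
            "image_embedding": (self.embed_dim, g, g),    # dense embedding for the decoder
            "low_res_mask": (low_res, low_res),           # mask logits before upsampling
        }


for name, shape in SamVitBShapes().trace().items():
    print(name, shape)
# patch_tokens (4096, 768)
# image_embedding (256, 64, 64)
# low_res_mask (256, 256)
```

The low-resolution mask logits are then upsampled back to the original image size in post-processing.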

Table of Contents

- TL;DR

- Model Details

- Usage

- Citation

TL;DR

Link to original SAM repository

Link to original MedSAM repository

The Segment Anything Model (SAM) produces high-quality object masks from input prompts such as points or boxes, and it can be used to generate masks for all objects in an image. It has been trained on a dataset of 11 million images and 1.1 billion masks, and has strong zero-shot performance on a variety of segmentation tasks.
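In the original SAM release, generating masks for all objects in an image is done by prompting the model with a regular grid of points (the `SamAutomaticMaskGenerator` class uses 32 points per side by default) and then filtering and deduplicating the resulting masks. The sketch below covers only the grid step, using the standard library; `build_point_grid` here is written for illustration and its signature is an assumption, and the filtering step is not shown.

```python
from typing import List, Tuple


def build_point_grid(n_per_side: int, width: int, height: int) -> List[Tuple[float, float]]:
    """Return n_per_side**2 (x, y) pixel coordinates at the centres of a regular tiling.

    Illustrative sketch: points are evenly spaced in normalized [0, 1] coordinates,
    offset by half a cell from the border, then scaled to the image size.
    """
    if n_per_side < 1:
        raise ValueError("n_per_side must be >= 1")
    offset = 1.0 / (2 * n_per_side)   # half a cell from each border
    step = 1.0 / n_per_side           # spacing between neighbouring points
    coords = [offset + i * step for i in range(n_per_side)]
    # Row-major order: y varies slowest, x fastest.
    return [(x * width, y * height) for y in coords for x in coords]


print(build_point_grid(2, 100, 200))
# [(25.0, 50.0), (75.0, 50.0), (25.0, 150.0), (75.0, 150.0)]
print(len(build_point_grid(32, 1024, 1024)))  # 1024
```

Each grid point would then be used as a single foreground point prompt.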

The abstract of the paper states:

We introduce the Segment Anything (SA) project: a new task, model, and dataset for image segmentation. Using our efficient model in a data collection loop, we built the largest segmentation dataset to date (by far), with over 1 billion masks on 11M licensed and privacy respecting images. The model is designed and trained to be promptable, so it can transfer zero-shot to new image distributions and tasks. We evaluate its capabilities on numerous tasks and find that its zero-shot performance is impressive -- often competitive with or even superior to prior fully supervised results. We are releasing the Segment Anything Model (SAM) and corresponding dataset (SA-1B) of 1B masks and 11M images at https://segment-anything.com to foster research into foundation models for computer vision.

Disclaimer: Content from this model card has been written by the Hugging Face team, and parts of it were copy-pasted from the original SAM model card.

Usage

Refer to the following resources:

- a demo notebook showcasing inference with MedSAM

- a demo notebook showcasing general usage of SAM

- the documentation
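One preprocessing step common to SAM-style pipelines is resizing the image so its longest side matches the encoder input size (1024 pixels), padding the bottom and right edges to a square, and rescaling box prompts by the same factors. The sketch below assumes the rounding used by the original SAM `ResizeLongestSide` transform; MedSAM's own pipeline or a library processor (for example Hugging Face's `SamProcessor`) may handle this internally or differently, so check their documentation. The function names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def preprocess_shape(old_h: int, old_w: int, long_side: int = 1024) -> Tuple[int, int]:
    """Hypothetical helper: (height, width) after scaling the longer side to `long_side`."""
    scale = long_side / max(old_h, old_w)
    # Round to the nearest integer size.
    return int(old_h * scale + 0.5), int(old_w * scale + 0.5)


def padding(new_hw: Tuple[int, int], long_side: int = 1024) -> Tuple[int, int]:
    """Hypothetical helper: rows and columns of padding added at the bottom and right."""
    new_h, new_w = new_hw
    return long_side - new_h, long_side - new_w


def rescale_box(box: Box, old_hw: Tuple[int, int], new_hw: Tuple[int, int]) -> Box:
    """Hypothetical helper: map a box from original-image pixels to resized-image pixels."""
    (old_h, old_w), (new_h, new_w) = old_hw, new_hw
    sx, sy = new_w / old_w, new_h / old_h
    x0, y0, x1, y1 = box
    return (x0 * sx, y0 * sy, x1 * sx, y1 * sy)


new_hw = preprocess_shape(480, 640)
print(new_hw)           # (768, 1024)
print(padding(new_hw))  # (256, 0)
print(rescale_box((10, 20, 100, 200), (512, 256), preprocess_shape(512, 256)))
# (20.0, 40.0, 200.0, 400.0)
```

Because padding is added only at the bottom and right, box coordinates need scaling but no offset.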

Citation

If you use this model, please use the following BibTeX entry.

@article{kirillov2023segany,
  title={Segment Anything},
  author={Kirillov, Alexander and Mintun, Eric and Ravi, Nikhila and Mao, Hanzi and Rolland, Chloe and Gustafson, Laura and Xiao, Tete and Whitehead, Spencer and Berg, Alexander C. and Lo, Wan-Yen and Doll{\'a}r, Piotr and Girshick, Ross},
  journal={arXiv:2304.02643},
  year={2023}
}

📄 License

This model is licensed under the Apache-2.0 license.