🚀 MiniCPM-V-2_6-RK3588-1.1.4

This version of MiniCPM-V-2_6 is optimized for RK3588 NPU, offering high performance with specific quantization.

Dataset and Language Information

| Property | Details |
|---|---|
| Datasets | openbmb/RLAIF-V-Dataset |
| Language | Multilingual |
| Library Name | transformers |
| Pipeline Tag | image-text-to-text |
| Tags | minicpm-v, vision, ocr, multi-image, video, custom_code |

🚀 Quick Start

This version of MiniCPM-V-2_6 has been converted to run on the RK3588 NPU with the following quantization types: `w8a8`, `w8a8_g128`, `w8a8_g256`, and `w8a8_g512`. It is compatible with RKLLM version 1.1.4.
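The quantization type names listed above are not explained in this card. As a rough illustration only, the sketch below assumes that `w8a8` refers to 8-bit weights and 8-bit activations, and that a suffix such as `_g128` denotes a group of 128 weights sharing one scale. Both readings are assumptions, and the function names are hypothetical; none of this is the RKLLM implementation.

```python
def quantize_group(values):
    """Symmetric int8 quantization of one group: one shared scale per group."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range after rounding.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return scale, codes


def quantize_groupwise(weights, group_size):
    """Split a flat weight list into groups and quantize each one separately."""
    return [quantize_group(weights[i:i + group_size])
            for i in range(0, len(weights), group_size)]


def dequantize_groupwise(groups):
    """Map int8 codes back to approximate float weights."""
    return [scale * code for scale, codes in groups for code in codes]


# Toy example with a group size of 4 (a "g128" layout would use 128, by assumption).
weights = [0.1, -0.2, 0.3, 0.4, 10.0, -5.0, 2.5, 0.0]
groups = quantize_groupwise(weights, group_size=4)
restored = dequantize_groupwise(groups)
for original, approx in zip(weights, restored):
    print(f"{original:+.3f} -> {approx:+.3f}")
```

In this toy case the large values in the second group do not coarsen the scale of the first group, which is the usual motivation for smaller groups; the cost is storing more scales.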


✨ Features

Original Model Card for MiniCPM-V-2_6

MiniCPM-V 2.6 is the latest and most capable model in the MiniCPM-V series. Built on SigLip-400M and Qwen2-7B with a total of 8B parameters, it offers significant performance improvements and new features.

- 🔥 Leading Performance: Achieves an average score of 65.2 on the latest OpenCompass, surpassing popular proprietary models like GPT-4o mini, GPT-4V, Gemini 1.5 Pro, and Claude 3.5 Sonnet for single image understanding with only 8B parameters.

- 🖼️ Multi Image Understanding and In-context Learning: Can perform conversation and reasoning over multiple images, achieving state-of-the-art performance on multi-image benchmarks and showing promising in-context learning capability.

- 🎬 Video Understanding: Accepts video inputs, performing conversation and providing dense captions for spatial-temporal information. Outperforms GPT-4V, Claude 3.5 Sonnet, and LLaVA-NeXT-Video-34B on Video-MME with/without subtitles.

- 💪 Strong OCR Capability and Others: Can process images with any aspect ratio and up to 1.8 million pixels, achieving state-of-the-art performance on OCRBench. Features trustworthy behaviors with lower hallucination rates and supports multilingual capabilities.

- 🚀 Superior Efficiency: Shows state-of-the-art token density, improving inference speed, latency, memory usage, and power consumption. Can efficiently support real-time video understanding on end-side devices like iPad.

- 💫 Easy Usage: Can be used in various ways, including local CPU inference, quantized models in different formats, high-throughput inference, fine-tuning, local WebUI demo setup, and online web demo.

Evaluation

Single Image Results

* Evaluated using chain-of-thought prompting.

+ Token Density: number of pixels encoded into each visual token at maximum resolution.

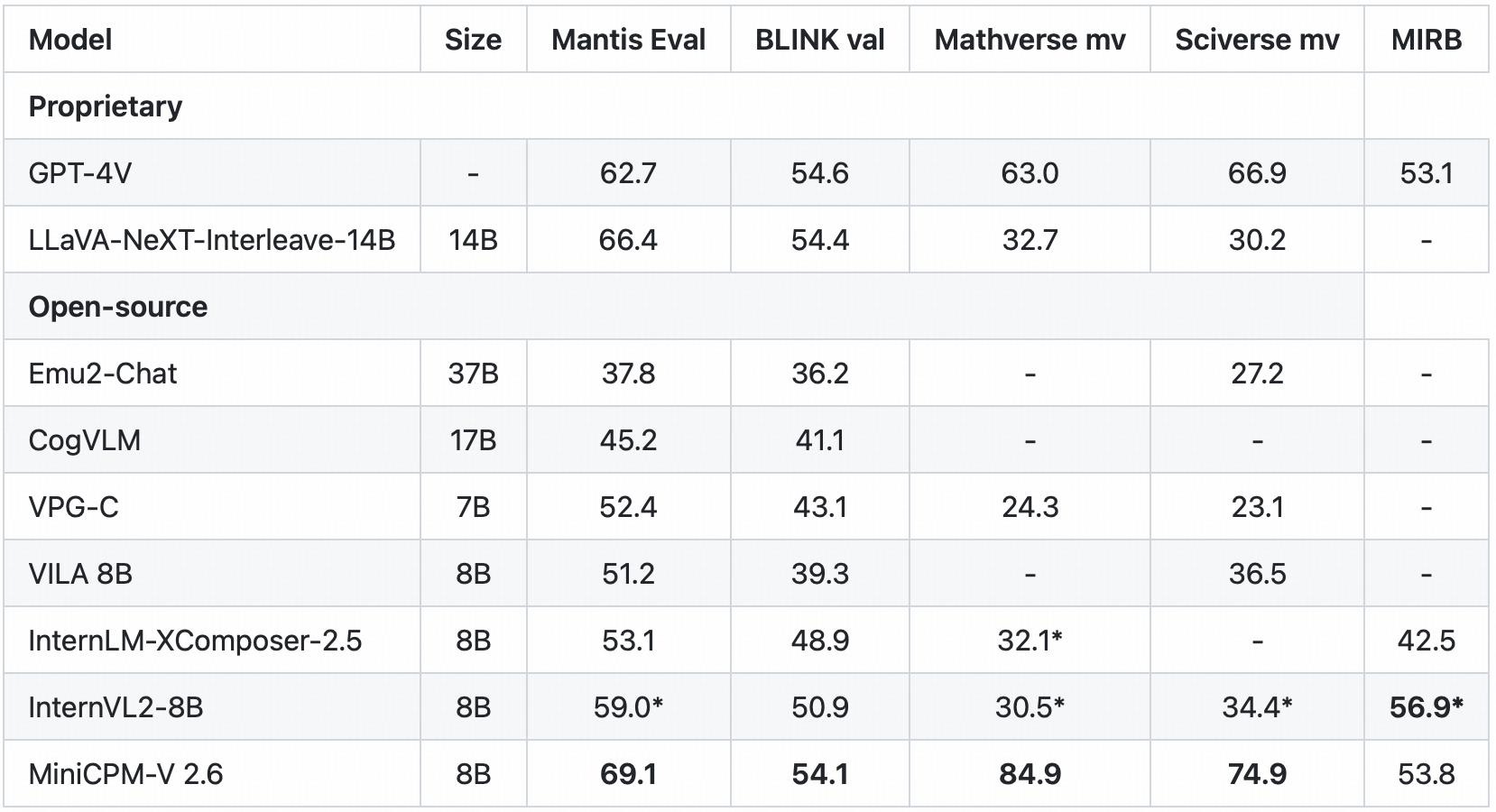
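Token density, as defined in the footnote above, is a simple ratio, so it can be worked through by hand. The sketch below is illustrative only: the 1344 x 1344 resolution (about 1.8 million pixels) and the count of 640 visual tokens are assumed example inputs, not figures stated in this card, and `token_density` is a hypothetical helper name.

```python
def token_density(width, height, num_visual_tokens):
    """Pixels encoded per visual token at the given resolution."""
    return (width * height) / num_visual_tokens


# Assumed example inputs: a 1344 x 1344 image and 640 visual tokens.
density = token_density(1344, 1344, 640)
print(round(density))  # prints 2822
```

A higher value means fewer visual tokens for the same image, which is why the metric is tied to speed and memory use.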

Multi-image Results

* We evaluated the officially released checkpoint ourselves.

Video Results

Click to view few-shot results on TextVQA, VizWiz, VQAv2, OK-VQA.

* denotes zero image shot and two additional text shots following Flamingo.

+ Evaluated the pretraining checkpoint without SFT.

Examples

Click to view more cases.

The model is deployed on end devices. The demo video is a raw, unedited screen recording on an iPad Pro.

📚 Documentation

Demo

Click here to try the Demo of MiniCPM-V 2.6.

💻 Usage Examples

Basic Usage

Inference using Hugging Face transformers on NVIDIA GPUs. Requirements, tested on Python 3.10:

```
Pillow==10.1.0
torch==2.1.2
torchvision==0.16.2
transformers==4.40.0
sentencepiece==0.1.99
decord
```

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16)
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

image = Image.open('xx.jpg').convert('RGB')
question = 'What is in the image?'
msgs = [{'role': 'user', 'content': [image, question]}]

# Single call: the answer is returned in one piece.
res = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer
)
print(res)

# Streaming call: with sampling=True and stream=True the result is iterated piece by piece.
res = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer,
    sampling=True,
    stream=True
)

generated_text = ""
for new_text in res:
    generated_text += new_text
    print(new_text, flush=True, end='')
```
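The `msgs` list used above is a plain list of role/content dictionaries, so a conversation can be extended by appending turns to it. The sketch below shows one way to do that without loading the model. The helper names `user_turn`, `assistant_turn`, and `collect_stream` are hypothetical, and the image and streamed chunks are placeholders; treat it as an outline of the message structure, not as part of the model's API.

```python
def user_turn(*parts):
    """Build a user message; parts may mix PIL images and strings."""
    return {'role': 'user', 'content': list(parts)}


def assistant_turn(text):
    """Build an assistant message holding a previous answer."""
    return {'role': 'assistant', 'content': [text]}


def collect_stream(chunks):
    """Join the text pieces yielded by a streaming call into one string."""
    return ''.join(chunks)


# Placeholder standing in for a PIL image so the sketch runs without a model.
image = '<PIL.Image placeholder>'
msgs = [user_turn(image, 'What is in the image?')]

# Placeholder generator standing in for model.chat(..., sampling=True, stream=True).
first_answer = collect_stream(iter(['A ', 'red ', 'bicycle.']))

# Record the answer, then ask a follow-up question in the same conversation.
msgs.append(assistant_turn(first_answer))
msgs.append(user_turn('What color is it?'))
print([m['role'] for m in msgs])
```

Passing the extended `msgs` to `model.chat` again would then give the model the earlier turn as context.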

Advanced Usage

#### Chat with Multiple Images

<details>

<summary> Click to show Python code running MiniCPM-V 2.6 with multiple-image input. </summary>

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16) # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

image1 = Image.open('image1.jpg').convert('RGB')
image2 = Image.open('image2.jpg').convert('RGB')
question = 'Compare image 1 and image 2, tell me about the differences between image 1 and image 2.'

msgs = [{'role': 'user', 'content': [image1, image2, question]}]

answer = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer
)
print(answer)
```

</details>

#### In-context Few-shot Learning

<details>

<summary> Click to view Python code running MiniCPM-V 2.6 with few-shot input. </summary>

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16) # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

question = "production date"
image1 = Image.open('example1.jpg').convert('RGB')
answer1 = "2023.08.04"
image2 = Image.open('example2.jpg').convert('RGB')
answer2 = "2007.04.24"
image_test = Image.open('test.jpg').convert('RGB')

msgs = [
    {'role': 'user', 'content': [image1, question]}, {'role': 'assistant', 'content': [answer1]},
    {'role': 'user', 'content': [image2, question]}, {'role': 'assistant', 'content': [answer2]},
    {'role': 'user', 'content': [image_test, question]}
]

answer = model.chat(
    image=None,
    msgs=msgs,
    tokenizer=tokenizer
)
print(answer)
```

</details>

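#### Chat with Video

<details>

<summary> Click to view Python code running MiniCPM-V 2.6 with video input. </summary>

```python
import torch
from PIL import Image
from transformers import AutoModel, AutoTokenizer
from decord import VideoReader, cpu # pip install decord

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True,
    attn_implementation='sdpa', torch_dtype=torch.bfloat16) # sdpa or flash_attention_2, no eager
model = model.eval().cuda()
tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-2_6', trust_remote_code=True)

MAX_NUM_FRAMES = 64 # if cuda OOM set a smaller number

def encode_video(video_path):
    def uniform_sample(l, n):
        # Pick n evenly spaced items, each taken from the middle of its segment.
        gap = len(l) / n
        idxs = [int(i * gap + gap / 2) for i in range(n)]
        return [l[i] for i in idxs]

    vr = VideoReader(video_path, ctx=cpu(0))
    sample_fps = round(vr.get_avg_fps() / 1) # frame step for about one frame per second
    frame_idx = [i for i in range(0, len(vr), sample_fps)]
    # The source text is cut off here; everything below is an assumed completion.
    if len(frame_idx) > MAX_NUM_FRAMES:
        frame_idx = uniform_sample(frame_idx, MAX_NUM_FRAMES)
    frames = vr.get_batch(frame_idx).asnumpy()
    return [Image.fromarray(f.astype('uint8')) for f in frames]

frames = encode_video('video_test.mp4') # placeholder path
question = 'Describe the video'
msgs = [{'role': 'user', 'content': frames + [question]}]

# Assumed decode settings for video; lower max_slice_nums if CUDA runs out of memory.
answer = model.chat(image=None, msgs=msgs, tokenizer=tokenizer,
    use_image_id=False, max_slice_nums=2)
print(answer)
```

</details>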